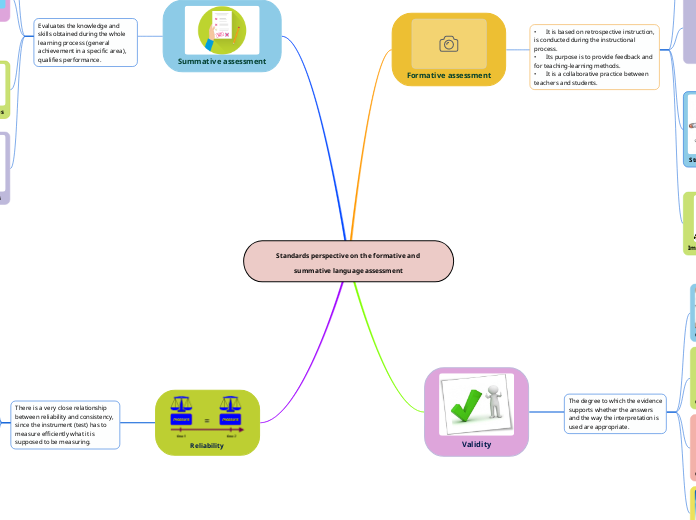

Standards perspective on formative and summative language assessment

Formative assessment

• It is based on retrospective instruction and is conducted during the instructional process.

• Its purpose is to provide feedback and to inform teaching and learning methods.

• It is a collaborative practice between teachers and students.

Types

Formal

Learning expectations based on a timeline.

Evidence of learning based on activities performed and documented.

Interpretation of instruction or teaching methods based on evidence gathered.

Informal

Learning expectations that are carried out without a plan made in advance.

Evidence of learning gathered in no set order and documented casually.

Interpretation of instruction or teaching methods at the teacher's discretion.

Principles

Performance standards

Combining effort and time in activities

Good feedback for self-evaluation

Encourage motivation

Don't disturb students' concentration.

Allow room for responses to feedback.

Strategies & techniques

Allow students to be involved in the process of making decisions about their classes and learning.

Target-setting

Students become responsible for their own learning process and meaningful knowledge is achieved.

Sharing learning objectives

Teacher and student commit to achieving the objectives set (in terms of performance and responsibility).

Structuring lesson introductions

Start the class with a logical order (greeting, topic, objectives) and a brief description that captures the students' attention.

Impact on students' learning

Acceptance by students

They engage with their learning

They strive to achieve the shared objectives

It is a useful resource throughout the duration of the course

Good class management is achieved

It is effective at the time of evaluation

Validity

The degree to which evidence supports that the interpretation of the answers, and the way that interpretation is used, are appropriate.

Content-Related Evidence

Content validity concerns how reasonably the assessment reflects the skills or knowledge provided in the course.

Requirements

Sample the subject matter from which conclusions are drawn.

The performance of the examinees must conform to the content to be assessed.

Criterion-Related Evidence

Present when the results agree with those of a criterion measure, such as other tests of the same nature.
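One common way to check how closely a test's results track a criterion measure is a correlation coefficient. The sketch below is illustrative only: the function name `pearson_r` and the sample scores are assumptions, not part of the source notes, and Pearson's r is just one possible statistic for this purpose.

```python
from math import sqrt


def pearson_r(test_scores, criterion_scores):
    """Pearson correlation between scores on a test and on a criterion measure.

    Hypothetical helper for illustration; both lists must hold one score
    per examinee, in the same order.
    """
    n = len(test_scores)
    if n != len(criterion_scores) or n < 2:
        raise ValueError("need two equally long lists with at least 2 scores")

    mean_x = sum(test_scores) / n
    mean_y = sum(criterion_scores) / n

    # Sum of cross-products and sums of squared deviations from the means.
    cross = sum((x - mean_x) * (y - mean_y)
                for x, y in zip(test_scores, criterion_scores))
    ss_x = sum((x - mean_x) ** 2 for x in test_scores)
    ss_y = sum((y - mean_y) ** 2 for y in criterion_scores)

    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when all scores are equal")

    return cross / sqrt(ss_x * ss_y)


# Made-up scores for five examinees on a new test and an established one.
new_test = [55, 62, 70, 81, 90]
established_test = [50, 65, 68, 85, 88]
r = pearson_r(new_test, established_test)
```

A value of `r` close to 1 would suggest that the new test ranks examinees much as the criterion measure does; what counts as "close enough" depends on the stakes of the assessment.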

Construct-Related Evidence

A construct comprises a set of knowledge and skills in a specific area. It cannot be measured directly, so additional data are required to make inferences about it.

Consequential Validity

It refers to the effect and impact of the evaluation on the students' reality.

Summative assessment

Evaluates the knowledge and skills acquired over the whole learning process (general achievement in a specific area) and grades performance.

Objectives

Determine the success of students at a given point in time.

Establish eligibility for study programs.

Principles

Practicality

It is affordable in terms of cost and time; in addition, the evaluation process is efficient.

Reliability

Produces consistent, dependable results each time it is applied

Validity

Must conform to guidelines that justify meaning and utility

Authenticity

Must take into account the real world in terms of applicability and concordance.

Strategies & techniques

Product-assessments

Deliver the final product previously agreed upon according to the guidelines.

Performance-assessments

Improve student understanding in a practical way

Process-focused assessments

Prioritize student ingenuity

Impact on students

Students benefit from the external uses of this assessment (professional qualifications, job selection, school performance).

Motivates students to continue their efforts.

Encourages persistence with learning.

Reliability

Reliability is closely related to consistency: the instrument (test) has to measure what it is supposed to measure, and do so consistently.

Characteristics

Replicable

There is a small probability of error

Student-Related Reliability

An evaluation can be inconsistent when student factors (fatigue, anxiety, illness, etc.) distort the true value of a given score.

Rater Reliability

Human error or subjectivity can affect the scoring process.

Inter-rater reliability

Is at risk when two or more evaluators score the same data and obtain different results.

Intra-rater reliability

Concerns whether the same evaluator scores the same data consistently at different times.
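Agreement between two sets of ratings can be quantified. The sketch below uses Cohen's kappa, a widely used statistic that corrects raw agreement for the agreement expected by chance. It is offered as an illustration, not as the method the source prescribes; the function name `cohens_kappa` and the sample ratings are assumptions. The same calculation applies to intra-rater reliability if the two lists hold one evaluator's ratings from two different occasions.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two lists of categorical ratings of the same items.

    Hypothetical helper for illustration; ratings_a[i] and ratings_b[i]
    must refer to the same piece of student work.
    """
    n = len(ratings_a)
    if n == 0 or n != len(ratings_b):
        raise ValueError("need two equally long, non-empty lists of ratings")

    # Proportion of items on which the two sets of ratings agree.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n

    # Agreement expected by chance, from each rater's category frequencies.
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if expected == 1.0:
        # Both raters used a single identical category for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Made-up ratings of four essays by two evaluators.
rater_1 = ["pass", "pass", "fail", "fail"]
rater_2 = ["pass", "fail", "fail", "fail"]
kappa = cohens_kappa(rater_1, rater_2)  # 0.5
```

Here the raters agree on three of four essays (0.75), but half of that agreement would be expected by chance given how often each rater uses each category, so kappa comes out at 0.5.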

Test Administration Reliability

Refers to the conditions under which the test will be taken (environment and familiarity with the process).

Test Reliability

The test should suit the target population, with special attention paid to its design and with items written clearly and concisely.