By Aditya Sharma, 10 months ago

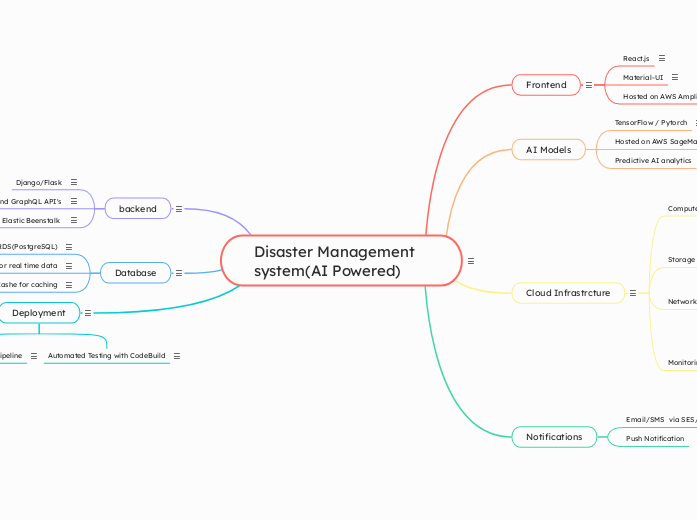

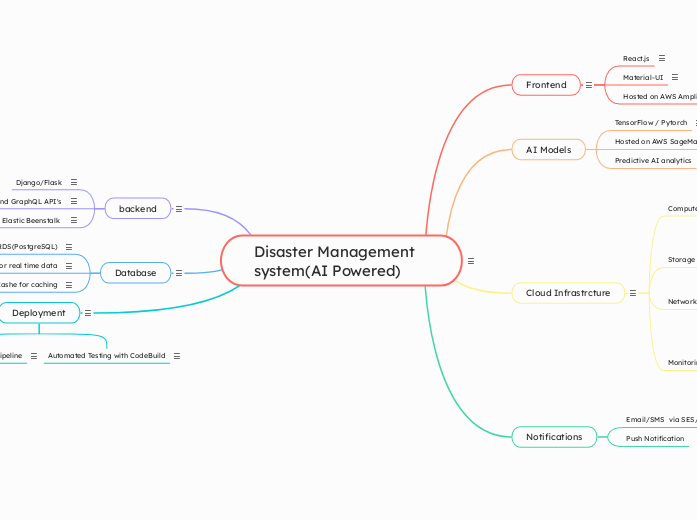

Disaster Management System (AI-Powered)

The AI-Powered Disaster Relief Allocation system aims to streamline the allocation of relief resources during disasters. The system leverages AI, deep learning, and cloud computing to process data such as disaster severity, affected regions, available resources, and priority metrics. The project will culminate in a full-stack application with a robust architecture, deployed entirely on the cloud.

The entire application follows a CI/CD pipeline using AWS CodePipeline and AWS CodeBuild for automated testing and deployment. The backend, frontend, and infrastructure code are managed in a GitHub or AWS CodeCommit repository, with automated build and deployment processes triggered upon code changes. The system ensures that all updates are deployed smoothly with minimal downtime, enabling a continuous delivery process for new features and bug fixes.

CodeBuild

Notes: CodeBuild will run the automated tests (unit, integration, and end-to-end) before code is deployed to production.

Features:

Automated testing pipeline.

Integration with CodePipeline for smooth CI/CD workflow.

CodePipeline

Notes: CodePipeline will automate the deployment process, providing continuous integration and delivery for the frontend and backend.

Features:

GitHub integration for automated deployments.

Testing and validation before production deployment.

The database for the AI-powered disaster relief allocation system will be designed to handle structured and unstructured data efficiently, ensuring quick access to real-time information and scalability for future growth.

ElastiCache for Caching

Notes: ElastiCache will be used to cache frequently accessed data, such as disaster status, reducing the load on RDS and DynamoDB.

Features:

Caching disaster severity reports and resource status.

Improving response times for frequently requested data.
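
The caching described above typically follows the cache-aside pattern: read from the cache first and fall back to RDS or DynamoDB on a miss. The sketch below is a minimal illustration under stated assumptions: `TTLCache` is an in-memory stand-in for ElastiCache so the example runs locally, and `get_disaster_status`, the `disaster-status:<id>` key format, and the 30-second TTL are hypothetical. Against a real ElastiCache (Redis) endpoint, the same logic would use a Redis client's `get` and `set(key, value, ex=ttl_seconds)`.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """In-memory stand-in for ElastiCache (Redis) so the sketch runs locally."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._store: Dict[str, Tuple[float, Any]] = {}
        self._clock = clock

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Expired entries are evicted lazily when they are read.
            del self._store[key]
            return None
        return value

    def set(self, key: str, value: Any, ttl_seconds: float) -> None:
        self._store[key] = (self._clock() + ttl_seconds, value)


def get_disaster_status(disaster_id: str, cache: TTLCache,
                        load_from_db: Callable[[str], Any],
                        ttl_seconds: float = 30.0) -> Any:
    """Cache-aside read: try the cache first, query the database only on a miss."""
    key = f"disaster-status:{disaster_id}"  # hypothetical key format
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = load_from_db(disaster_id)  # e.g. a query against RDS or DynamoDB
    cache.set(key, value, ttl_seconds)
    return value
```

A short TTL keeps disaster status reasonably fresh while still absorbing bursts of identical dashboard reads.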

DynamoDB

Notes: DynamoDB will store real-time data, such as resource availability and allocation status, which require fast, low-latency reads and writes.

Features:

Low-latency, high-throughput NoSQL database.

Automatic scaling based on traffic.
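
Because several allocation requests may hit the same resource at once, a conditional write keeps stock from going negative. This is a sketch assuming a hypothetical `ResourceInventory` table keyed by `resource_id` (partition key) and `region` (sort key), with hypothetical `available_units` and `allocated_units` attributes. The function only builds the keyword arguments for boto3's `Table.update_item`, so it runs without AWS access.

```python
from typing import Any, Dict


def build_allocation_update(resource_id: str, region: str, units: int) -> Dict[str, Any]:
    """Keyword arguments for boto3 Table.update_item that atomically reserve
    `units` of a resource and fail if not enough stock remains."""
    if units <= 0:
        raise ValueError("units must be positive")
    return {
        "Key": {"resource_id": resource_id, "region": region},
        # Decrement available stock and increment the allocated counter in one write.
        "UpdateExpression": (
            "SET available_units = available_units - :n, "
            "allocated_units = if_not_exists(allocated_units, :zero) + :n"
        ),
        # DynamoDB rejects the write if the stock check fails, so two concurrent
        # requests cannot both take the last units.
        "ConditionExpression": "available_units >= :n",
        "ExpressionAttributeValues": {":n": units, ":zero": 0},
        "ReturnValues": "UPDATED_NEW",
    }
```

Usage would look like `boto3.resource("dynamodb").Table("ResourceInventory").update_item(**build_allocation_update("water-001", "north", 50))`; when stock is insufficient the call fails with a `ConditionalCheckFailedException`, which the backend can report as a failed allocation.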

RDS

Notes: RDS will store structured data, such as user information, disaster reports, and resource logs.

Features:

Highly available and scalable relational database.

Support for complex queries and transactions.
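
To make "complex queries and transactions" concrete, the sketch below uses an in-memory SQLite database as a local stand-in for RDS; on RDS the same SQL would run through a PostgreSQL or MySQL driver. The `disaster_reports` and `resource_logs` tables, their columns, and the 1 to 5 severity scale are hypothetical. `log_allocation` writes all rows of one allocation in a single transaction, and `units_by_region` is a join plus aggregate.

```python
import sqlite3

SCHEMA = """
CREATE TABLE disaster_reports (
    id          INTEGER PRIMARY KEY,
    region      TEXT NOT NULL,
    severity    INTEGER NOT NULL CHECK (severity BETWEEN 1 AND 5),
    reported_at TEXT NOT NULL
);
CREATE TABLE resource_logs (
    id          INTEGER PRIMARY KEY,
    report_id   INTEGER NOT NULL REFERENCES disaster_reports(id),
    resource    TEXT NOT NULL,
    units       INTEGER NOT NULL CHECK (units > 0)
);
"""


def log_allocation(conn: sqlite3.Connection, report_id: int, items: dict) -> None:
    """Record every resource of one allocation atomically: all rows or none."""
    with conn:  # commits on success, rolls back if any INSERT raises
        for resource, units in items.items():
            conn.execute(
                "INSERT INTO resource_logs (report_id, resource, units) VALUES (?, ?, ?)",
                (report_id, resource, units),
            )


def units_by_region(conn: sqlite3.Connection) -> list:
    """Total allocated units per region: a join plus aggregate suited to a relational store."""
    return conn.execute(
        """SELECT d.region, SUM(r.units)
           FROM resource_logs r JOIN disaster_reports d ON d.id = r.report_id
           GROUP BY d.region ORDER BY d.region"""
    ).fetchall()
```

If any row in an allocation violates a constraint, the whole allocation is rolled back, so the logs never show a partially recorded shipment.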

The backend of the AI-powered disaster relief allocation system is built in Python, using Flask or FastAPI to build RESTful APIs. The backend processes data from the frontend, runs AI algorithms to allocate resources efficiently, and communicates with databases and other cloud services. AWS Lambda may be used for serverless functions that handle specific tasks such as AI model inference or data processing, ensuring scalability. The backend is deployed on Amazon EC2 or on AWS Fargate for containerized microservices. Amazon RDS or DynamoDB will store data such as disaster event details, relief resources, and allocation status, while Amazon S3 will store large datasets, such as images or logs.

Elastic Beanstalk

Notes: Elastic Beanstalk will handle the deployment, scaling, and monitoring of the backend services, simplifying management of the application infrastructure.

Features:

Automatic scaling based on load.

Easy deployment via Git-based integration.

REST and GraphQL APIs

Notes: The backend will expose both REST and GraphQL APIs to give the frontend flexibility. REST will be used for standard operations, while GraphQL can be used for complex data queries, such as fetching disaster data for specific regions.

Features:

RESTful endpoints for data CRUD.

GraphQL endpoints for fetching resource data and disaster predictions.

Django or Flask

Notes: Django or Flask will be used for the backend API, depending on the application's complexity and whether a full ORM (Django) or a lightweight framework (Flask) is needed.

Features:

User authentication (via JWTs or OAuth).

API endpoints for CRUD operations on disaster data, resources, and allocations.

Secure, RESTful API design.
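
The JWT option listed above comes down to checking a signature and an expiry on every request. The standard-library sketch below shows what an HS256 check involves; it assumes a shared secret and a hypothetical `role` claim, and is meant for illustration only. A production backend would normally use a vetted library such as PyJWT (`jwt.decode(token, key, algorithms=["HS256"])`) instead of hand-rolled verification.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional


def _b64url_decode(segment: str) -> bytes:
    # JWTs use unpadded base64url; restore the padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def sign_hs256(claims: Dict[str, Any], secret: bytes) -> str:
    """Create an HS256 JWT (used here only to produce test tokens)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    sig = hmac.new(secret, f"{header}.{payload}".encode("ascii"), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(sig)}"


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> Dict[str, Any]:
    """Return the claims if signature and expiry are valid, otherwise raise ValueError."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        # Never trust the header's algorithm choice (e.g. "none").
        raise ValueError("unexpected algorithm")
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"),
                        hashlib.sha256).digest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    current = now if now is not None else time.time()
    if "exp" in claims and current >= claims["exp"]:
        raise ValueError("token expired")
    return claims
```

An API endpoint would call `verify_hs256` on the bearer token and then check the returned role before allowing writes to allocation data.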

SES

Notes: SES will send email notifications to stakeholders when a new disaster event is detected or when resources are allocated.

Features:

Automated emails for disaster updates.

Alerts to managers about resource allocation.

SNS

Notes: SNS will handle SMS and mobile push notifications for real-time updates on resource allocation.

Features:

Mobile push notifications for real-time alerts.

SMS for on-the-go updates.
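
The SES and SNS notifications above map onto two boto3 calls: SES `send_email` and SNS `publish`. The sketch below only builds their keyword arguments, so it runs without AWS credentials; the sender address, subject wording, topic ARN, and the 160-character SMS cap are hypothetical choices, not part of the original design.

```python
from typing import Any, Dict, List


def build_ses_email(sender: str, recipients: List[str], disaster_id: str,
                    summary: str) -> Dict[str, Any]:
    """Keyword arguments for boto3 SES client.send_email."""
    return {
        "Source": sender,
        "Destination": {"ToAddresses": recipients},
        "Message": {
            "Subject": {"Data": f"[Disaster {disaster_id}] Resource allocation update"},
            "Body": {"Text": {"Data": summary}},
        },
    }


def build_sns_sms(phone_number: str, disaster_id: str, summary: str) -> Dict[str, Any]:
    """Keyword arguments for boto3 SNS client.publish sending a direct SMS."""
    text = f"Disaster {disaster_id}: {summary}"
    # Design choice for this sketch: cap at 160 characters (one GSM-7 segment).
    return {"PhoneNumber": phone_number, "Message": text[:160]}


def build_sns_topic_alert(topic_arn: str, disaster_id: str, summary: str) -> Dict[str, Any]:
    """Keyword arguments for client.publish fanning out to every topic subscriber
    (email, SMS, or mobile push endpoints)."""
    return {"TopicArn": topic_arn, "Subject": f"Disaster {disaster_id} update", "Message": summary}
```

For example, `boto3.client("sns").publish(**build_sns_sms("+15555550100", "D-42", "200 water units dispatched"))`; phone numbers must be in E.164 format.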

The infrastructure is fully cloud-based and hosted on AWS. Amazon VPC provides secure networking by isolating resources in private subnets. The application uses Amazon ECS for container orchestration, ensuring scalability and ease of deployment. Amazon S3 and Amazon CloudFront deliver static assets efficiently. For real-time monitoring, Amazon CloudWatch tracks performance, while AWS X-Ray helps with debugging and tracing API calls. The application follows AWS IAM best practices to ensure secure access control for users and services.

VPC

Notes: VPC will be used to create a private network for the application, ensuring that backend services and databases are isolated from the public internet.

Features:

Private Subnets: Place sensitive services like the backend API and database in private subnets to limit exposure.

Security Groups and NACLs: Define security groups and network access control lists (NACLs) to control inbound and outbound traffic.

VPN Access: Use VPNs for secure access to the VPC for administrative tasks.
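
Laying out public and private subnets starts with carving the VPC's address range into non-overlapping blocks. The sketch below uses Python's `ipaddress` module to assign one public and one private /24 per Availability Zone; the `10.0.0.0/16` range, the AZ names, and the one-public-plus-one-private layout are assumptions for illustration.

```python
import ipaddress
from typing import Dict, List


def plan_subnets(vpc_cidr: str, azs: List[str], new_prefix: int = 24) -> Dict[str, Dict[str, str]]:
    """Carve one public and one private subnet per Availability Zone out of the VPC CIDR."""
    vpc = ipaddress.ip_network(vpc_cidr)
    if new_prefix < vpc.prefixlen or 2 * len(azs) > 2 ** (new_prefix - vpc.prefixlen):
        raise ValueError("VPC CIDR is too small for the requested subnets")
    blocks = vpc.subnets(new_prefix=new_prefix)  # equal-sized, non-overlapping, in order
    plan: Dict[str, Dict[str, str]] = {}
    for az in azs:
        plan[az] = {
            # Public subnet: only internet-facing pieces such as the load balancer or NAT gateway.
            "public": str(next(blocks)),
            # Private subnet: backend API, RDS, ElastiCache; no inbound route from the internet.
            "private": str(next(blocks)),
        }
    return plan
```

With `plan_subnets("10.0.0.0/16", ["us-east-1a", "us-east-1b"])`, the first zone gets `10.0.0.0/24` (public) and `10.0.1.0/24` (private). Each /24 leaves 251 usable addresses because AWS reserves five addresses in every subnet.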

IAM

Notes: IAM will be used to manage user permissions and enforce the principle of least privilege across all services and resources.

Features:

Role-based Access Control (RBAC): Create fine-grained roles and permissions to restrict access to sensitive data and resources.

Multi-Factor Authentication (MFA): Enforce MFA for sensitive user roles to prevent unauthorized access.

IAM Policies: Define specific policies for users, groups, and roles to restrict access to specific services and resources.
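
Least privilege is easiest to see in an actual policy document. The sketch below builds a policy for a hypothetical backend role that may only read and update one DynamoDB table and read model files under a `models/` prefix in one S3 bucket, plus a small lint that flags wildcard grants. The table ARN, bucket name, and chosen actions are assumptions; `to_policy_document` produces the JSON string that IAM's `create_policy` expects as `PolicyDocument`.

```python
import json
from typing import Any, Dict, List


def backend_policy(table_arn: str, model_bucket: str) -> Dict[str, Any]:
    """Scoped permissions for a hypothetical backend role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadWriteAllocations",
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem", "dynamodb:UpdateItem", "dynamodb:Query"],
                "Resource": table_arn,
            },
            {
                "Sid": "ReadModelArtifacts",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{model_bucket}/models/*",
            },
        ],
    }


def find_wildcards(policy: Dict[str, Any]) -> List[str]:
    """Flag Allow statements granting '*' or service-wide 'svc:*' actions, or Resource '*'."""
    problems: List[str] = []
    for stmt in policy["Statement"]:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        actions = [actions] if isinstance(actions, str) else actions
        resources = stmt.get("Resource", [])
        resources = [resources] if isinstance(resources, str) else resources
        sid = stmt.get("Sid", "?")
        problems += [f"{sid}: broad action {a}" for a in actions if a == "*" or a.endswith(":*")]
        problems += [f"{sid}: resource *" for r in resources if r == "*"]
    return problems


def to_policy_document(policy: Dict[str, Any]) -> str:
    """Serialize for iam.create_policy(PolicyName=..., PolicyDocument=...)."""
    return json.dumps(policy, indent=2)
```

Running a check like `find_wildcards` in the build stage is one way to keep broad grants from slipping into production roles.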

WAF

Notes: WAF will be used to protect the web application from common web exploits such as SQL injection, cross-site scripting (XSS), and other OWASP Top 10 threats.

Features:

Protection Against Common Attacks: Define rules to block malicious traffic based on patterns or IP addresses.

Bot Protection: Mitigate automated attacks and bots that may attempt to overload the system or scrape data.

Custom Rules: Create custom rules to filter out malicious requests based on specific application needs.
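
One way to express the protections above is with AWS WAFv2 rules: managed rule groups for common exploits and SQL injection, plus a rate-based rule that blocks IPs sending too many requests. The sketch below builds the `Rules` list for a wafv2 `create_web_acl` call; the rule names, priorities, and the limit of 1000 requests are hypothetical, and the chosen managed groups are a suggestion rather than the project's confirmed configuration.

```python
from typing import Any, Dict, List


def _visibility(metric_name: str) -> Dict[str, Any]:
    # Sample matching requests and publish per-rule metrics to CloudWatch.
    return {
        "SampledRequestsEnabled": True,
        "CloudWatchMetricsEnabled": True,
        "MetricName": metric_name,
    }


def _managed_group(name: str, group: str, priority: int) -> Dict[str, Any]:
    return {
        "Name": name,
        "Priority": priority,
        "Statement": {"ManagedRuleGroupStatement": {"VendorName": "AWS", "Name": group}},
        # Managed groups take OverrideAction; "None" keeps the group's own actions.
        "OverrideAction": {"None": {}},
        "VisibilityConfig": _visibility(name),
    }


def build_waf_rules(rate_limit: int = 1000) -> List[Dict[str, Any]]:
    """Rules list for boto3 wafv2 create_web_acl(Rules=...)."""
    return [
        _managed_group("AWSCommonRules", "AWSManagedRulesCommonRuleSet", 0),
        _managed_group("AWSSQLiRules", "AWSManagedRulesSQLiRuleSet", 1),
        {
            # Block any single IP exceeding the limit within WAF's evaluation
            # window (5 minutes by default).
            "Name": "RateLimitPerIP",
            "Priority": 2,
            "Statement": {"RateBasedStatement": {"Limit": rate_limit, "AggregateKeyType": "IP"}},
            "Action": {"Block": {}},
            "VisibilityConfig": _visibility("RateLimitPerIP"),
        },
    ]
```

The rate-based rule also blunts simple bots that try to overload the API; WAF's separate Bot Control managed group offers finer-grained bot detection if needed.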

CloudWatch

Notes: CloudWatch will be used to monitor application performance, collect logs, and track the health of resources.

Features:

Logs: Collect logs from EC2, Lambda, and other services for real-time monitoring and troubleshooting.

Metrics: Track system metrics like CPU utilization, memory usage, disk I/O, and network traffic for EC2 instances and containers (memory metrics for EC2 require the CloudWatch agent).

Alarms: Set alarms that notify the team when metrics exceed thresholds, for example under high server load or when a resource fails.

Custom Metrics: Define custom application-specific metrics (e.g., resource allocation status, AI model performance).

CloudWatch Dashboards: Visualize application performance in real-time with dashboards for easy monitoring.
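
Custom metrics and alarms come down to two CloudWatch calls, `put_metric_data` and `put_metric_alarm`. The sketch below builds their keyword arguments for two hypothetical application metrics (pending allocations and model inference latency) and an alarm that notifies an SNS topic when pending allocations stay high. The `DisasterRelief` namespace, metric names, `Region` dimension, and thresholds are assumptions.

```python
from typing import Any, Dict

NAMESPACE = "DisasterRelief"  # hypothetical custom namespace


def build_allocation_metrics(region: str, pending: int, latency_ms: float) -> Dict[str, Any]:
    """Keyword arguments for boto3 CloudWatch client.put_metric_data."""
    dims = [{"Name": "Region", "Value": region}]
    return {
        "Namespace": NAMESPACE,
        "MetricData": [
            {"MetricName": "PendingAllocations", "Dimensions": dims,
             "Value": pending, "Unit": "Count"},
            {"MetricName": "ModelInferenceLatency", "Dimensions": dims,
             "Value": latency_ms, "Unit": "Milliseconds"},
        ],
    }


def build_pending_alarm(region: str, threshold: int, sns_topic_arn: str) -> Dict[str, Any]:
    """Keyword arguments for client.put_metric_alarm: fire when pending allocations
    exceed `threshold` for three consecutive one-minute periods."""
    return {
        "AlarmName": f"PendingAllocationsHigh-{region}",
        "Namespace": NAMESPACE,
        "MetricName": "PendingAllocations",
        "Dimensions": [{"Name": "Region", "Value": region}],
        "Statistic": "Maximum",
        "Period": 60,
        "EvaluationPeriods": 3,
        "Threshold": float(threshold),
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # e.g. the SNS topic used for team alerts
        "TreatMissingData": "notBreaching",
    }
```

The alarm's dimensions must match the ones used when publishing, otherwise CloudWatch treats them as a different metric.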

Route 53

Notes: Route 53 will manage the DNS for the application, ensuring fast and reliable access to resources.

Features:

Custom domain management.

DNS failover for high availability.
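
DNS failover in Route 53 uses a pair of records with the same name: a PRIMARY record tied to a health check and a SECONDARY record that answers when the primary is unhealthy. The sketch below builds the `ChangeBatch` for `change_resource_record_sets`; the domain, the documentation-range IP addresses, the 60-second TTL, and the health check ID are placeholders, and a setup fronted by a load balancer would typically use alias records instead of plain A records.

```python
from typing import Any, Dict


def failover_change_batch(domain: str, primary_ip: str, secondary_ip: str,
                          primary_health_check_id: str) -> Dict[str, Any]:
    """ChangeBatch for boto3 route53 change_resource_record_sets: traffic goes to
    the primary while its health check passes, otherwise to the secondary."""

    def record(role: str, ip: str) -> Dict[str, Any]:
        rrset: Dict[str, Any] = {
            "Name": domain,
            "Type": "A",
            "SetIdentifier": f"{role.lower()}-endpoint",
            "Failover": role,
            "TTL": 60,  # short TTL so clients pick up a failover quickly
            "ResourceRecords": [{"Value": ip}],
        }
        if role == "PRIMARY":
            rrset["HealthCheckId"] = primary_health_check_id
        return {"Action": "UPSERT", "ResourceRecordSet": rrset}

    return {"Changes": [record("PRIMARY", primary_ip), record("SECONDARY", secondary_ip)]}
```

Usage would be `boto3.client("route53").change_resource_record_sets(HostedZoneId=..., ChangeBatch=failover_change_batch(...))` with an existing health check on the primary endpoint.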

API Gateway

EFS for Shared File Storage

Notes: EFS will be used for shared storage across multiple instances in the backend.

Features:

Scalable file system for multi-instance access.

Suitable for storing logs and temporary data.

S3 for Static Assets

Notes: S3 will store static files, including frontend assets, disaster images, and AI model files.

Features:

Highly durable object storage.

Versioning for AI models to keep track of changes.

ECS

Notes: ECS will run the containerized backend services, providing efficient scaling and management of their Docker containers.

Features:

Containerized deployments for scalability.

Integration with AWS Fargate for serverless containers.

Lambda

Notes: AWS Lambda will be used for event-driven operations such as sending notifications or triggering resource allocation models when new disaster data is available.

Features:

Serverless architecture for cost-effective scaling.

Integration with Amazon SNS and SES for notifications.
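
As an example of the event-driven flow, the sketch below assumes new disaster data arrives as files in an S3 bucket whose "object created" notifications invoke a Lambda function. The handler reads the bucket and key from each S3 event record and passes them to `run_allocation`, a hypothetical placeholder for loading the file and calling the allocation model.

```python
import json
import urllib.parse
from typing import Any, Dict, List


def run_allocation(bucket: str, key: str) -> Dict[str, Any]:
    """Hypothetical placeholder: load the new disaster file and invoke the allocation model."""
    return {"source": f"s3://{bucket}/{key}", "status": "queued"}


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Lambda entry point for S3 'object created' notifications carrying new disaster data."""
    results: List[Dict[str, Any]] = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 event notifications are URL-encoded (spaces arrive as '+').
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        results.append(run_allocation(bucket, key))
    return {"statusCode": 200, "body": json.dumps({"processed": len(results), "results": results})}
```

Keeping the handler thin and delegating to a separate function makes the allocation logic easy to test without deploying to Lambda.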

EC2

Notes: EC2 instances will run the backend API services, which can be scaled up or down based on traffic.

Features:

Scalable instances for backend logic.

Use of spot instances to reduce costs for non-critical operations.

AI Model

Notes: The AI model will process historical disaster data (e.g., from public APIs, government reports) and apply machine learning algorithms to predict which areas need more resources and what types of resources are most needed.

Features:

Predict disaster impact based on historical trends.

Generate real-time allocation plans based on current disaster data.
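
Before training deep models, a simple baseline helps check whether historical data carries a usable signal. The sketch below is a swapped-in technique, not the project's model: an ordinary least-squares line fitted to hypothetical historical pairs of disaster severity and resources used, then used to estimate needs for a new event. The severity scale and unit counts are made up for illustration.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx            # slope: extra units needed per severity level
    a = mean_y - b * mean_x  # intercept
    return a, b


def predict(model: Tuple[float, float], x: float) -> float:
    a, b = model
    return a + b * x
```

For hypothetical history `fit_line([1, 2, 3, 4], [12, 19, 31, 38])`, the fit is a = 2.5 and b = 9, so a severity-5 event is estimated to need 47.5 units. A deep model would need to beat this baseline to justify its cost.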

SageMaker

Notes: Amazon SageMaker will be used to train, tune, and deploy the AI models. SageMaker provides a fully managed environment for building and deploying machine learning models.

Features:

Model training and hyperparameter tuning.

Integration with backend APIs for real-time predictions.

Deep Learning Models

Notes: Deep learning models built with TensorFlow or PyTorch will be used to predict and allocate disaster relief resources based on disaster severity and available resources.

Features:

Predictive analytics based on historical disaster data.

Prioritize resource allocation based on urgency, severity, and population density.

Continuous training using new data (new disasters).
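
The prioritization step can be illustrated with a weighted score over urgency, severity, and population density, followed by a proportional split of a fixed stock. This is a minimal sketch: the 0 to 1 normalized inputs and the weights in `WEIGHTS` are hypothetical stand-ins for what the trained models would supply. Largest-remainder rounding is used so integer allocations always sum exactly to the available units.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Region:
    name: str
    urgency: float             # 0..1, hypothetical normalized urgency
    severity: float            # 0..1, normalized disaster severity
    population_density: float  # 0..1, normalized people per km^2


# Hypothetical weights; in the described system these would come from the model or policy.
WEIGHTS = {"urgency": 0.4, "severity": 0.4, "population_density": 0.2}


def priority(r: Region) -> float:
    return (WEIGHTS["urgency"] * r.urgency
            + WEIGHTS["severity"] * r.severity
            + WEIGHTS["population_density"] * r.population_density)


def allocate(total_units: int, regions: List[Region]) -> Dict[str, int]:
    """Split integer units in proportion to priority, using largest-remainder
    rounding so the allocations always sum to total_units."""
    scores = {r.name: priority(r) for r in regions}
    total_score = sum(scores.values())
    if total_score == 0:
        raise ValueError("all priorities are zero")
    quotas = {name: total_units * s / total_score for name, s in scores.items()}
    alloc = {name: int(q) for name, q in quotas.items()}  # floor of each share
    leftover = total_units - sum(alloc.values())
    # Give the remaining units to the regions with the largest fractional parts.
    for name in sorted(quotas, key=lambda n: quotas[n] - alloc[n], reverse=True)[:leftover]:
        alloc[name] += 1
    return alloc
```

For three regions with priorities 1.0, 0.5, and 0.1, splitting 100 units gives 63, 31, and 6: the floors sum to 99 and the spare unit goes to the region with the largest remainder.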

The frontend is a React.js application built to provide an intuitive and responsive user interface. It communicates with the backend through REST APIs or GraphQL for data fetching. The frontend is designed with a clean, user-friendly dashboard that visualizes real-time disaster data, resource allocation statuses, and predictions from the AI model. It may be deployed using AWS Amplify for fast deployment and hosting, with Amazon CloudFront serving the static assets through a Content Delivery Network (CDN) for low latency.

AWS Amplify

Notes: AWS Amplify will be used to host the React.js frontend. Amplify provides an easy way to deploy, manage, and scale frontend applications on AWS.

Features:

Automatic CI/CD pipeline for frontend updates.

Integration with backend APIs.

Material-UI

Notes: Material-UI will be used for pre-designed UI components that follow Google's Material Design guidelines, making the app more user-friendly and visually consistent.

Features:

Navigation bars, buttons, cards, etc.

Theming and responsiveness for different screen sizes.

React.js

Notes: The frontend will be built with React.js to provide a responsive, dynamic user interface that is fast, maintainable, and scalable.

Features:

User authentication and dashboard.

Forms for data input (disaster severity, resources, etc.).

Interactive maps for resource allocation visualization.