by Rhys w, 6 years ago

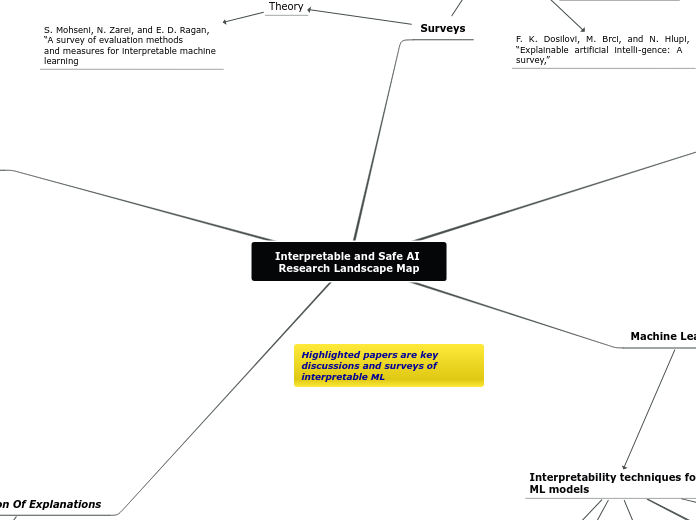

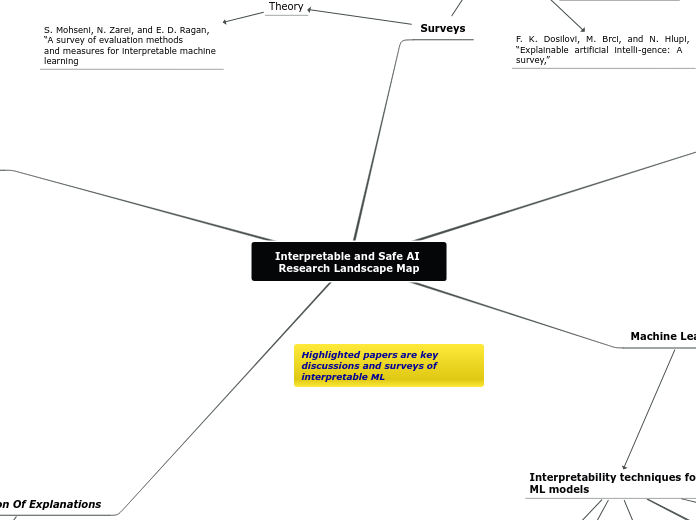

Interpretable and Safe AI Research Landscape Map

N. Tomašev, X. Glorot, and S. Mohamed, “A clinically applicable approach to continuous prediction of future acute kidney injury,” 2019.

O. Ronneberger, P. Fischer, and T. Brox, “U-Net: Convolutional networks for biomedical image segmentation,” 2015.

C. Otte, “Safe and interpretable machine learning: A methodological review,”

M. T. Ribeiro, S. Singh, and C. Guestrin, “Why should I trust you? Explaining the predictions of any classifier,” https://arxiv.org/abs/1602.04938, 2016.

S. Wachter, B. D. Mittelstadt, and C. Russell, “Counterfactual explanations without opening the black box: Automated decisions and the GDPR,” CoRR, vol. abs/1711.00399, 2017.

I. Higgins, L. Matthey, A. Pal, C. Burgess, X. Glorot, M. Botvinick, S. Mohamed, and A. Lerchner, “Learning basic visual concepts with a constrained variational framework,” ICLR 2017, 2017.

H. Lakkaraju, E. Kamar, R. Caruana, and J. Leskovec, “Interpretable & explorable approximations of black box models,” arXiv:1707.01154v1 [cs.AI] 4 Jul 2017, 2017.

P. W. Koh and P. Liang, “Understanding black-box predictions via influence functions,” ICML’17: Proceedings of the 34th International Conference on Machine Learning, Volume 70, pp. 1885-1894, 2017.

S. M. Lundberg and S.-I. Lee, “A unified approach to interpreting model predictions,” arXiv:1705.07874v2 [cs.AI] 25 Nov 2017, 2017.

Z. C. Lipton, “The mythos of model interpretability,” arXiv:1606.03490v3 [cs.LG], 2017.

F. K. Došilović, M. Brčić, and N. Hlupić, “Explainable artificial intelligence: A survey,” MIPRO 2018, May 21-25, 2018, Opatija, Croatia, 2018.

K. Simonyan, A. Vedaldi, and A. Zisserman, “Deep inside convolutional networks: Visualising image classification models and saliency maps,” https://arxiv.org/abs/1312.6034, 2013.

Q.-S. Zhang and S.-C. Zhu, “Visual interpretability for deep learning: A survey,” Frontiers of Information Technology & Electronic Engineering, 2018.

S. Sarkar, “Accuracy and interpretability trade-offs in machine learning applied to safer gambling,” in CEUR Workshop Proceedings

L. H. Gilpin, D. Bau, B. Z. Yuan, A. Bajwa, M. Specter, and L. Kagal, “Explaining explanations: An overview of interpretability of machine learning,” arXiv:1806.00069v3 [cs.AI] 3 Feb 2019, 2019.

A. Avati, K. Jung, S. Harman, L. Downing, A. Ng, and N. H. Shah, “Improving palliative care with deep learning,” arXiv:1711.06402v1 [cs.CY] 17 Nov 2017, 2017.

C. Olah, L. Schubert, and A. Mordvintsev, “Feature visualization: How neural networks build up their understanding of images,”

T. Zahavy, N. B. Zrihem, and S. Mannor, “Graying the black box: Understanding DQNs,”

L. A. Hendricks, Z. Akata, M. Rohrbach, J. Donahue, B. Schiele, and T. Darrell, “Generating visual explanations,” arXiv:1603.08507v1 [cs.CV] 28 Mar 2016, 2016.