by Anand Puntambekar, 6 years ago

410

Structured Data Learning

por Anand Puntambekar hace 6 años

410

Ver más

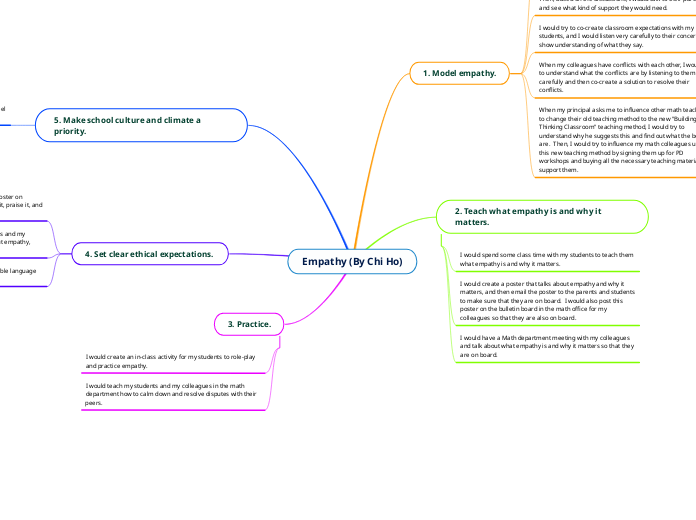

por Chi Kin Ho

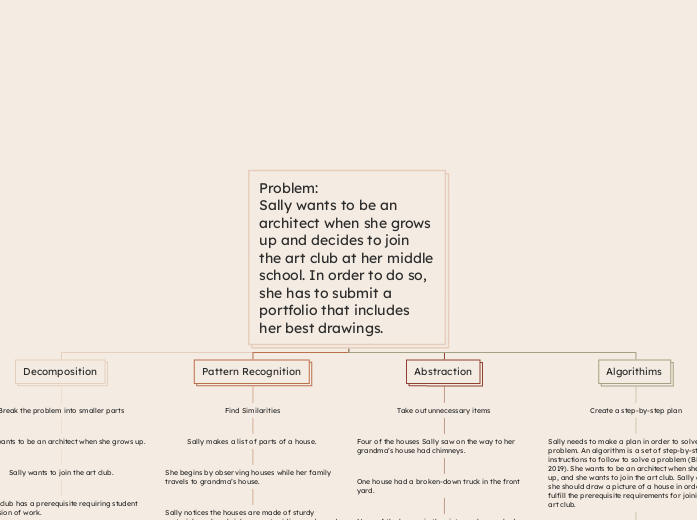

por Sandra Stephens

por Onur Sürhan

por Fred Watke

a = T([[1.,2],[3,4]])
b = T([[2.,2],[10,10]])
a,b

(
  1   2
  3   4
 [torch.FloatTensor of size 2x2],
  2   2
 10  10
 [torch.FloatTensor of size 2x2]
)

(a*b).sum(1)

6

70

Object

model=DotProduct()
model(a,b)

Class

class DotProduct(nn.Module):
    def forward(self, u, m): return (u*m).sum(1)
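The row-wise dot product that DotProduct.forward computes can be checked without PyTorch. The following is a minimal sketch in plain Python; the helper name rowwise_dot is hypothetical and only mirrors (u*m).sum(1) on nested lists.

```python
def rowwise_dot(a, b):
    """Multiply two equally shaped 2-D lists element by element and sum each row."""
    return [
        sum(x * y for x, y in zip(row_a, row_b))
        for row_a, row_b in zip(a, b)
    ]


# Same values as the tensors a and b in the notes.
a = [[1.0, 2.0], [3.0, 4.0]]
b = [[2.0, 2.0], [10.0, 10.0]]

result = rowwise_dot(a, b)
print(result)  # [6.0, 70.0]
```

Each output entry pairs one row of a (a user's factors) with the matching row of b (a movie's factors), which is why the sum runs over axis 1.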

preds = learn.predict()

y=learn.data.val_y
sns.jointplot(preds, y, kind='hex', stat_func=None);

learn = cf.get_learner(n_factors, val_idxs, 64, opt_fn=optim.Adam)
learn.fit(1e-2, 2, wds=wd, cycle_len=1, cycle_mult=2)

epoch trn_loss val_loss

0 0.746956 0.772499

1 0.711768 0.750826

2 0.590427 0.735018

Since the output is Mean Squared Error, you can take RMSE by:

math.sqrt(0.765)

0.8746427842267951
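As a small worked example of the conversion above, the sketch below wraps the square root in a helper (the name rmse_from_mse is hypothetical) and applies it to the same 0.765 value used in the notes.

```python
import math


def rmse_from_mse(mse):
    """Convert a mean squared error into a root mean squared error."""
    if mse < 0:
        raise ValueError("mean squared error cannot be negative")
    return math.sqrt(mse)


rmse = rmse_from_mse(0.765)
print(rmse)
```

RMSE is in the same units as the ratings themselves, which makes it easier to interpret than the raw loss.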

# Create a model data object from the CSV file
cf = CollabFilterDataset.from_csv(path, 'ratings.csv', 'userId', 'movieId', 'rating')

# n_factors is how big an embedding matrix we want
n_factors = 50

# wd is the weight decay for L2 regularization
wd=2e-4

# Create a validation set by picking a random set of IDs
val_idxs = get_cv_idxs(len(ratings))

ratings = pd.read_csv(path+'ratings.csv')
ratings.head()

import os

# Change working directory
os.chdir('/home/paperspace/fastai/courses/SelfCodes/Colaborative_Fiter_IMDB/data')

%pwd
path='/home/paperspace/fastai/courses/SelfCodes/Colaborative_Fiter_IMDB/data/ml-latest-smal

# Import relevant libraries
%reload_ext autoreload
%autoreload 2
%matplotlib inline
from fastai.learner import *
from fastai.column_data import *

# Modules to import for structured data analysis
from fastai.structured import *
from fastai.column_data import *
# These options determine the way floating point numbers, arrays and other NumPy objects are displayed.
np.set_printoptions(threshold=50, edgeitems=20)

We shall use a pre-trained network which at least knows how to read English. We will train a model that predicts the next word of a sentence (i.e. a language model) and, just like in computer vision, stick some new layers on the end and ask it to predict whether something is positive or negative.

We had pre-trained a language model and now we want to fine-tune it to do sentiment classification.

Now you can go ahead and call get_model that gets us our learner. Then we can load into it the pre-trained language model (load_encoder).

Predict

accuracy(*m3.predict_with_targs())

Load Cycle

m3.load_cycle('imdb2', 4)

Code Part 3

m3.fit(lrs, 7, metrics=[accuracy], cycle_len=2,
       cycle_save_name='imdb2')

Code Part 2

We make sure all except the last layer is frozen. Then we train a bit, unfreeze it, train it a bit. The nice thing is once you have got a pre-trained language model, it actually trains really fast.

m3.freeze_to(-1)
m3.fit(lrs/2, 1, metrics=[accuracy])
m3.unfreeze()
m3.fit(lrs, 1, metrics=[accuracy], cycle_len=1)

Code Part 1

# increase the max gradient for clipping
m3.clip=25.

# use differential learning rates

lrs=np.array([1e-4,1e-3,1e-2])

m3.load_encoder(f'adam3_20_enc')

m3 = md2.get_model(opt_fn, 1500, bptt, emb_sz=em_sz, n_hid=nh,
                   n_layers=nl, dropout=0.1, dropouti=0.4,
                   wdrop=0.5, dropoute=0.05, dropouth=0.3)

m3.reg_fn = partial(seq2seq_reg, alpha=2, beta=1)

fastai can create a ModelData object directly from torchtext splits.

md2 = TextData.from_splits(PATH, splits, bs)

splits is a torchtext method that creates train, test, and validation sets. The IMDB dataset is built into torchtext, so we can take advantage of that. Take a look at lang_model-arxiv.ipynb to see how to define your own fastai/torchtext datasets.

code

splits = torchtext.datasets.IMDB.splits(TEXT, IMDB_LABEL, 'data/')

t = splits[0].examples[0]

t.label, ' '.join(t.text[:16])

('pos', 'ashanti is a very 70s sort of film ( 1979 , to be precise ) .')

sequential=False tells torchtext that a text field should not be tokenized (in this case, we just want to store the 'positive' or 'negative' single label).

IMDB_LABEL = data.Field(sequential=False)

To use a pre-trained model, we will need the saved vocab from the language model, since we need to ensure the same words map to the same IDs.

TEXT = pickle.load(open(f'{PATH}models/TEXT.pkl','rb'))

Let's see if our model can generate more text all by itself

print(ss,"\n")

for i in range(50):
    n=res[-1].topk(2)[1]
    n = n[1] if n.data[0]==0 else n[0]
    print(TEXT.vocab.itos[n.data[0]], end=' ')
    res,*_ = m(n[0].unsqueeze(0))
print('...')
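The selection rule inside the generation loop (take the top two candidates and fall back to the second one when the best is index 0, which is '<unk>' in this vocab) can be sketched on a plain list of scores. The function name pick_next and the unk_index parameter are hypothetical and only illustrate the rule.

```python
def pick_next(scores, unk_index=0):
    """Return the index of the best-scoring token, skipping the unknown token."""
    # Indices of the two highest scores, best first (the role of topk(2)).
    top2 = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:2]
    return top2[1] if top2[0] == unk_index else top2[0]


# The best score belongs to index 0 (<unk>), so the runner-up is chosen.
print(pick_next([0.9, 0.1, 0.5]))
# The best score is a real token, so it is chosen directly.
print(pick_next([0.1, 0.9, 0.5]))
```

Skipping '<unk>' keeps the generated text readable, at the cost of never emitting a rare word.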

So, it wasn't quite was I was expecting, but I really liked it anyway! The best

part of the movie . the movie is a bit of a mess , but it 's not a bad movie . it 's a very good movie , and i would recommend it to anyone who likes a good laugh . <eos> i have seen this movie several times ...

nexts = torch.topk(res[-1], 10)[1]
[TEXT.vocab.itos[o] for o in to_np(nexts)]

m=learner.model

# Set batch size to 1

m[0].bs=1

# Turn off dropout

m.eval()

# Reset hidden state

m.reset()

# Get predictions from model

res,*_ = m(t)

# Put the batch size back to what it was

m[0].bs=bs

ss=""". So, it wasn't quite was I was expecting, but I really liked it anyway! The best"""
s = [TEXT.preprocess(ss)]
t=TEXT.numericalize(s)
' '.join(s[0])

Testing language model: create a short bit of text to ‘prime’ a set of predictions.

Apply the torchtext field to numericalize the text so we can feed it to our language model

Learning Iterations

Iteration 2

learner.fit(3e-3, 1, wds=1e-6, cycle_len=20,
            cycle_save_name='adam3_20')

learner.load_cycle('adam3_20',0)

Iteration 1

learner.fit(3e-3, 4, wds=1e-6, cycle_len=10,
            cycle_save_name='adam3_10')

learner.save_encoder('adam3_10_enc')

In the sentiment analysis section, we'll just need half of the language model (the encoder), so we save that part.

learner.save_encoder('adam3_20_enc')

learner.load_encoder('adam3_20_enc')

Save Learner

learner.save_encoder('adam1_enc')

Fit learner

learner.fit(3e-3, 4, wds=1e-6, cycle_len=1, cycle_mult=2)

Create learner

learner = md.get_model(opt_fn, em_sz, nh, nl, dropouti=0.05, dropout=0.05, wdrop=0.1, dropoute=0.02, dropouth=0.05)
# to avoid overfitting
learner.reg_fn = partial(seq2seq_reg, alpha=2, beta=1)
learner.clip=0.3

learner.clip=0.3 : when you look at your gradients and multiply them by the learning rate to decide how much to update your weights by, this will not allow them to be more than 0.3. This is a cool little trick to prevent us from taking too big a step.
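To make the idea of capping a step concrete, here is a minimal sketch of one common form of gradient clipping, norm clipping, in plain Python. Whether this matches exactly what learner.clip does internally is an assumption; the function name clip_by_norm is hypothetical.

```python
import math


def clip_by_norm(grads, max_norm):
    """Rescale a gradient vector so its L2 norm does not exceed max_norm."""
    total = math.sqrt(sum(g * g for g in grads))
    if total <= max_norm or total == 0.0:
        return list(grads)  # already small enough, leave unchanged
    scale = max_norm / total
    return [g * scale for g in grads]


clipped = clip_by_norm([3.0, 4.0], 0.3)  # norm 5.0 is scaled down to 0.3
small = clip_by_norm([0.1, 0.2], 0.3)    # norm is below 0.3, left as is
print(clipped, small)
```

Rescaling the whole vector keeps the direction of the update and only limits its size.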

Fast.ai uses a variant of the state-of-the-art AWD LSTM language model developed by Stephen Merity. A key feature of this model is that it provides excellent regularization through dropout. There is no simple way known (yet!) to find the best values of the dropout parameters below; you just have to experiment.

However, the other parameters (alpha, beta, and clip) shouldn't generally need tuning.

opt_fn = partial(optim.Adam, betas=(0.7, 0.99))

Researchers have found that large amounts of momentum (which we’ll learn about later) don’t work well with these kinds of RNN models, so we create a version of the Adam optimizer with less momentum than its default of 0.9. Any time you are doing NLP, you should probably include the opt_fn line above.

em_sz = 200  # size of each embedding vector
nh = 500     # number of hidden activations per layer
nl = 3       # number of layers

The embedding size is 200, which is much bigger than our previous embedding vectors. That is not surprising, because a word has a lot more nuance to it than the concept of Sunday. Generally, an embedding size for a word will be somewhere between 50 and 600.

View Batch

next(iter(md.trn_dl))

A neat trick torchtext does is to randomly change the bptt number every time, so in each epoch the model gets slightly different bits of text, similar to shuffling images in computer vision. We cannot randomly shuffle the words because they need to be in the right order, so instead we randomly move their breakpoints a little bit.
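The random breakpoints can be sketched as sampling a sequence length around bptt for every batch. The scheme below is modelled on the one used in AWD LSTM training code; the function name sample_seq_len and all constants (the 0.95 probability, the standard deviation of 5, the minimum length of 5) are assumptions for illustration and may differ from what the library actually uses.

```python
import random


def sample_seq_len(bptt, rng, min_len=5, std=5.0, p_full=0.95):
    """Draw a sequence length near bptt, occasionally near bptt / 2."""
    base = bptt if rng.random() < p_full else bptt / 2
    return max(min_len, int(rng.gauss(base, std)))


rng = random.Random(0)  # fixed seed so the sketch is repeatable
lengths = [sample_seq_len(70, rng) for _ in range(1000)]
print(min(lengths), max(lengths), sum(lengths) / len(lengths))
```

Because the lengths vary, batch boundaries fall at different places in the text from one epoch to the next.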

Now that we have a model data object that can feed us batches, we can create a model. First, we are going to create an embedding matrix.

Our LanguageModelData object will create batches with 64 columns (that's our batch size), and varying sequence lengths of around 80 tokens (that's our bptt parameter, backprop through time).

Each batch also contains the exact same data as labels, but one word later in the text, since we're always trying to predict the next word. The labels are flattened into a 1d array.
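The batch layout described here can be sketched on a toy token stream. This is a minimal illustration in plain Python, not the LanguageModelData implementation; batchify and get_batch are hypothetical names.

```python
def batchify(tokens, bs):
    """Split one long token stream into bs columns of equal length."""
    n_rows = len(tokens) // bs  # leftover tokens at the end are dropped
    chunks = [tokens[i * n_rows:(i + 1) * n_rows] for i in range(bs)]
    # Row r holds the r-th token of every chunk, so each column reads in order.
    return [[chunk[r] for chunk in chunks] for r in range(n_rows)]


def get_batch(data, start, bptt):
    """Return inputs of shape (seq_len, bs) and flattened next-token labels."""
    seq_len = min(bptt, len(data) - 1 - start)
    x = data[start:start + seq_len]
    y = [tok for row in data[start + 1:start + 1 + seq_len] for tok in row]
    return x, y


data = batchify(list(range(20)), bs=2)
x, y = get_batch(data, start=0, bptt=3)
print(x)  # [[0, 10], [1, 11], [2, 12]]
print(y)  # [1, 11, 2, 12, 3, 13]
```

Every label is the token that follows the corresponding input in the same column, which is the next-word target.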

Integer Format

# torchtext will handle turning words into ints
TEXT.numericalize([md.trn_ds[0].text[:12]])

Text Format

md.trn_ds[0].text[:12]

['at',

'first',

',',

'i',

'thought',

'this',

'was',

'a',

'sequel',

'to',

'entre',

'nous']

# 'stoi': 'string to int'
TEXT.vocab.stoi['the']

# 'itos': 'int-to-string'
TEXT.vocab.itos[:12]

['<unk>', '<pad>', 'the', ',', '.', 'and', 'a', 'of', 'to', 'is', 'in', 'it']

Save Model

# Save the model for later

pickle.dump(TEXT, open(f'{PATH}models/TEXT.pkl','wb'))

View Model info

# Here are the:
# number of batches
print(len(md.trn_dl))
# number of unique tokens in the vocab
print(md.nt)
# number of items in the training set (the whole corpus is one concatenated text)
print(len(md.trn_ds))
# number of tokens in that text
print(len(md.trn_ds[0].text))

4583

37392

1

20540756

Step 2

md = LanguageModelData.from_text_files(PATH, TEXT, **FILES, bs=bs, bptt=bptt, min_freq=10)

PATH : as usual, where the data is, where to save models, etc.

TEXT : torchtext’s Field definition (tokenized text)

**FILES : dictionary of all the files we have: training, validation, and test (to keep things simple, we do not have separate validation and test sets, so both point to the validation folder)

bs : batch size

bptt : Back Prop Through Time. It means how long a sequence we will stick on the GPU at once

min_freq=10 : In a moment, we are going to replace words with integers (a unique index for every word). Any word that occurs fewer than 10 times is simply called unknown.

After building our ModelData object, it automatically fills the TEXT object with a very important attribute: TEXT.vocab. This is a vocabulary, which stores which unique words (or tokens) have been seen in the text, and how each word will be mapped to a unique integer id.
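The vocabulary with a min_freq cutoff can be sketched in a few lines of plain Python. This is an illustration of the idea rather than torchtext's implementation; build_vocab and numericalize are hypothetical helpers, and the ordering rule (most frequent first, ties alphabetical) is an assumption.

```python
from collections import Counter


def build_vocab(tokens, min_freq=10, specials=("<unk>", "<pad>")):
    """Return (itos, stoi), keeping only tokens seen at least min_freq times."""
    counts = Counter(tokens)
    kept = [word for word, count in counts.items() if count >= min_freq]
    kept.sort(key=lambda word: (-counts[word], word))
    itos = list(specials) + kept
    stoi = {word: index for index, word in enumerate(itos)}
    return itos, stoi


def numericalize(tokens, stoi):
    """Map tokens to ids; anything outside the vocab becomes <unk>."""
    return [stoi.get(token, stoi["<unk>"]) for token in tokens]


itos, stoi = build_vocab("the cat the dog the cat".split(), min_freq=2)
ids = numericalize(["the", "dog", "cat"], stoi)
print(itos)  # ['<unk>', '<pad>', 'the', 'cat']
print(ids)   # [2, 0, 3]
```

Here 'dog' appears only once, so it falls below the cutoff and maps to the id of '<unk>'.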

Step 1

FILES = dict(train=TRN_PATH, validation=VAL_PATH, test=VAL_PATH)

# Now we create the usual Fast.ai model data object:
# bptt - how many words are processed at a time in each row of the mini-batch;
# making this higher also increases the model's ability to handle long sentences
bs=64; bptt=70

Before we can do anything with text, we have to turn it into a list of tokens.

A token is basically a word. Eventually we will turn the tokens into a list of numbers, but the first step is to turn the text into a list of words; this is called “tokenization” in NLP.

A good tokenizer will do a good job of recognizing pieces in your sentence.

Each piece of punctuation will be separated, and each part of a multi-part word will be separated as appropriate.
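To show what separating punctuation means in practice, here is a deliberately simple regex tokenizer. It is not the spaCy tokenizer used in these notes: for instance, spaCy splits "wasn't" into "was" and "n't", which this sketch does not reproduce. The name simple_tokenize is hypothetical.

```python
import re

# A run of word characters, or any single character that is neither word nor space.
TOKEN_RE = re.compile(r"\w+|[^\w\s]")


def simple_tokenize(text):
    """Lowercase the text and split it into word and punctuation tokens."""
    return TOKEN_RE.findall(text.lower())


tokens = simple_tokenize("I really liked it anyway!")
print(tokens)  # ['i', 'really', 'liked', 'it', 'anyway', '!']
```

The exclamation mark becomes its own token instead of staying attached to "anyway".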

spaCy does a lot of NLP stuff, and it has the best tokenizer. So the Fast.ai library is designed to work well with the spaCy tokenizer as well as with torchtext.

# Text preprocessing
TEXT = data.Field(lower=True, tokenize="spacy")

' '.join([sent.string.strip() for sent in spacy_tok(review[0])])

Example

"I have to say when a name like Zombiegeddon and an atom bomb on the front cover I was expecting a flat out chop - socky fung - ku , but what I got instead was a comedy . So , it was n't quite was I was expecting , but I really liked it anyway ! The best scene ever was the main cop dude pulling those kids over and pulling a Bad Lieutenant on them ! !

import spacy

spacy_tok = spacy.load('en')

Validation Data Set ( Concatenated)

!find {VAL} -name '*.txt' | xargs cat | wc -w

#5686719

Training Data Set ( Concatenated)

!find {TRN} -name '*.txt' | xargs cat | wc -w

#17486581

review = !cat {TRN}{trn_files[6]}

review[0]

"I have to say when a name like Zombiegeddon and an atom bomb on the front cover I was expecting a flat out chop-socky fung-ku, but what I got instead was a comedy. So, it wasn't quite was I was expecting, but I really liked it anyway! The best scene ever was the main cop dude pulling those kids over and pulling a Bad Lieutenant on them!! I was laughing my ass off. I mean, the cops were just so bad! And when I say bad, I mean The Shield Vic Macky bad. But unlike that show I was laughing when they shot people and smoked dope.<br /><br />Felissa

trn_files = !ls {TRN}

trn_files[:10]

['0_0.txt',

'0_3.txt',

'0_9.txt',

'10000_0.txt',

'10000_4.txt',

'10000_8.txt',

'1000_0.txt',

'10001_0.txt',

'10001_10.txt',

'10001_4.txt']

#import os, sys, tarfile

#import tarfile

#tar = tarfile.open("aclImdb.tgz")

#tar.extractall()

#tar.close()

We do not have separate test and validation sets in this case. Just like in vision, the training directory has a bunch of files in it:

PATH = '/home/paperspace/fastai/courses/SelfCodes/Text_Class/aclImdb/'

TRN_PATH = 'train/all/'

VAL_PATH = 'test/all/'

TRN = f'{PATH}{TRN_PATH}'

VAL = f'{PATH}{VAL_PATH}'

%ls {PATH}

imdbEr.txt imdb.vocab models/ README test/ tmp/ train/

# Auto-reload modules in the Jupyter notebook (so that changes in *.py files don't require reloading)
%reload_ext autoreload
%autoreload 2
%matplotlib inline
from fastai.learner import *
# torchtext: PyTorch NLP library
import torchtext
from torchtext import vocab, data
from torchtext.datasets import language_modeling
from fastai.rnn_reg import *
from fastai.rnn_train import *
from fastai.nlp import *
from fastai.lm_rnn import *
import dill as pickle
import spacy

Fine-tuning a pre-trained network is really powerful.

If we can get it to learn some related task first, then we can use all that information to help it on the second task.

After reading a thousand words, knowing nothing about how English is structured or about the concept of a word or punctuation, all you get is a 1 or a 0 (positive or negative).

Trying to learn the entire structure of English, and then how it expresses positive and negative sentiment, from a single number is just too much to expect.

Step 1. List categorical variable names, and list continuous variable names, and put them in a Pandas data frame

Step 2. Create a list of which row indexes you want in your validation set

Step 3. Call this exact line of code:

md = ColumnarModelData.from_data_frame(PATH, val_idx, df,
    yl.astype(np.float32), cat_flds=cat_vars, bs=128,
    test_df=df_test)

Step 4. Create a list of how big you want each embedding matrix to be

Step 5. Call get_learner — you can use these exact parameters to start with:

m = md.get_learner(emb_szs, len(df.columns)-len(cat_vars), 0.04, 1,
    [1000,500], [0.001,0.01], y_range=y_range)

Step 6. Call m.fit

m.fit(lr, 1, metrics=[exp_rmspe], cycle_len=1)

m.fit(lr, 3, metrics=[exp_rmspe])

lr = 1e-3

m = md.get_learner(emb_szs, len(df.columns)-len(cat_vars),

0.04, 1, [1000,500], [0.001,0.01],

y_range=y_range)

emb_szs : embedding sizes
len(df.columns)-len(cat_vars) : number of continuous variables in the data frame
0.04 : the embedding matrix has its own dropout, and this is the dropout rate
1 : how many outputs we want to create (output of the last linear layer)
[1000, 500] : number of activations in the first linear layer and the second linear layer
[0.001, 0.01] : dropout in the first linear layer and the second linear layer
y_range : we will not worry about that for now

Embedding : parameters that we are learning that happen to end up giving us a good loss. We will discover later that these particular parameters are often human-interpretable and quite interesting, but that is a side effect.

emb_szs = [(c, min(50, (c+1)//2)) for _,c in cat_sz]
emb_szs
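The rule of thumb in emb_szs (half the cardinality, rounded up, capped at 50) can be tried on a few of the cardinalities listed in cat_sz. The wrapper name embedding_sizes and the max_size parameter are hypothetical; the arithmetic is the same as in the line above.

```python
def embedding_sizes(cat_sz, max_size=50):
    """Pair each category count with an embedding width of min(max_size, (c + 1) // 2)."""
    return [(c, min(max_size, (c + 1) // 2)) for _, c in cat_sz]


sizes = embedding_sizes([("Store", 1116), ("DayOfWeek", 8), ("Year", 4)])
print(sizes)  # [(1116, 50), (8, 4), (4, 2)]
```

High-cardinality columns such as Store hit the cap of 50, while small ones such as Year get only a couple of dimensions.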

cat_sz = [(c, len(joined_samp[c].cat.categories)+1)
          for c in cat_vars]

cat_sz

[('Store', 1116),

('DayOfWeek', 8),

('Year', 4),

('Month', 13),

('Day', 32),

('StateHoliday', 3),

('CompetitionMonthsOpen', 26),

('Promo2Weeks', 27),

('StoreType', 5),

('Assortment', 4),

('PromoInterval', 4),

('CompetitionOpenSinceYear', 24),

('Promo2SinceYear', 9),

('State', 13),

('Week', 53),

('Events', 22),

('Promo_fw', 7),

('Promo_bw', 7),

('StateHoliday_fw', 4),

('StateHoliday_bw', 4),

('SchoolHoliday_fw', 9),

('SchoolHoliday_bw', 9)]

lr_find, then call learn.fit and so forth.

md = ColumnarModelData.from_data_frame(PATH, val_idx, df,
    yl.astype(np.float32), cat_flds=cat_vars, bs=128,
    test_df=df_test)

Info

PATH : specifies where to store model files, etc.
val_idx : a list of the indexes of the rows that we want to put in the validation set
df : data frame that contains the independent variables
yl : we took the dependent variable y returned by proc_df and took the log of that (i.e. np.log(y))
cat_flds : which columns are to be treated as categorical. Remember, by this time, everything is a number, so unless we specify, it will treat them all as continuous.

def inv_y(a): return np.exp(a)

def exp_rmspe(y_pred, targ):
    targ = inv_y(targ)
    pct_var = (targ - inv_y(y_pred))/targ
    return math.sqrt((pct_var**2).mean())
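A small worked example may help with exp_rmspe: both predictions and targets are in log space, so they are exponentiated before the percentage error is taken. The version below is a sketch in plain Python on lists (the original works on NumPy arrays), and the sales figures are made up for illustration.

```python
import math


def exp_rmspe(log_pred, log_targ):
    """Root mean squared percentage error, computed after undoing the log."""
    pct_errors = [
        (math.exp(t) - math.exp(p)) / math.exp(t)
        for p, t in zip(log_pred, log_targ)
    ]
    return math.sqrt(sum(e * e for e in pct_errors) / len(pct_errors))


# Hypothetical sales of 100 and 200, predicted as 110 and 180: both are 10% off.
score = exp_rmspe(
    [math.log(110), math.log(180)],
    [math.log(100), math.log(200)],
)
print(score)
```

An error of 10 on a target of 100 counts the same as an error of 20 on a target of 200, which is the point of a percentage metric.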

max_log_y = np.max(yl)

y_range = (0, max_log_y*1.2)

val_idx = np.flatnonzero((df.index<=datetime.datetime(2014,9,17)) &
                         (df.index>=datetime.datetime(2014,8,1)))

df does not have the Sales column, and y contains only the Sales column.

do_scale : Neural nets really like to have the input data all be somewhere around zero with a standard deviation of somewhere around 1. So we take our data, subtract the mean, and divide by the standard deviation to make that happen. It returns a special object which keeps track of what mean and standard deviation it used for that normalization so you can do the same to the test set later (mapper).

df, y, nas, mapper = proc_df(joined_samp, 'Sales', do_scale=True)
yl = np.log(y)

# get_cv_idxs(n) gives back a random 20% of the row indexes by default; here val_pct is set so that 150,000 rows are sampled
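The role of the mapper can be sketched with a tiny standardizer that remembers the training mean and standard deviation and reuses them on new data. The class name Standardizer is hypothetical, and using the population standard deviation is an assumption; this is not the object proc_df returns.

```python
import statistics


class Standardizer:
    """Remember the mean and standard deviation of the training values."""

    def fit(self, values):
        self.mean = statistics.mean(values)
        self.std = statistics.pstdev(values)  # population standard deviation
        return self

    def transform(self, values):
        # Always use the statistics learned in fit, never those of `values`.
        return [(v - self.mean) / self.std for v in values]


scaler = Standardizer().fit([1.0, 2.0, 3.0])
train_scaled = scaler.transform([1.0, 2.0, 3.0])
test_scaled = scaler.transform([2.0, 4.0])  # scaled with the training statistics
print(train_scaled, test_scaled)
```

Reusing the training statistics on the test set keeps both sets on the same scale, which is why the fitted object has to be kept around.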

idxs = get_cv_idxs(n, val_pct=150000/n)

# DataFrame.iloc

# Integer-location based indexing for selection by position.

# Set the DataFrame index (row labels) using one or more existing columns or arrays (of the correct length). The index can replace the existing index or expand on it.

joined_samp = joined.iloc[idxs].set_index("Date")

samp_size = len(joined_samp); samp_size

# Observe Data

joined_samp.head(2)

There are two types of columns:

cat_vars = ['Store', 'DayOfWeek', 'Year', 'Month', 'Day',

'StateHoliday', 'CompetitionMonthsOpen', 'Promo2Weeks',

'StoreType', 'Assortment', 'PromoInterval',

'CompetitionOpenSinceYear', 'Promo2SinceYear', 'State',

'Week', 'Events', 'Promo_fw', 'Promo_bw',

'StateHoliday_fw', 'StateHoliday_bw',

'SchoolHoliday_fw', 'SchoolHoliday_bw']

contin_vars = ['CompetitionDistance', 'Max_TemperatureC',

'Mean_TemperatureC', 'Min_TemperatureC',

'Max_Humidity', 'Mean_Humidity', 'Min_Humidity',

'Max_Wind_SpeedKm_h', 'Mean_Wind_SpeedKm_h',

'CloudCover', 'trend', 'trend_DE',

'AfterStateHoliday', 'BeforeStateHoliday', 'Promo',

'SchoolHoliday']

n = len(joined); n

cat_vars : turn the applicable data frame columns into categorical columns.
contin_vars : set them as float32 (32-bit floating point) because that is what PyTorch expects.

dep = 'Sales'
joined = joined[cat_vars+contin_vars+[dep, 'Date']].copy()

for v in cat_vars:
    joined[v] = joined[v].astype('category').cat.as_ordered()

for v in contin_vars:
    joined[v] = joined[v].astype('float32')

If you are using year as a category, what happens when a model encounters a year it has never seen before? We will get there, but the short answer is that it will be treated as an unknown category. Pandas has a special category called unknown, and if it sees a category it has not seen before, it gets treated as unknown.
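One way to picture the unknown category is an integer coding that reserves 0 for anything not seen during training, which is also why cat_sz adds 1 to each category count. The sketch below is plain Python with hypothetical helper names and is not how pandas or fastai implement it.

```python
def fit_categories(values):
    """Assign codes 1..k to the distinct training values; 0 is kept for unknown."""
    return {value: index + 1 for index, value in enumerate(sorted(set(values)))}


def encode(value, codes):
    """Look up the code of a value, falling back to 0 for unseen values."""
    return codes.get(value, 0)


codes = fit_categories([2013, 2014, 2015, 2014])
print(encode(2013, codes))  # 1
print(encode(2016, codes))  # 0, a year that was never seen in training
print(len(codes) + 1)       # 4 rows needed in the embedding matrix
```

With three distinct years the embedding needs four rows, matching the ('Year', 4) entry in cat_sz.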

Year, Month: although we could treat them as continuous, we do not have to. If we decide to make Year a categorical variable, we are telling our neural net that for every different “level” of Year (2000, 2001, 2002), it can treat it totally differently; whereas if we say it is continuous, it has to come up with some kind of smooth function to fit them. So for things that actually are continuous but do not have many distinct levels (e.g. Year, DayOfWeek), it often works better to treat them as categorical.

# store data and csv files observe the same

# Example of cats and dogs

# list directories of 'PATH'

os.listdir(PATH)

# list directories of 'train'

os.listdir(f'{PATH}train')

# or

# Example 1: Binary Image Classification
# PATH is the path to the data
PATH='/home/paperspace/fastai/courses/SelfCodes/Structured and Time series analysis/data/'
os.chdir(PATH)
%pwd

# An NVIDIA GPU with the CUDA programming framework is critical; the following command must return True
torch.cuda.is_available()

# Make sure cuDNN, the CUDA deep learning library, is enabled to improve training performance (preferred)
torch.backends.cudnn.enabled

# Auto-reload modules in the Jupyter notebook (so that changes in *.py files don't require manual reloading):
%reload_ext autoreload
%autoreload 2
# Display the output of plotting commands inline within the Jupyter notebook frontend
%matplotlib inline