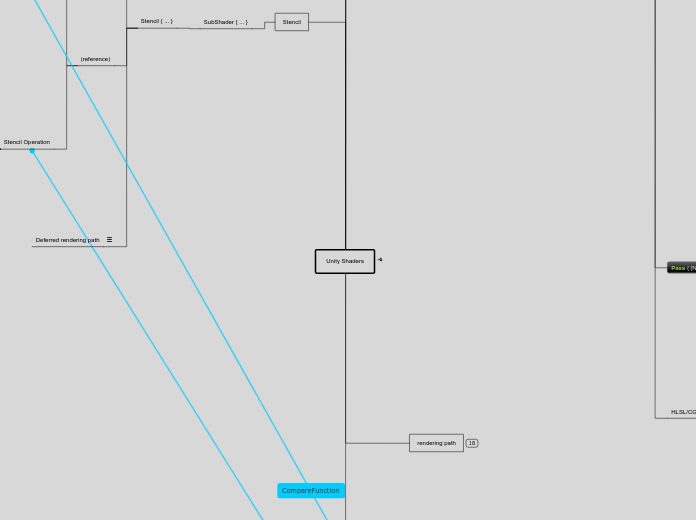

Unity Shaders

Stencil

SubShader { ... }

Stencil { ... }

Deferred rendering path

Stencil functionality for objects rendered in the deferred rendering path is somewhat limited, as during the base pass and lighting pass the stencil buffer is used for other purposes. During those two stages stencil state defined in the shader will be ignored and only taken into account during the final pass. Because of that it’s not possible to mask out these objects based on a stencil test, but they can still modify the buffer contents, to be used by objects rendered later in the frame. Objects rendered in the forward rendering path following the deferred path (e.g. transparent objects or objects without a surface shader) will set their stencil state normally again.

The deferred rendering path uses the three highest bits of the stencil buffer, plus up to four of the next-highest bits, depending on how many light mask layers are used in the scene. It is possible to operate within the range of the “clean” bits using the stencil read and write masks, or you can force the camera to clean the stencil buffer after the lighting pass using Camera.clearStencilAfterLightingPass.
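To make the bit budget concrete, here is a small Python model (not Unity API) of which stencil bits stay "clean". It assumes, as the paragraph above states, that deferred rendering reserves the three highest bits plus `extra_bits` (0 to 4) for light mask layers. The function name `free_stencil_mask` is hypothetical.

```python
def free_stencil_mask(extra_bits: int = 0) -> int:
    """Return an 8-bit mask of the stencil bits not reserved by deferred rendering.

    extra_bits is an assumption of this sketch: how many additional high bits
    (0..4) are taken by light mask layers on top of the three fixed ones.
    """
    if not 0 <= extra_bits <= 4:
        raise ValueError("extra_bits must be between 0 and 4")
    reserved = 3 + extra_bits          # bits taken from the top of the byte
    return (1 << (8 - reserved)) - 1   # the remaining low bits


if __name__ == "__main__":
    for extra in range(5):
        print(extra, format(free_stencil_mask(extra), "08b"))
```

A mask like this is the kind of value you would use for the stencil read and write masks so that your own stencil work stays inside the clean bits.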

(reference)

Stencil Operation

DecrWrap

Decrement the current value in the buffer. If the value is 0 already, it becomes 255.

IncrWrap

Increment the current value in the buffer. If the value is 255 already, it becomes 0.

Invert

Negate all the bits.

DecrSat

Decrement the current value in the buffer. If the value is 0 already, it stays at 0.

IncrSat

Increment the current value in the buffer. If the value is 255 already, it stays at 255.

Replace

Write the reference value into the buffer.

Zero

Write 0 into the buffer.

Keep

Keep the current contents of the buffer.
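The operations listed above can be sketched as a small Python model of an 8-bit stencil value. This is only an illustration of the described behaviour, not Unity code. The function name `apply_stencil_op` is hypothetical, and the write mask is ignored for simplicity.

```python
def apply_stencil_op(op: str, current: int, ref: int = 0) -> int:
    """Return the new 8-bit buffer value after applying a stencil operation."""
    ops = {
        "Keep":     lambda: current,
        "Zero":     lambda: 0,
        "Replace":  lambda: ref,
        "IncrSat":  lambda: min(current + 1, 255),   # clamps at 255
        "DecrSat":  lambda: max(current - 1, 0),     # clamps at 0
        "Invert":   lambda: ~current,                # negate all the bits
        "IncrWrap": lambda: current + 1,             # 255 wraps to 0
        "DecrWrap": lambda: current - 1,             # 0 wraps to 255
    }
    return ops[op]() & 0xFF  # keep the result within 8 bits


if __name__ == "__main__":
    for name in ("IncrWrap", "IncrSat", "DecrWrap", "DecrSat", "Invert"):
        print(name, apply_stencil_op(name, 255), apply_stencil_op(name, 0))
```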

Comparison Function

Never

Make the stencil test always fail.

Always

Make the stencil test always pass.

NotEqual

Only render pixels whose reference value differs from the value in the buffer.

Equal

Only render pixels whose reference value equals the value in the buffer.

LEqual

Only render pixels whose reference value is less than or equal to the value in the buffer.

Less

Only render pixels whose reference value is less than the value in the buffer.

GEqual

Only render pixels whose reference value is greater than or equal to the value in the buffer.

Greater

Only render pixels whose reference value is greater than the value in the buffer.
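As a rough Python model (not Unity code) of the comparison functions above: the reference value goes on the left-hand side of the comparison and the buffer value on the right. Applying a read mask to both sides before comparing is an assumption of this sketch, and the name `stencil_test` is hypothetical.

```python
import operator

# Each function receives (reference value, buffer value).
COMPARE = {
    "Never":    lambda ref, buf: False,
    "Always":   lambda ref, buf: True,
    "Equal":    operator.eq,
    "NotEqual": operator.ne,
    "Less":     operator.lt,
    "LEqual":   operator.le,
    "Greater":  operator.gt,
    "GEqual":   operator.ge,
}


def stencil_test(comp: str, ref: int, buf: int, read_mask: int = 0xFF) -> bool:
    """Return True if a pixel with this reference value would pass the test."""
    return COMPARE[comp](ref & read_mask, buf & read_mask)


if __name__ == "__main__":
    # Ref 1 with Comp NotEqual: pixels pass wherever the buffer is not 1.
    print(stencil_test("NotEqual", ref=1, buf=0))
    print(stencil_test("NotEqual", ref=1, buf=1))
```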

(example)

Pass

keep

(stencilOperation)

// What to do with the contents of the buffer if the stencil test (and the depth test) passes. Default: keep.

Comp

notequal

(comparisonFunction)

// The function used to compare the reference value to the current contents of the buffer. Default: always.

Ref

1

(referenceValue)

0–255 integer

The value to be compared against (if Comp is anything other than always) and/or the value to be written to the buffer (if either Pass, Fail or ZFail is set to replace).

// Keep buffer if stencil buffer is not equal to 1

RenderTarget

Object

Hidden/

Camera-DepthNormalTexture

replace

SubShaderArray

"mton_Mode"

Color (1,1,1,1)

Fog { Mode Off }

"RenderType"="Ethereal"

Color (0,0,0,0)

//color.a == _MainTex.a in composite shader

"RenderType"="Opaque"

"RenderType"="TagValue"

"Ethereal"

Camera

xform

composite

mtonComposite.

SubShader

Pass{}

Fallback off

half4 frag (v2f i) : COLOR{}

return color;

struct v2f {}

float2 uv[2] : TEXCOORD0;

_MaskTex: register(s2);

_EthrTex : register(s1);

_MainTex : register(s0);

"UnityCG.cginc"

fragmentoption ARB_precision_hint_fastest

fragment frag

exclude_renderers gles

ZTest Always Cull Off ZWrite Off Fog { Mode off }

GrabPass {}

ShaderLab syntax: GrabPass

GrabPass is a special pass type: it grabs the contents of the screen where the object is about to be drawn into a texture. This texture can be used in subsequent passes to do advanced image-based effects.

Syntax

The GrabPass belongs inside a subshader. All properties are optional.

GrabPass {

TextureScale 0.5

TextureSize 256

BorderScale 0.3

// following are regular pass commands

Tags { "LightMode" = "VertexLit" }

Name "BASE"

}

Details

You can grab the screen behind the object being rendered in order to use it in a later pass. This is done with the GrabPass command. In a subsequent pass, you can access the grabbed screen as a texture, distorting what is behind the object. This is typically used to create stained glass and other refraction-like effects.

The region grabbed from the screen is available to subsequent passes as _GrabTexture texture property.

After grabbing, BorderScale gets converted into screenspace coordinates as _GrabBorderPixels property. This is the maximum amount you can displace within subsequent passes.

Unity will reuse the screen texture between different objects doing GrabPass. This means that one refractive object will not refract another.

Example

So Much For So Little

Here is the most complex way ever of rendering nothing.

Shader "ComplexInvisible" {

SubShader {

// Draw ourselves after all opaque geometry

Tags { "Queue" = "Transparent" }

// Grab the screen behind the object into _GrabTexture, using default values

GrabPass { }

// Render the object with the texture generated above.

Pass {

SetTexture [_GrabTexture] { combine texture }

}

}

}

This shader has two passes: First pass grabs whatever is behind the object at the time of rendering, then applies that in the second pass. Note that the _GrabTexture is configured to display at the exact position of the object - hence it becomes transparent.

BorderScale

Specifies that you want to grab an extra region around the object. The value is relative to the object's bounding box.

TextureScale

Specifies that you want the texture to be a certain scale of the object's screen size. This is the default behaviour.

TextureSize

Specifies that you want the grabbed texture to have a certain pixel dimension.

Tags{}

"RenderEffect"="Ethereal"

Properties {}

_MaskTex

_EthrTex

_MainTex

("", RECT) = "white" {}

// IMPLICIT : Carries render from camera Can't overwrite.

layermask

cam.cullingMask = 1<<(LayerMask.NameToLayer("Ethereal"))
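The culling mask above is a plain bit field with one bit per layer. The following Python sketch (not Unity API) models that arithmetic. The helper names `layer_mask` and `is_rendered` are hypothetical, and the layer index 8 stands in for whatever `LayerMask.NameToLayer("Ethereal")` returns in a given project.

```python
def layer_mask(*layer_indices: int) -> int:
    """Build a culling mask with one bit set per layer index (0..31)."""
    mask = 0
    for index in layer_indices:
        if not 0 <= index < 32:
            raise ValueError("layer index must be between 0 and 31")
        mask |= 1 << index
    return mask


def is_rendered(object_layer: int, culling_mask: int) -> bool:
    """An object is drawn only if its layer's bit is set in the mask."""
    return bool(culling_mask & (1 << object_layer))


if __name__ == "__main__":
    ethereal = 8  # assumed index for the "Ethereal" layer
    mask = layer_mask(ethereal)
    print(mask, is_rendered(ethereal, mask), is_rendered(0, mask))
```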

RenderWithShader(shader: Shader, replacementTag: string): void ;

replacementTag

built-in

camera

Under the hood

Depth texture can come directly from the actual depth buffer, or be rendered in a separate pass, depending on the rendering path used and the hardware. When the depth texture is rendered in a separate pass, this is done through Shader Replacement. Hence it is important to have correct "RenderType" tag in your shaders.

In some cases, the depth texture might come directly from the native Z buffer. If you see artifacts in your depth texture, make sure that the shaders that use it do not write into the Z buffer (use ZWrite Off).

When implementing complex shaders or Image Effects, keep Rendering Differences Between Platforms in mind. In particular, using depth texture in an Image Effect often needs special handling on Direct3D + Anti-Aliasing.

Z-depth

// Generate a depth + normals texture

camera.depthTextureMode = DepthTextureMode.DepthNormals;

DepthTextureMode.DepthNormals

A screen-sized 32-bit (8 bits per channel) texture, where view space normals are encoded into the R and G channels, and depth is encoded in the B and A channels.

Depth is a 16-bit value packed into two 8-bit channels.

Normals are encoded using stereographic projection.

For examples on how to use the depth and normals texture, please refer to the EdgeDetection image effect in the Shader Replacement example project or SSAO Image Effect.

UnityCG.cginc include file has a helper function DecodeDepthNormal to decode depth and normal from the encoded pixel value. Returned depth is in 0..1 range.
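To illustrate how a 0..1 depth value can be packed into two 8-bit channels and recovered, here is a Python sketch of one plausible packing scheme. It is an assumption for illustration only. The authoritative encoding is whatever UnityCG.cginc implements, and the names `encode_float_rg` and `decode_float_rg` are used here as hypothetical Python helpers.

```python
from typing import Tuple


def encode_float_rg(v: float) -> Tuple[int, int]:
    """Pack a value in [0, 1) into two bytes (coarse part, fine part)."""
    if not 0.0 <= v < 1.0:
        raise ValueError("v must be in [0, 1)")
    fine = (v * 255.0) % 1.0        # fractional remainder within one coarse step
    coarse = v - fine / 255.0       # now an exact multiple of 1/255
    return round(coarse * 255.0), round(fine * 255.0)


def decode_float_rg(coarse_byte: int, fine_byte: int) -> float:
    """Recover the packed value from the two bytes."""
    return coarse_byte / 255.0 + (fine_byte / 255.0) / 255.0


if __name__ == "__main__":
    for depth in (0.0, 0.25, 0.5, 0.999):
        packed = encode_float_rg(depth)
        print(depth, packed, decode_float_rg(*packed))
```

The coarse byte carries steps of 1/255 and the fine byte subdivides each step again, which is where the roughly 16 bits of precision come from.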

DepthTextureMode.Depth

This builds a screen-sized depth texture.

"RenderType"

!=""

cam.RenderWithShader (replaceShader,"RenderType")

functions

SetReplacementShader(shader: Shader, replacementTag: string): void;

WTHeck is this

Make the camera render with shader replacement.

ResetReplacementShader(): void

Remove shader replacement from camera.

for each object that would be rendered

The real object's shader is queried for the tag value.

object is rendered

1st subshader in replace Shader has tag value == the real object shader's tag value("Ethereal")

object is not rendered.

if no subshader in replaceShader has tag value == the real object shader's tag value ("RenderType")

If no subshader in replaceShader has tag name == "RenderType"

==""

cam.RenderWithShader (replaceShader,"")

all objects in the scene are rendered with the given replacement shader.

using the 1st subshader listed

can be a collection of sub shaders

will map current object's with replacement shader's subshader tag name and tag value
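The selection rules above can be modelled in a few lines of Python. This is a sketch of the described behaviour rather than Unity code. Shaders are represented as plain dictionaries of tags, and the function name `pick_subshader` is hypothetical.

```python
from typing import Dict, List, Optional


def pick_subshader(
    replacement_subshaders: List[Dict[str, str]],
    object_tags: Dict[str, str],
    replacement_tag: str,
) -> Optional[int]:
    """Return the index of the replacement subshader to use, or None if the
    object is not rendered."""
    if replacement_tag == "":
        # Empty tag: every object is drawn with the first subshader.
        return 0 if replacement_subshaders else None
    value = object_tags.get(replacement_tag)
    if value is None:
        return None  # the real shader lacks the tag: object is skipped
    for index, tags in enumerate(replacement_subshaders):
        if tags.get(replacement_tag) == value:
            return index  # first subshader with a matching tag value wins
    return None  # no subshader matches: object is skipped


if __name__ == "__main__":
    replacement = [{"RenderType": "Opaque"}, {"RenderType": "Ethereal"}]
    print(pick_subshader(replacement, {"RenderType": "Ethereal"}, "RenderType"))
    print(pick_subshader(replacement, {"RenderType": "Transparent"}, "RenderType"))
```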

This is used for special effects, e.g. rendering screenspace normal buffer of the whole scene, heat vision and so on.

This will render the camera. It will use the camera's clear flags, target texture and all other settings.

The camera will not send OnPreCull, OnPreRender or OnPostRender to attached scripts. Image filters will not be rendered either.

To make use of this feature, usually you create a camera and disable it. Then call RenderWithShader on it.

You are not able to call the Render function from a camera that is currently rendering. If you wish to do this create a copy of the camera, and make it match the original one using CopyFrom.

MonoBehaviour

mt_Camera_Ethereal.js

Attach to Camera.

//RenderToQuad

DownSample4x(src,dest)

// Downsamples the texture to a quarter resolution.

FourTapCone(src,dest,i)

// Performs one blur iteration.

Render4TapQuad(dest, OffSetX, OffSet)

Renders the image to a quad

Sets up GL.QUADS

Set4TexCoords(x,y,offX,offY)

OnRenderImage(src, dest)

//blur and buffer images

DownSample4x (renderTexture, buffer);

FourTapCone (buffer, buffer2, i);

//set sources @ composite shader

var compositeMat = GetCompositeMaterial();

compositeMat.SetTexture ("_EthrTex", delTexture) ;

// _MainTex set automatically by camera

compositeMat.SetTexture ("_MaskTex", mskTexture) ;

//blit

ImageEffects.BlitWithMaterial(compositeMat, source, destination);

//clean up

RenderTexture.ReleaseTemporary(buffer);

RenderTexture.ReleaseTemporary(buffer2);

OnPreRender()

//replace Shader

cam.RenderWithShader (replaceShader, "RenderType");

//path to RenderTarget

cam.targetTexture = renderTexture;

//init shaderCamera

//copy Main Camera Setting

cam.clearFlags = CameraClearFlags.SolidColor;

cam.backgroundColor = Color(0,0,0,0);

//black background == additive effect

cam.CopyFrom (camera);

//copying FOV, resolution...etc???

var cam = shaderCamera.camera;

if (!shaderCamera) {}

shaderCamera.hideFlags = HideFlags.HideAndDontSave;

shaderCamera.camera.enabled = false;

//???Why disable???

shaderCamera = new GameObject("ShaderCamera", Camera);

//init renderTexture

renderTexture = RenderTexture.GetTemporary (camera.pixelWidth, camera.pixelHeight, 16);

static function GetTemporary(width: int, height: int, depthBuffer: int = 0, format: RenderTextureFormat = RenderTextureFormat.Default, readWrite: RenderTextureReadWrite = RenderTextureReadWrite.Default, antiAliasing: int = 1): RenderTexture;

Parameters

width Width in pixels.

height Height in pixels.

depthBuffer Depth buffer bits (0, 16 or 24).

format Render texture format.

readWrite sRGB handling mode.

antiAliasing Anti-aliasing (1,2,4,8).

Description

Allocate a temporary render texture.

This function is optimized for when you need a quick RenderTexture to do some temporary calculations. Release it using ReleaseTemporary as soon as you're done with it, so another call can start reusing it if needed.

Internally Unity keeps a pool of temporary render textures, so a call to GetTemporary most often just returns an already created one (if the size and format matches). These temporary render textures are actually destroyed when they aren't used for a couple of frames.

If you are doing a series of post-processing "blits", it's best for performance to get and release a temporary render texture for each blit, instead of getting one or two render textures upfront and reusing them. This is mostly beneficial for mobile (tile-based) and multi-GPU systems: GetTemporary will internally do a DiscardContents call which helps to avoid costly restore operations on the previous render texture contents.

You can not depend on any particular contents of the RenderTexture you get from GetTemporary function. It might be garbage, or it might be cleared to some color, depending on the platform.
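The pooling behaviour described above can be pictured with a toy Python model. This is purely illustrative and says nothing about Unity's real internals. The class `TemporaryPool` and its fields are hypothetical, and textures are stood in for by small dictionaries.

```python
class TemporaryPool:
    """Toy pool: released textures are reused when size and format match."""

    def __init__(self):
        self._free = {}     # descriptor -> list of idle textures
        self.created = 0    # how many textures were actually allocated

    def get_temporary(self, width, height, depth_bits=0, fmt="Default"):
        key = (width, height, depth_bits, fmt)
        idle = self._free.get(key)
        if idle:
            return idle.pop()  # reuse: contents are whatever was left in it
        self.created += 1
        return {"desc": key, "id": self.created}

    def release_temporary(self, texture):
        self._free.setdefault(texture["desc"], []).append(texture)


if __name__ == "__main__":
    pool = TemporaryPool()
    a = pool.get_temporary(256, 256, 16)
    pool.release_temporary(a)
    b = pool.get_temporary(256, 256, 16)
    print(b is a, pool.created)
```

Releasing as soon as possible is what lets the next request with the same descriptor reuse the texture instead of allocating a new one.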

if(renderTexture != null){}

RenderTexture.ReleaseTemporary (renderTexture);

renderTexture = null;

Start()

disable if

!GetMaterial().shader.isSupported

!SystemInfo.supportsImageEffect

OnDisable()

RenderTexture.ReleaseTemporary()

renderTexture == null

DestroyImmediate()

m_Material.shader

??? why this order?

var

private

GameObject

shaderCamera

RenderTexture

renderTexture

Material

m_CompositeMaterial

GetCompositeMaterial()

m_CompositeMaterial = new Material( compositeShader )

m_Material

GetMaterial()

m_Material = new Material(blurMatString)

public

String

blurMatString

blurRamp

colorRamp

req

@script RequireComponent(Camera)

@script AddComponentMenu("Image Effects/Funky Glowing Things")

@script ExecuteInEditMode

Render()

// Take a "screenshot" of a camera's Render Texture.

function RTImage(cam: Camera) {

// The Render Texture in RenderTexture.active is the one

// that will be read by ReadPixels.

var currentRT = RenderTexture.active;

RenderTexture.active = cam.targetTexture;

// Render the camera's view.

cam.Render();

// Make a new texture and read the active Render Texture into it.

var image = new Texture2D(cam.targetTexture.width, cam.targetTexture.height);

image.ReadPixels(new Rect(0, 0, cam.targetTexture.width, cam.targetTexture.height), 0, 0);

// Replace the original active Render Texture.

RenderTexture.active = currentRT;

return image;

}

Render the camera. It will use the camera's clear flags, target texture and all other settings.

You are not able to call the Render function from a camera that is currently rendering.

If you wish to do this create a copy of the camera, and make it match the original one using CopyFrom.

The camera will send OnPreCull, OnPreRender & OnPostRender to any scripts attached, and render any eventual image filters.

This is used for taking precise control of render order. To make use of this feature, create a camera and disable it. Then call Render on it.

UnityEngine.Rendering

Enumerations

AmbientMode

Custom

// Ambient lighting is defined by a custom cube map.

Flat

// Flat ambient lighting.

Trilight

ground

RenderSettings.ambientGroundColor

equator

RenderSettings.ambientEquatorColor

sky

RenderSettings.ambientSkyColor

// Trilight ambient lighting.

Skybox

// Skybox-based or custom ambient lighting.

// Ambient lighting mode.

// Unity can provide ambient lighting in several modes, for example directional ambient with separate sky, equator and ground colors, or flat ambient with a single color.

BlendMode

// Blend mode for controlling the blending.

// The blend mode is set separately for source and destination, and it controls the blend factor of each component going into the blend equation. It is also possible to set the blend mode for color and alpha components separately. Note: the blend modes are ignored if logical blend operations or advanced OpenGL blend operations are in use.

(renderTarget)

BlendOp

// The blend operation that is used to combine the pixel shader output with the render target. This can be passed through Material.SetInt() to change the blend operation during runtime.

//Note that the logical operations are only supported in Gamma (non-sRGB) colorspace, on DX11.1 hardware running on DirectX 11.1 runtime.

(stencil)

StencilOp

UnityEngine.Rendering.StencilOp

// Specifies the operation that's performed on the stencil buffer when rendering.

CompareFunction

// Depth or stencil comparison function.

Classes

Shader

(func)

(keyword)

IsKeywordEnabled

// Is global shader keyword enabled?

EnableKeyword

// Set a global shader keyword.

DisableKeyword

// Unset a global shader keyword.

WarmupAllShaders

// Fully load all shaders to prevent future performance hiccups.

Find

// Finds a shader with the given name.

(global properties)

SetGlobalVector

SetGlobalTexture

SetGlobalMatrix

SetGlobalFloat

SetGlobalInt

// Internally float and integer shader properties are treated exactly the same, so this function is just an alias to SetGlobalFloat.

// Usually this is used if you have a set of custom shaders that all use the same "global" float (for example, density of some custom fog type). Then you can set the global property from script and don't have to setup the same float in all materials.

SetGlobalColor

// Usually this is used if you have a set of custom shaders that all use the same "global" color (for example, color of the sun). Then you can set the global property from script and don't have to setup the same color in all materials.

Shader.SetGlobalColor("_SkyColor", color);

Shader.SetGlobalColor("_EnvironmentColor", color);

SetGlobalBuffer

// Sets a global compute buffer property for all shaders.

PropertyToID

// Gets unique identifier for a shader property name.

// Each name of shader property (for example, _MainTex or _Color) is assigned an unique integer number in Unity, that stays the same for the whole game. The numbers will not be the same between different runs of the game or between machines, so do not store them or send them over network.

// Do this for Uv to transform

// The Shader class is mostly used to check whether a shader can run on the user's hardware (isSupported property), to set up global shader properties and keywords, and to find shaders by name (Find method).

rendering path

Deferred Rendering

Final pass

The final pass produces the final rendered image. Here all objects are rendered again with shaders that fetch the lighting, combine it with textures and add any emissive lighting. Lightmaps are also applied in the final pass. Close to the camera, realtime lighting is used, and only baked indirect lighting is added. This crossfades into fully baked lighting further away from the camera.

objects are rendered again. They fetch the computed lighting, combine it with color textures and add any ambient/emissive lighting.

Lighting pass

The lighting pass computes lighting based on depth, normals and specular power. Lighting is computed in screen space, so the time it takes to process is independent of scene complexity. The lighting buffer is a single ARGB32 Render Texture, with diffuse lighting in the RGB channels and monochrome specular lighting in the A channel. Lighting values are logarithmically encoded to provide greater dynamic range than is usually possible with an ARGB32 texture. The only lighting model available with deferred rendering is Blinn-Phong.

Point and spot lights that do not cross the camera's near plane are rendered as 3D shapes, with the Z buffer's test against the scene enabled. This makes partially or fully occluded point and spot lights very cheap to render. Directional lights and point/spot lights that cross the near plane are rendered as fullscreen quads.

If a light has shadows enabled then they are also rendered and applied in this pass. Note that shadows do not come for "free"; shadow casters need to be rendered and a more complex light shader must be applied.

The only lighting model available is Blinn-Phong. If a different model is wanted you can modify the lighting pass shader, by placing the modified version of the Internal-PrePassLighting.shader file from the Built-in shaders into a folder named "Resources" in your "Assets" folder.

the previously generated buffers are used to compute lighting into another screen-space buffer.

Base Pass

The base pass renders each object once. View space normals and specular power are rendered into a single ARGB32 Render Texture (with normals in RGB channels and specular power in A). If the platform and hardware allow the Z buffer to be read as a texture then depth is not explicitly rendered. If the Z buffer can't be accessed as a texture then depth is rendered in an additional rendering pass using shader replacement.

The result of the base pass is a Z buffer filled with the scene contents and a Render Texture with normals and specular power.

objects are rendered to produce screen-space buffers with depth, normals, and specular power

Forward Rendering

Vertex Lit

environment

lights

shadows

dynamic lights

baked lights

global illumination

lightprobes

samples global illum for skin shapes

ShaderLab

SubShader { [Tags] [CommonState] Passdef [Passdef ...] }

HLSL/CGFX

ENDCG

body

half4 frag (v2f i) : COLOR { }

struct v2f { }

uniform

float

sampler2D

#include

#pragma

CGPROGRAM

??? Overwrites pass???

Pass { [Name and Tags] [RenderSetup] [TextureSetup] }

Details

Per-vertex Lighting

Per-vertex lighting is the standard Direct3D/OpenGL lighting model that is computed for each vertex. The command Lighting On turns it on. Lighting is affected by the Material block and the ColorMaterial and SeparateSpecular commands. See material page for details.

Per-pixel Lighting

The per-pixel lighting pipeline works by rendering objects in multiple passes. Unity renders the object once to get ambient and any vertex lights in. Then it renders each pixel light affecting the object in a separate additive pass. See Render Pipeline for details.

RenderSetup

type (vertex op?)

Fixed Function Texture Combiners

SetTexture [_MainTex] { Combine texture * primary DOUBLE, texture * primary }

Combiner

combine src1 * src2 - src3

Multiplies src1 with the alpha component of src2, then subtracts src3.

combine src1 * src2 +- src3

Multiplies src1 with the alpha component of src2, then does a signed add with src3.

combine src1 * src2 + src3

Multiplies src1 with the alpha component of src2, then adds src3.

combine src1 lerp (src2) src3

Interpolates between src3 and src1, using the alpha of src2. Note that the interpolation is opposite direction: src1 is used when alpha is one, and src3 is used when alpha is zero.

combine src1 +- src2

Adds src1 to src2, then subtracts 0.5 (a signed add).

combine src1 - src2

Subtracts src2 from src1.

combine src1 + src2

Adds src1 and src2 together. The result will be lighter than either input.

combine src1 * src2

Multiplies src1 and src2 together. The result will be darker than either input.
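A few of the combiner formulas above, modelled in Python on RGBA tuples with channels in the 0..1 range. This is a sketch for intuition only, not how the fixed-function hardware is programmed. All function names are hypothetical, and `brighten` stands in for the Double and Quad keywords.

```python
def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def per_channel(fn, *colors):
    """Apply fn to matching channels of RGBA tuples and clamp to 0..1."""
    return tuple(clamp01(fn(*channels)) for channels in zip(*colors))


def multiply(src1, src2):            # combine src1 * src2
    return per_channel(lambda a, b: a * b, src1, src2)


def add(src1, src2):                 # combine src1 + src2
    return per_channel(lambda a, b: a + b, src1, src2)


def subtract(src1, src2):            # combine src1 - src2
    return per_channel(lambda a, b: a - b, src1, src2)


def signed_add(src1, src2):          # combine src1 +- src2
    return per_channel(lambda a, b: a + b - 0.5, src1, src2)


def lerp(src1, src2, src3):          # combine src1 lerp (src2) src3
    alpha = src2[3]                  # src1 when alpha is one, src3 when zero
    return per_channel(lambda a, c: a * alpha + c * (1.0 - alpha), src1, src3)


def brighten(color, factor):         # Double -> factor 2, Quad -> factor 4
    return per_channel(lambda a: a * factor, color)


if __name__ == "__main__":
    grey = (0.5, 0.5, 0.5, 1.0)
    print(multiply(grey, grey), brighten(multiply(grey, grey), 2))
```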

Src

Modifiers

SetTexture [_MainTex] { combine previous * texture, previous + texture }

Here, we multiply the RGB colors and add the alpha.

matrix

matrix [MatrixPropertyName]

constantColor

ConstantColor color

All the src properties can be followed by alpha to take only the alpha channel.

All the src properties, except lerp argument, can optionally be preceded by one - to make the resulting color negated.

The formulas specified above can optionally be followed by the keywords Double or Quad to make the resulting color 2x or 4x as bright.

Constant

Color specified in ConstantColor.

Color of the texture specified by [_TextureName] in the SetTexture (see above).

Primary

Color from the lighting calculation or the vertex color if it is bound.

Previous

The result of the previous SetTexture.

After the render state setup, you can specify a number of textures and their combining modes to apply using SetTexture commands

SetTexture commands have no effect when fragment programs are used; as in that case pixel operations are completely described in the shader.

Fixed Function AlphaTest

AlphaTest (Less | Greater | LEqual | GEqual | Equal | NotEqual | Always) CutoffValue

Turns on alpha testing.

Fixed Function Fog

Fog { Fog Block }

Set fog parameters.

Legacy Fixed Function Shader Commands

ColorMaterial AmbientAndDiffuse | Emission

Uses per-vertex color when computing vertex lighting. See material page for details.

Color Color value


Sets color to use if vertex lighting is turned off.

SeparateSpecular On | Off

Turns separate specular color for vertex lighting on or off. See material page for details.

Material { Material Block }

Defines a material to use in a vertex lighting pipeline. See material page for details.

Lighting On | Off

Turn vertex lighting on or off. See material page for details.

Lighting On

Material { Diffuse [_Color] }

Lighting Model

comp

Emission Color

The color of the object when it is not hit by any light.

Shininess Number

The sharpness of the highlight, between 0 and 1. At 0 you get a huge highlight that looks a lot like diffuse lighting, at 1 you get a tiny speck.

Specular Color

The color of the object's specular highlight.

Ambient Color

This is the color the object has when it's hit by the ambient light set in the RenderSettings.

Diffuse Color

This is an object's base color.

Ambient * RenderSettings ambient setting + (Light Color * Diffuse + Light Color * Specular) + Emission
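Taken literally, the formula above is a per-channel sum. The Python sketch below evaluates it exactly as written for a single light and clamps the result. It is an illustration under that assumption, the function name `vertex_lit` is hypothetical, and any angle-dependent terms of the real lighting model are not represented because the formula in these notes does not spell them out.

```python
def vertex_lit(ambient, scene_ambient, diffuse, specular, emission, light_color):
    """Evaluate, per RGB channel:
    Ambient * scene ambient + (Light * Diffuse + Light * Specular) + Emission
    """
    return tuple(
        min(1.0, a * sa + (lc * d + lc * s) + e)
        for a, sa, d, s, e, lc in zip(
            ambient, scene_ambient, diffuse, specular, emission, light_color
        )
    )


if __name__ == "__main__":
    color = vertex_lit(
        ambient=(1.0, 1.0, 1.0),
        scene_ambient=(0.2, 0.2, 0.2),
        diffuse=(0.5, 0.0, 0.0),
        specular=(0.0, 0.0, 0.0),
        emission=(0.0, 0.0, 0.0),
        light_color=(1.0, 1.0, 1.0),
    )
    print(color)
```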

(Offset)

Offset OffsetFactor, OffsetUnits

// Set Z buffer depth offset.

(ColorMask)

ColorMask RGB | A | 0 | any combination of R, G, B, A

// Set color channel writing mask.

// Writing ColorMask 0 turns off rendering to all color channels. Default mode is writing to all channels (RGBA), but for some special effects you might want to leave certain channels unmodified, or disable color writes completely.

(Blend)

AlphaToMask On | Off

BlendOp colorOp, alphaOp

BlendOp colorOp

Blend sourceBlendMode destBlendMode, alphaSourceBlendMode alphaDestBlendMode

Blend sourceBlendMode destBlendMode

Sets alpha blending mode.

(ZWrite)

ZWrite On | Off

Set depth writing mode.

(ZTest)

ZTest (Less | Greater | LEqual | GEqual | Equal | NotEqual | Always)

Set depth testing mode.

(Cull)

Cull Back | Front | Off

Set polygon culling mode.

A pass sets up various states of the graphics hardware, for example should alpha blending be turned on, should fog be used, and so on.

diagram

Lighting Model diagram

Graphics Hardware diagram

Name and Tags

A Pass can define its Name and arbitrary number of Tags - name/value strings that communicate Pass' intent to the rendering engine.

Tags { "TagName1" = "Value1" "TagName2" = "Value2" }

Shader passes interact with Unity’s rendering pipeline in several ways; for example a pass can indicate that it should only be used for deferred shading using Tags command. Certain passes can also be executed multiple times on the same object; for example in forward rendering the “ForwardAdd” pass type will be executed multiple times, based on how many lights are affecting the object. See Render Pipeline page for details.

There are several special passes available for reusing common functionality or implementing various high-end effects:

UsePass includes named passes from another shader.

GrabPass grabs the contents of the screen into a texture, for use in a later pass.

IgnoreProjector

Tags { "IgnoreProjector"="True" }

If IgnoreProjector tag is given and has a value of "True", then an object that uses this shader will not be affected by Projectors. This is mostly useful on semitransparent objects, because there is no good way for Projectors to affect them.

ForceNoShadowCasting

Tags { "ForceNoShadowCasting"="True" }

If ForceNoShadowCasting tag is given and has a value of "True", then an object that is rendered using this subshader will never cast shadows. This is mostly useful when you are using shader replacement on transparent objects and you do not want to inherit a shadow pass from another subshader.

RenderType

terrain engine

GrassBillboard

terrain engine billboarded grass.

Grass

terrain engine grass.

TreeBillboard

terrain engine billboarded trees.

TreeTransparentCutout

terrain engine tree leaves.

TreeOpaque

terrain engine tree bark.

GUITexture, Halo, Flare shaders.

Skybox shaders.

TransparentCutout

masked transparency shaders (Transparent Cutout, two pass vegetation shaders).

most semitransparent shaders (Transparent, Particle, Font, terrain additive pass shaders).

Opaque

most of the shaders (Normal, Self Illuminated, Reflective, terrain shaders).

Tags { "RenderType"="Ethereal" }

RenderType tag categorizes shaders into several predefined groups, e.g. whether it is an opaque shader, an alpha-tested shader, etc. This is used by Shader Replacement and in some cases used to produce the camera's depth texture.

Queue

predefined

Overlay

this render queue is meant for overlay effects. Anything rendered last should go here (e.g. lens flares).

Transparent

this render queue is rendered after Geometry and AlphaTest, in back-to-front order. Anything alpha-blended (i.e. shaders that don't write to depth buffer) should go here (glass, particle effects).

AlphaTest

alpha tested geometry uses this queue. It's a separate queue from Geometry one since it's more efficient to render alpha-tested objects after all solid ones are drawn.

Geometry

(default) - this is used for most objects. Opaque geometry uses this queue.

Background

this render queue is rendered before any others. It is used for skyboxes and the like.

There are five pre-defined render queues, but there can be more queues in between the predefined ones.

For special uses, in-between queues can be used. Internally each queue is represented by an integer index: Background is 1000, Geometry is 2000, AlphaTest is 2450, Transparent is 3000 and Overlay is 4000. A shader that uses a queue like the one below is rendered after all opaque objects but before transparent objects, because its render queue index is 2001 (Geometry plus one):

Tags { "Queue" = "Geometry+1" }

Tags {"Queue" = "Transparent" }
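The queue arithmetic can be sketched in Python as follows. This models only the index values quoted in these notes and is not Unity's parser. The names `QUEUE_BASE` and `queue_index` are hypothetical, and tolerating spaces around the sign is a convenience of this sketch.

```python
import re

# Integer indices of the predefined queues, as listed in the notes.
QUEUE_BASE = {
    "Background": 1000,
    "Geometry": 2000,
    "AlphaTest": 2450,
    "Transparent": 3000,
    "Overlay": 4000,
}

_PATTERN = re.compile(r"^\s*([A-Za-z]+)\s*(?:([+-])\s*(\d+))?\s*$")


def queue_index(tag_value: str) -> int:
    """Resolve a Queue tag value such as 'Geometry+1' to its integer index."""
    match = _PATTERN.match(tag_value)
    if match is None or match.group(1) not in QUEUE_BASE:
        raise ValueError(f"unrecognized queue: {tag_value!r}")
    name, sign, offset = match.groups()
    base = QUEUE_BASE[name]
    if sign is None:
        return base
    return base + int(offset) if sign == "+" else base - int(offset)


if __name__ == "__main__":
    for tag in ("Geometry+1", "Transparent", "Overlay-1"):
        print(tag, queue_index(tag))
```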

You can determine the order in which your objects are drawn using the Queue tag. A shader decides which render queue its objects belong to; this way, any Transparent shaders make sure they are drawn after all opaque objects, and so on.

Specifies TagName1 to have Value1, TagName2 to have Value2. You can have as many tags as you like.

Tags are basically key-value pairs. Inside a SubShader tags are used to determine rendering order and other parameters of a subshader. Note that the following tags recognized by Unity must be inside SubShader section and not inside Pass!

Each shader in Unity consists of a list of subshaders. When Unity has to display a mesh, it will find the shader to use, and pick the first subshader that runs on the user's graphics card.

Properties { ... }

Properties { Property [Property ...] }

Defines the property block. Inside braces multiple properties are defined as follows.

name ("display name", Range (min, max)) = number

Defines a float property, represented as a slider from min to max in the inspector.

name ("display name", Color) = (number,number,number,number)

Defines a color property.

name ("display name", 2D) = "name" { options }

Defines a 2D texture property.

name ("display name", Rect) = "name" { options }

Defines a rectangle (non power of 2) texture property.

name ("display name", Cube) = "name" { options }

Defines a cubemap texture property.

name ("display name", Float) = number

Defines a float property.

name ("display name", Vector) = (number,number,number,number)

Defines a four component vector property.

type

Float

Vector4

_name ("display name", Vector) = (number,number,number,number)

_name ("display name", Float) = number

_name ("display name", Range (min, max)) = number

Texture

_MainTex ("Base (RGB)", 2D) = "white" {}

Cube

_name ("display name", Cube) = "name" { options }

Rect

_name ("display name", Rect) = "name" { options }

(non power of 2) texture property

2D texture

_name ("display name", 2D) = "name" { options }

options

LightmapMode

If given, this texture will be affected by per-renderer lightmap parameters. That is, the texture to use may come from the settings of the Renderer instead of from the material; see the Renderer scripting documentation.

TexGen

these correspond directly to OpenGL texgen modes

CubeNormal

CubeReflect

SphereMap

EyeLinear

ObjectLinear

Note that TexGen is ignored if custom vertex programs are used

"name"

""

"bump"

"gray"

"black"

"white"

access in Shader

[_MainTex]

Color

_name ("display name", Color) = (number,number,number,number)

_Color ("Main Color", Color) = (1,1,1,1)

Shaders can define a list of parameters to be set by artists in Unity's material inspector. The Properties block in the shader file defines them.

"display name"

The property will show up in the material inspector under its display name

_name

[_name]

Later on in the shader, property values are accessed using the property name in square brackets

In Unity, it's common to start shader property names with an underscore
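To tie the Properties syntax and the square-bracket access together, here is a small sketch of a fixed-function shader. It reuses the `_Color` and `_MainTex` declarations from these notes; the shader name and the choice of a single lit, textured pass are assumptions made for illustration.

```shaderlab
Shader "Example/PropertiesDemo" {
    Properties {
        // name ("display name", type) = default value
        _Color ("Main Color", Color) = (1,1,1,1)
        _MainTex ("Base (RGB)", 2D) = "white" {}
    }
    SubShader {
        Pass {
            // Fixed-function material: the diffuse color is read
            // from the _Color property via square brackets.
            Material {
                Diffuse [_Color]
            }
            Lighting On

            // The texture property is referenced the same way and
            // multiplied with the lit vertex color ("primary").
            SetTexture [_MainTex] {
                Combine texture * primary
            }
        }
    }
}
```

Both properties then appear in the material inspector under their display names, "Main Color" and "Base (RGB)".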

types

fixed function

hardware dependent

glsl/hlsl equivalent

surf(fragment)

vert

vertex structure

appdata_full

vertex consists of position, tangent, normal, two texture coordinates and color

appdata_tan

vertex consists of position, tangent, normal and one texture coordinate.

appdata_base

vertex consists of position, normal and one texture coordinate.

void vert (inout appdata_full v, out Input o) {}
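The `vert` signature above is the form a surface shader uses for a vertex modifier. A minimal sketch, assuming a hypothetical `_Amount` property and shader name, that pushes each vertex along its normal before the surface function runs:

```shaderlab
Shader "Example/VertexModifier" {
    Properties {
        _MainTex ("Base (RGB)", 2D) = "white" {}
        _Amount ("Extrusion Amount", Range (-1, 1)) = 0.1
    }
    SubShader {
        Tags { "RenderType" = "Opaque" }
        CGPROGRAM
        // vertex:vert registers the vertex modifier function.
        #pragma surface surf Lambert vertex:vert

        struct Input {
            float2 uv_MainTex;
        };

        sampler2D _MainTex;
        float _Amount;

        void vert (inout appdata_full v, out Input o) {
            // An 'out' parameter must be fully initialized.
            UNITY_INITIALIZE_OUTPUT(Input, o);
            // Move the vertex along its object-space normal.
            v.vertex.xyz += v.normal * _Amount;
        }

        void surf (Input IN, inout SurfaceOutput o) {
            o.Albedo = tex2D (_MainTex, IN.uv_MainTex).rgb;
        }
        ENDCG
    }
    Fallback "Diffuse"
}
```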

must pipe in render/environment data

image effects/postfx

ssao

bloom/lens flare

tonemapping

lights and shadows

surface shaders

shader

http://forum.unity3d.com/threads/world-space-normal.58810/

Actually, the best method yet is to write this for points:

float3 worldPosition = mul( _Object2World, float4( v.vertex.xyz, 1.0 ) ).xyz;

and this for vectors:

float3 worldNormal = mul( _Object2World, float4( v.normal, 0.0 ) ).xyz;

When you append 1 at the end of a float3, as in (X,Y,Z,1), the multiplication with a matrix adds the translation part of the matrix during the operation, so a point gets rotated, scaled and translated.

When you append 0 at the end of a float3, as in (X,Y,Z,0), the multiplication only rotates and scales the vector; it doesn't translate it. That is what we want for normals. And anyway, it makes no sense to translate a vector: only points can be translated.
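A sketch of the two transforms inside a complete vertex/fragment shader, assuming the built-in names `_Object2World` and `UNITY_MATRIX_MVP` of the Unity versions these notes target (newer versions rename them). The shader name and the normal-as-color output are illustrative. Note that transforming a normal with the object-to-world matrix is only exact when the object's scale is uniform; with non-uniform scale the inverse transpose of the matrix is needed instead.

```shaderlab
Shader "Example/WorldSpaceNormal" {
    SubShader {
        Pass {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            #include "UnityCG.cginc"

            struct v2f {
                float4 pos         : SV_POSITION;
                float3 worldPos    : TEXCOORD0;
                float3 worldNormal : TEXCOORD1;
            };

            v2f vert (appdata_base v) {
                v2f o;
                o.pos = mul (UNITY_MATRIX_MVP, v.vertex);
                // w = 1: a point, so the translation is applied.
                o.worldPos = mul (_Object2World, float4 (v.vertex.xyz, 1.0)).xyz;
                // w = 0: a direction, so the translation is skipped.
                o.worldNormal = mul (_Object2World, float4 (v.normal, 0.0)).xyz;
                return o;
            }

            fixed4 frag (v2f i) : SV_Target {
                // Scaling may have changed the length, so renormalize,
                // then map [-1,1] to [0,1] to display it as a color.
                float3 n = normalize (i.worldNormal);
                return fixed4 (n * 0.5 + 0.5, 1.0);
            }
            ENDCG
        }
    }
}
```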

material

{ "_BackTex", "_BumpMap", "_BumpSpecMap", "_Control", "_DecalTex", "_Detail", "_DownTex", "_FrontTex", "_GlossMap", "_Illum", "_LeftTex", "_LightMap", "_LightTextureB0", "_MainTex", "_ParallaxMap", "_RightTex", "_ShadowOffset", "_Splat0", "_Splat1", "_Splat2", "_Splat3", "_TranslucencyMap", "_UpTex", "_Tex", "_Cube" };

output

Alpha

Gloss

Specular

Emission

Normal

Albedo

input

http://docs.unity3d.com/Manual/SL-SurfaceShaders.html

Surface Shader input structure

The input structure Input generally holds any texture coordinates needed by the shader. Texture coordinates must be named “uv” followed by the texture name (or start with “uv2” to use the second texture coordinate set).

Additional values that can be put into Input structure:

float3 viewDir - will contain view direction, for computing Parallax effects, rim lighting etc.

float4 with COLOR semantic - will contain interpolated per-vertex color.

float4 screenPos - will contain screen space position for reflection or screenspace effects.

float3 worldPos - will contain world space position.

float3 worldRefl - will contain world reflection vector if surface shader does not write to o.Normal. See Reflect-Diffuse shader for example.

float3 worldNormal - will contain world normal vector if surface shader does not write to o.Normal.

float3 worldRefl; INTERNAL_DATA - will contain world reflection vector if surface shader writes to o.Normal.

To get the reflection vector based on per-pixel normal map, use WorldReflectionVector (IN, o.Normal). See Reflect-Bumped shader for example.

float3 worldNormal; INTERNAL_DATA - will contain world normal vector if surface shader writes to o.Normal. To get the normal vector based on per-pixel normal map, use WorldNormalVector (IN, o.Normal).
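As an illustration of the Input structure, the sketch below combines two "uv"-prefixed texture coordinates with `viewDir` to produce rim lighting. The property names `_BumpMap`, `_RimColor` and `_RimPower`, their defaults, and the shader name are assumptions chosen for the example.

```shaderlab
Shader "Example/RimLighting" {
    Properties {
        _MainTex ("Base (RGB)", 2D) = "white" {}
        _BumpMap ("Normal Map", 2D) = "bump" {}
        _RimColor ("Rim Color", Color) = (0.26, 0.19, 0.16, 0.0)
        _RimPower ("Rim Power", Range (0.5, 8.0)) = 3.0
    }
    SubShader {
        Tags { "RenderType" = "Opaque" }
        CGPROGRAM
        #pragma surface surf Lambert

        struct Input {
            float2 uv_MainTex;   // "uv" + texture property name
            float2 uv_BumpMap;
            float3 viewDir;      // filled in by Unity
        };

        sampler2D _MainTex;
        sampler2D _BumpMap;
        float4 _RimColor;
        float _RimPower;

        void surf (Input IN, inout SurfaceOutput o) {
            o.Albedo = tex2D (_MainTex, IN.uv_MainTex).rgb;
            o.Normal = UnpackNormal (tex2D (_BumpMap, IN.uv_BumpMap));
            // Rim term: strongest where the surface faces away from the viewer.
            half rim = 1.0 - saturate (dot (normalize (IN.viewDir), o.Normal));
            o.Emission = _RimColor.rgb * pow (rim, _RimPower);
        }
        ENDCG
    }
    Fallback "Diffuse"
}
```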

COLOR

will contain interpolated per-vertex color

position

worldPos

will contain world space position.

screenPos

will contain screen space position for reflection effects. Used by WetStreet shader in Dark Unity for example.

normal

worldNormal

will contain world normal vector if surface shader does not write to o.Normal

worldRefl

will contain world reflection vector if surface shader does not write to o.Normal

viewDir

will contain view direction, for computing Parallax effects, rim lighting etc.

best option if your shader needs to be affected by lights and shadows

higher level