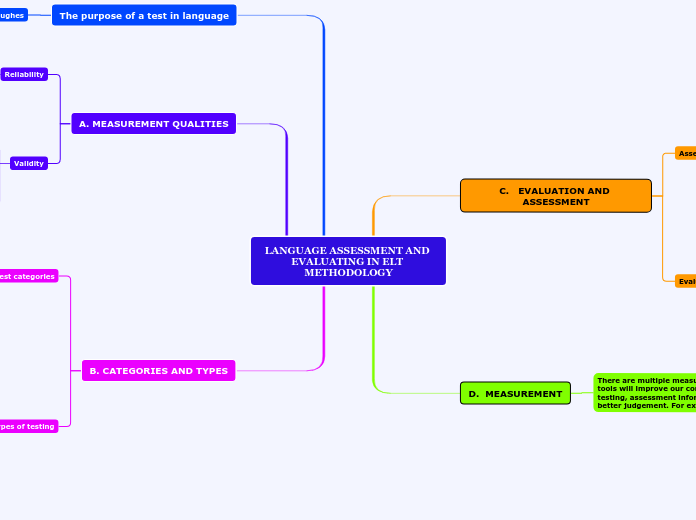

LANGUAGE ASSESSMENT AND EVALUATION IN ELT METHODOLOGY

C. EVALUATION AND ASSESSMENT

Assessment

Assessment is the process of gathering data about a student’s learning process and/or a teacher’s teaching methods. The main types are:

- Assessment of progress: Provides enough information to give feedback, showing whether something is working and what needs to be reinforced.

- Formative assessment: Provides information while learning and teaching are still in progress.

- Summative assessment: Collects information after the process is finished.

- Direct assessment: Observes knowledge directly, using measurable indicators.

- Indirect assessment: Compares one student’s performance with another’s on the basis of assumptions.

- Self-assessment: A teacher or student analyses their own performance and process.

Evaluation

Evaluation is a wider process in which judgements are made using the information collected. Evaluations need to be authentic and must be a collaborative effort between students and teachers.

Evaluation has two purposes:

- For learning

- For development

D. MEASUREMENT

There are multiple measurement tools. These tools improve our understanding of testing and assessment information so that we can make better judgements. For example:

Rubrics: A rubric is a measurement tool that helps you judge a performance against a set of criteria. There are two types of rubric:

- Analytic Rubric: Describes each criterion in detail and gives each criterion its own score. This rubric gives a person a better and clearer understanding of their process and performance by identifying their weaknesses and strengths more specifically.

- Holistic Rubric: Gives one general score covering all the criteria, according to the student's level of achievement. Compared with the analytic rubric, it takes less time and is efficient for large groups. However, it does not collect detailed information.
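The difference between the two rubric types can be sketched in a few lines of Python. This is only an illustration: the criterion names, maximum scores, and level labels below are hypothetical and do not come from the notes.

```python
from dataclasses import dataclass


@dataclass
class AnalyticRubric:
    # Maps each criterion to its maximum score (hypothetical values).
    max_scores: dict

    def score(self, awarded: dict) -> dict:
        """Score each criterion separately and report strengths and weaknesses."""
        if set(awarded) != set(self.max_scores):
            raise ValueError("every criterion needs exactly one score")
        for name, points in awarded.items():
            if not 0 <= points <= self.max_scores[name]:
                raise ValueError(f"score out of range for {name!r}")

        def ratio(name):
            # Share of the available points earned on this criterion.
            return awarded[name] / self.max_scores[name]

        return {
            "per_criterion": dict(awarded),
            "total": sum(awarded.values()),
            "maximum": sum(self.max_scores.values()),
            "weakest": min(awarded, key=ratio),
            "strongest": max(awarded, key=ratio),
        }


# Hypothetical level labels for a holistic rubric.
HOLISTIC_LEVELS = {1: "beginning", 2: "developing", 3: "proficient", 4: "excellent"}


def holistic_score(level: int) -> dict:
    """Return one overall level for the whole performance, with no breakdown."""
    if level not in HOLISTIC_LEVELS:
        raise ValueError("unknown level")
    return {"level": level, "label": HOLISTIC_LEVELS[level]}


rubric = AnalyticRubric({"grammar": 5, "vocabulary": 5, "fluency": 5})
analytic = rubric.score({"grammar": 4, "vocabulary": 2, "fluency": 5})
holistic = holistic_score(3)
```

The analytic result carries a score per criterion, so a weakest and strongest area can be named. The holistic result is a single level, which is quicker to assign but carries no detail.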

The purpose of a language test

According to Hughes, the purpose of a language test is to measure a student's language proficiency and qualities.

A. MEASUREMENT QUALITIES

Reliability

Results must be the same across trials: scores need to be stable, without major changes.

The three aspects of reliability according to Miller are:

- Equivalence

- Stability

- Internal consistency
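The notes do not give a formula for any of these aspects. As a rough sketch, stability is commonly checked by giving the same test to the same students twice and correlating the two sets of scores. The function name and the sample scores below are assumptions made for illustration.

```python
from math import sqrt


def pearson_correlation(first, second):
    """Pearson correlation between two equally long lists of scores."""
    n = len(first)
    if n != len(second) or n < 2:
        raise ValueError("need two score lists of equal length (at least 2)")
    mean_a = sum(first) / n
    mean_b = sum(second) / n
    covariance = sum((a - mean_a) * (b - mean_b) for a, b in zip(first, second))
    spread_a = sum((a - mean_a) ** 2 for a in first)
    spread_b = sum((b - mean_b) ** 2 for b in second)
    if spread_a == 0 or spread_b == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return covariance / sqrt(spread_a * spread_b)


# Hypothetical scores for the same five students on two administrations.
first_sitting = [55, 62, 70, 81, 90]
second_sitting = [57, 60, 72, 80, 91]
stability = pearson_correlation(first_sitting, second_sitting)
```

A value close to 1 suggests that students keep roughly the same relative standing across the two sittings, which is what stable scores would look like.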

Validity

A test needs to measure what it is intended to measure, and there must be enough supporting evidence to demonstrate the test's validity.

There are various types of test validity:

- Content validity

- Predictive validity

- Face validity

- Factorial validity

- Etc...

B. CATEGORIES AND TYPES

Test categories

There are eight categories in total, as classified by Ivanova.

The test categories are:

- According to purpose

- According to test timing

- According to test administration

- According to answer type

- According to decision type

- According to item format type

- According to evaluation method

- According to test quality

Types of testing

There are numerous types of testing. They differ according to the test itself, its purpose, or the author describing them.

Some of these types of testing are:

- Diagnostic tests: Analyse the information to provide a diagnosis of each class.

- Placement test: Students are placed in a certain group according to their test results.

- Progress test: Shows whether a student is acquiring new knowledge.

- Direct test: Targets a particular skill chosen by the teacher.

- Indirect test: Tests the use of language in real-life situations.

- Norm-referenced testing: Measures knowledge and compares it with other participants' results.

- Criterion-referenced tests: Use a score that the student can interpret directly, rather than a percentage.
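The last two entries in the list can be contrasted with a short Python sketch. It assumes that comparison with other participants is expressed as a percentile rank and that a criterion is expressed as a fixed cut score. Both choices, the function names, and the sample numbers are illustrative assumptions, not part of the notes.

```python
def percentile_rank(score, group_scores):
    """Percentage of the group that scored strictly below `score`."""
    if not group_scores:
        raise ValueError("group_scores must not be empty")
    below = sum(1 for other in group_scores if other < score)
    return 100.0 * below / len(group_scores)


def meets_criterion(score, cut_score):
    """True when the score reaches the fixed standard, whatever others scored."""
    return score >= cut_score


# Hypothetical class results and a hypothetical cut score.
class_scores = [50, 60, 70, 80]
student_score = 70

rank = percentile_rank(student_score, class_scores)
passed = meets_criterion(student_score, cut_score=75)
```

The same score of 70 places the student above half of this group, yet it falls short of the cut score of 75. The first reading depends on who else took the test, while the second depends only on the standard.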