by Arlis Tranmer, 3 years ago


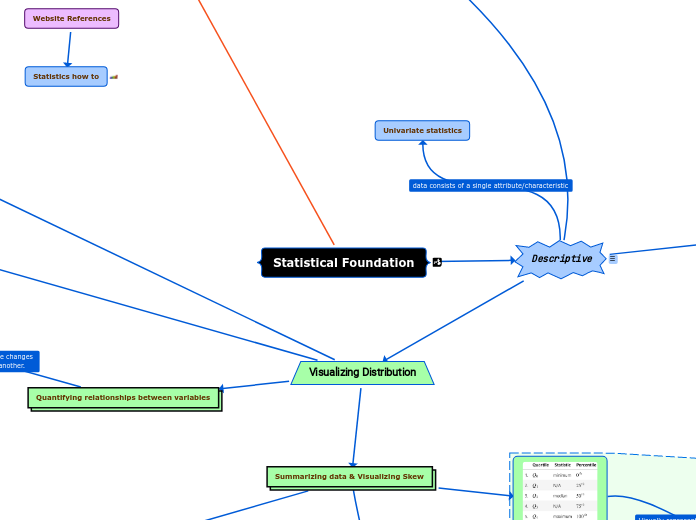

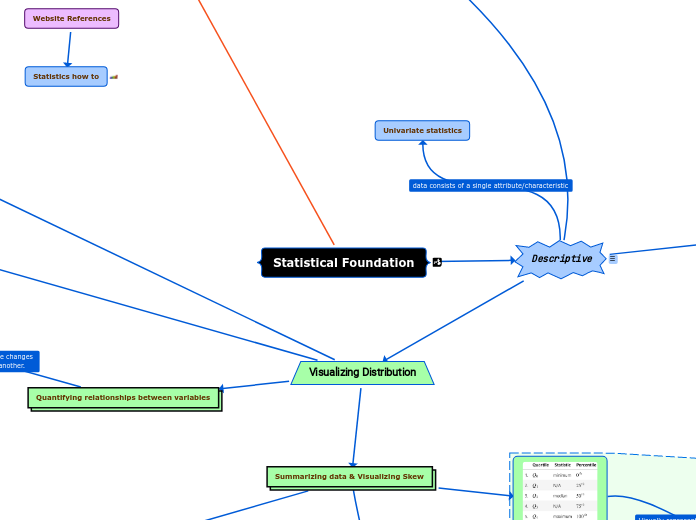

Statistical Foundation

Mind Map of Statistics used in data analysis


Should always be randomly sampled.

Additional Bootstrap Video

StatQuest YouTube Video

describe the sample

Scaling data

In order to compare variables from different distributions we have to scale the data.

There are, of course, additional ways to scale our data, and the one we end up choosing will be dependent on our data and what we are trying to do with it. By keeping the measures of central tendency and measures of dispersion in mind, you will be able to identify how the scaling of data is being done in any other methods you come across.

Z-score

To standardize with the Z-score, subtract the mean from each observation and then divide by the standard deviation.

The resulting data is standardized: it has a mean of 0 and a standard deviation (and variance) of 1.
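The Z-score description above can be sketched with the standard library. The `z_scores` helper and the sample data are made up for illustration, and the sketch assumes the population standard deviation (`pstdev`); using the sample standard deviation instead would give slightly different values.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Subtract the mean from each value, then divide by the standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)  # assumption: population standard deviation
    return [(x - mu) / sigma for x in values]


data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # made-up sample
scaled = z_scores(data)

# After scaling, the mean is (approximately) 0 and the standard deviation 1.
print(mean(scaled), pstdev(scaled))
```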

min-max scaling

Subtract the minimum of the dataset from each data point, then divide by the range. This normalizes the data (scales it to the range [0,1]).
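A minimal sketch of min-max scaling, assuming a plain list of numbers. The `min_max_scale` name and the data are hypothetical, and the zero-range check is an added safeguard rather than part of the definition.

```python
def min_max_scale(values):
    """Scale values to [0, 1]: subtract the minimum, divide by the range."""
    lowest, highest = min(values), max(values)
    value_range = highest - lowest
    if value_range == 0:
        # Every value is identical, so the range is zero and scaling is undefined.
        raise ValueError("cannot min-max scale data with zero range")
    return [(x - lowest) / value_range for x in values]


data = [10, 20, 25, 40]  # made-up sample
scaled = min_max_scale(data)
print(scaled)
```

The smallest value always maps to 0 and the largest to 1, so a single extreme observation can compress all the other values into a narrow band.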

Covariance

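Covariance measures how two variables vary together: $\operatorname{cov}(X, Y) = E[(X - E[X])(Y - E[Y])]$.

E[X] = the expected value of X or the expectation of X. It is calculated by summing all the possible values of X multiplied by their probability - it is the long-run average of X.

The sign of the covariance tells us whether the variables are positively or negatively correlated. Its magnitude is hard to interpret because it depends on the units of both variables.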

Correlation

Pearson correlation coefficient

To find the correlation, we calculate the Pearson correlation coefficient, symbolized by ρ (the Greek letter rho), by dividing the covariance by the product of the standard deviations of the variables: $\rho_{X,Y} = \dfrac{\operatorname{cov}(X, Y)}{\sigma_X \sigma_Y}$

This normalizes the covariance and results in a statistic bounded between -1 and 1, making it easy to describe both the direction of the correlation (sign) and the strength of it (magnitude).
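As a rough illustration, covariance and the Pearson coefficient can be computed by hand. The helper names and data below are made up, and the sketch uses population formulas (dividing by n); because the same divisor appears in the numerator and denominator, the correlation comes out the same with sample formulas.

```python
from math import sqrt
from statistics import mean


def covariance(xs, ys):
    """Population covariance: the mean of (x - mean_x) * (y - mean_y)."""
    mean_x, mean_y = mean(xs), mean(ys)
    return mean([(x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)])


def pearson(xs, ys):
    """Covariance divided by the product of the standard deviations."""
    std_x = sqrt(covariance(xs, xs))  # covariance of a variable with itself is its variance
    std_y = sqrt(covariance(ys, ys))
    return covariance(xs, ys) / (std_x * std_y)


x = [1, 2, 3, 4, 5]
y_up = [2, 4, 6, 8, 10]    # rises with x: perfect positive correlation
y_down = [10, 8, 6, 4, 2]  # falls as x rises: perfect negative correlation

print(pearson(x, y_up), pearson(x, y_down))
```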

Perfect negative correlation [as x increases y decreases]

Perfect positive (linear) correlation [as x increases y increases]

Scatter plot example of correlation between x and y variables.

It is possible that there is another variable Z that causes both X and Y.

While there are many probability distributions, each with specific use cases, there are some that we will come across often. The Gaussian, or normal, looks like a bell curve and is parameterized by its mean (μ) and standard deviation (σ). The standard normal (Z) has a mean of 0 and a standard deviation of 1. Many things in nature happen to follow the normal distribution, such as heights. Note that testing whether a distribution is normal is not trivial—check the Further reading section for more information.

The Poisson distribution is a discrete distribution that is often used to model arrivals. The time between arrivals can be modeled with the exponential distribution. Both are parameterized by the rate, lambda (λ): the mean of the Poisson distribution is λ, while the mean of the exponential distribution is 1/λ. The uniform distribution places equal likelihood on each value within its bounds. We often use this for random number generation. When we generate a random number to simulate a single success/failure outcome, it is called a Bernoulli trial. This is parameterized by the probability of success (p). When we run the same experiment multiple times (n), the total number of successes is then a binomial random variable. Both the Bernoulli and binomial distributions are discrete.

We can visualize both discrete and continuous distributions; however, discrete distributions give us a probability mass function (PMF) instead of a PDF:

Binomial PMF - many Bernoulli trials

Standard Normal (Z)

Bernoulli trial

Uniform distribution

Exponential distribution

Poisson distribution

Gaussian/normal
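To make the link between Bernoulli trials and the binomial distribution concrete, here is a small sketch using only the standard library. The function names, the seed, and the values of n and p are arbitrary choices for illustration.

```python
import random
from math import comb


def bernoulli_trial(p, rng):
    """Return 1 (success) with probability p, otherwise 0 (failure)."""
    return 1 if rng.random() < p else 0


def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent Bernoulli trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


rng = random.Random(0)  # seeded so the simulation is repeatable
n, p = 10, 0.3

# One binomial draw is the number of successes across n Bernoulli trials.
successes = sum(bernoulli_trial(p, rng) for _ in range(n))

# The PMF assigns a probability to every possible count 0..n; they sum to 1.
pmf = [binomial_pmf(k, n, p) for k in range(n + 1)]
print(successes, sum(pmf))
```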

Visualize Skew & Kurtosis

Skew

No Skew

Right (positive) skewed distribution

Left (negative) skewed distribution

Kurtosis

Important note:

There is also another statistic called kurtosis, which compares the density of the center of the distribution with the density at the tails. Both skewness and kurtosis can be calculated with the SciPy package.
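For intuition, skewness and excess kurtosis can be computed from central moments without SciPy. This is a sketch with made-up data and helper names. It uses the simple (biased) moment estimators, which should correspond to the default behavior of `scipy.stats.skew` and `scipy.stats.kurtosis`, though that is worth checking against the SciPy documentation.

```python
from statistics import mean


def central_moment(values, k):
    """Average of (x - mean) ** k over all values."""
    mu = mean(values)
    return sum((x - mu) ** k for x in values) / len(values)


def skewness(values):
    """Third central moment scaled by the standard deviation cubed."""
    return central_moment(values, 3) / central_moment(values, 2) ** 1.5


def excess_kurtosis(values):
    """Fourth central moment scaled by the variance squared, minus 3 (the normal's value)."""
    return central_moment(values, 4) / central_moment(values, 2) ** 2 - 3


symmetric = [1, 2, 3, 4, 5]        # no skew
right_skewed = [1, 1, 2, 2, 3, 10]  # long right tail

print(skewness(symmetric), skewness(right_skewed), excess_kurtosis(symmetric))
```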

Cumulative distribution function (CDF)

Random Variable

Each column in our data is a random variable, because every time we observe it, we get a value according to the underlying distribution—it's not static.

Empirical cumulative distribution function (ECDF)

import numpy as np

import matplotlib.pyplot as plt

x = np.sort(df['column_name'])

y = np.arange(1, len(x) + 1) / len(x)

# By default plt.plot draws lines. To plot unconnected points instead, pass '.' as the marker and 'none' as the linestyle.

_ = plt.plot(x, y, marker='.', linestyle='none')

_ = plt.xlabel('percent of vote for Obama')

_ = plt.ylabel('ECDF')

# To keep data off the plot edges, set the plot margins to 0.02.

plt.margins(0.02)

Cumulative Probability

y-axis = evenly spaced data points with a maximum of 1

y = np.arange(1,len(x)+1) / len(x)

x-axis = the sorted data being measured

import numpy as np

x = np.sort(df['column_name'])

5-number summary

Box plot (or box and whisker plot)

Top line = maximum (top whisker) or 75th percentile (top of the box, Q3)

Tukey box plot

The lower bound of the whiskers will be Q1 – 1.5 * IQR and the upper bound will be Q3 + 1.5 * IQR, which is called the Tukey box plot:
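The whisker bounds can be sketched with `statistics.quantiles`. The data is made up, and the `inclusive` method is an assumption: other quartile conventions give slightly different bounds.

```python
from statistics import quantiles

data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 50]  # made-up sample with one extreme value

# n=4 splits the data into quartiles and returns the three cut points Q1, Q2, Q3.
q1, q2, q3 = quantiles(data, n=4, method="inclusive")
iqr = q3 - q1

# Tukey's rule: the whiskers extend at most 1.5 * IQR beyond the box.
lower_bound = q1 - 1.5 * iqr
upper_bound = q3 + 1.5 * iqr

# Points outside the bounds are drawn individually as potential outliers.
outliers = [x for x in data if x < lower_bound or x > upper_bound]

five_number_summary = (min(data), q1, q2, q3, max(data))
print(five_number_summary, lower_bound, upper_bound, outliers)
```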

Histograms

Important note:

In practice, we need to play around with the number of bins to find the best value. However, we have to be careful as this can misrepresent the shape of the distribution.

Both the KDE and Histogram estimate the distribution.

Discrete variables (people & time)

Rolling a 6-sided die can only result in 6 possible outcomes (1, 2, 3, 4, 5, or 6). This is an example of a discrete variable because it isn't possible to roll a 2.2 or a 3.4. On the other hand, a continuous variable such as height can take any value, including fractions, between two whole numbers. A person's height can be 5'6" or 5'6.3", for example.

Kernel density estimates (KDEs)

KDEs can be used for discrete variables, but it is easy to confuse people that way.

Probability density function (PDF)

The PDF tells us how probability is distributed over the values (higher values = higher likelihood).

Continuous variables (Heights or time)

Range

Gives us the dispersion of the entire dataset.

Downfall: it depends only on the two most extreme values, so it is very sensitive to outliers.

r = max(salaries) - min(salaries)

print(f"range = {r}")

Output:

range = 995000.0

Interquartile range (IQR)

Quartile coefficient of dispersion

It is calculated by dividing the semi-quartile range (half the IQR) by the midhinge (midpoint between the first and third quartiles):
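A short sketch of that calculation, assuming quartiles from `statistics.quantiles` with the `inclusive` method. The function name and data are hypothetical. Since the factor of one half cancels, the result equals (Q3 - Q1) / (Q3 + Q1).

```python
from statistics import quantiles


def quartile_coefficient_of_dispersion(values):
    """Semi-quartile range divided by the midhinge."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    semi_quartile_range = (q3 - q1) / 2  # half the IQR
    midhinge = (q1 + q3) / 2             # midpoint between Q1 and Q3
    return semi_quartile_range / midhinge


data = [1, 2, 3, 4, 5, 6, 7, 8, 9]  # made-up sample
qcd = quartile_coefficient_of_dispersion(data)
print(qcd)
```

Like the coefficient of variation, this statistic is unitless, so it can be used to compare the dispersion of datasets measured in different units.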

Quantiles (25%, 50%, 75%, and 100%)

Variance

The variance describes how far apart observations are spread out from the average value (the mean). The population variance is denoted as σ² (pronounced sigma-squared), and the sample variance is written as s². It is calculated as the average squared distance from the mean. Note that the distances must be squared so that distances below the mean don't cancel out those above the mean.

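from statistics import mean, variance

# mean with the statistics module

mean(salaries)

# mean without the statistics module

u = sum(salaries) / len(salaries)

# sample variance without the statistics module (divides by n - 1)

sum([((x - u)**2) for x in salaries]) / (len(salaries) - 1)

# sample variance with the statistics module

variance(salaries)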

Standard Deviation

We can use the standard deviation to see how far from the mean data points are on average. A small standard deviation means that values are close to the mean, while a large standard deviation means that values are dispersed more widely. This is tied to how we would imagine the distribution curve: the smaller the standard deviation, the thinner the peak of the curve (0.5); the larger the standard deviation, the wider the peak of the curve (2):

The standard deviation is simply the square root of the variance. By performing this operation, we get a statistic in units that we can make sense of again ($ for our income example):

Note that the population standard deviation is represented as σ, and the sample standard deviation is denoted as s.

import math

from statistics import stdev, variance

u = sum(salaries) / len(salaries)

# sample variance without the statistics module

sum([((x - u)**2) for x in salaries]) / (len(salaries) - 1)

# sample variance with the statistics module

variance(salaries)

# sample standard deviation without the statistics module

math.sqrt(sum([((x - u)**2) for x in salaries]) / (len(salaries) - 1))

# sample standard deviation with the statistics module

stdev(salaries)

Coefficient of Variation (CV)

cv = stdev(salaries) / mean(salaries)

print(f"The coefficient of variation = {cv}")

Output:

The coefficient of variation = 0.45386998894439035

Comparing between parameters using relative units such as Celsius and Fahrenheit.

Celsius and Fahrenheit are two different scales that measure the same thing. Both have arbitrary zero points, so their readings are relative values, not absolute values.

Freezing temperature:

Celsius = 0

Fahrenheit = 32

Kelvin uses a thermometric scale that reads zero for the theoretical absolute zero.

The problem is that the CV divides by the mean, which on these scales is a relative value rather than an absolute one.

Comparing the same data set, now in absolute units:

Kelvin: [273.15, 283.15, 293.15, 303.15, 313.15]

Rankine: [491.67, 509.67, 527.67, 545.67, 563.67]
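The effect of relative versus absolute units can be checked directly. This sketch uses the Kelvin and Rankine values listed above. The Celsius and Fahrenheit lists are not given in these notes; they are derived here by subtracting each scale's offset (273.15 and 459.67), and the helper name is made up.

```python
from math import isclose
from statistics import mean, stdev


def coefficient_of_variation(values):
    """Ratio of the sample standard deviation to the mean."""
    return stdev(values) / mean(values)


kelvin = [273.15, 283.15, 293.15, 303.15, 313.15]
rankine = [491.67, 509.67, 527.67, 545.67, 563.67]

# Derived (assumption): the same temperatures on the two relative scales.
celsius = [k - 273.15 for k in kelvin]
fahrenheit = [r - 459.67 for r in rankine]

cv_kelvin = coefficient_of_variation(kelvin)
cv_rankine = coefficient_of_variation(rankine)
cv_celsius = coefficient_of_variation(celsius)
cv_fahrenheit = coefficient_of_variation(fahrenheit)

# The absolute scales agree with each other; the relative scales do not.
print(isclose(cv_kelvin, cv_rankine, rel_tol=1e-9))
print(isclose(cv_celsius, cv_fahrenheit, rel_tol=1e-9))
```

Kelvin and Rankine differ only by a multiplicative factor, which cancels in the ratio. Celsius and Fahrenheit also differ by an offset, which shifts the mean but not the standard deviation, so the ratio changes.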

Compare volatility or risk with the amount of return expected from investments.

CV is the ratio of the standard deviation to the mean.

The problem of comparing two datasets with different units

Tip: The i-th percentile is the value below which i% of the observations fall, so the 99th percentile is the value in X where 99% of the x's are less than it.

multimodal = many modes

bimodal = 2 modes

Unimodal = 1 mode

from statistics import median

median(salaries)

If n is even
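When n is even there is no single middle value, so the median is taken as the mean of the two middle values. A minimal sketch of both cases, with a hypothetical `manual_median` helper and made-up data, compared against `statistics.median`:

```python
from statistics import median


def manual_median(values):
    """Middle value of the sorted data; for even n, the mean of the two middle values."""
    ordered = sorted(values)
    n = len(ordered)
    mid = n // 2
    if n % 2 == 1:
        return ordered[mid]  # odd n: a single middle value exists
    return (ordered[mid - 1] + ordered[mid]) / 2  # even n: average the middle pair


odd_sample = [3, 1, 2]
even_sample = [4, 1, 3, 2]

print(manual_median(odd_sample), manual_median(even_sample))
print(median(odd_sample), median(even_sample))
```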

sample mean