By Mike Ton, 4 years ago

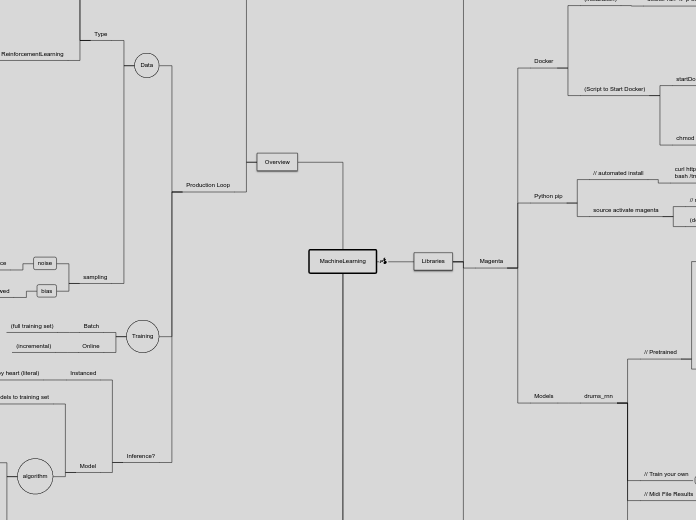

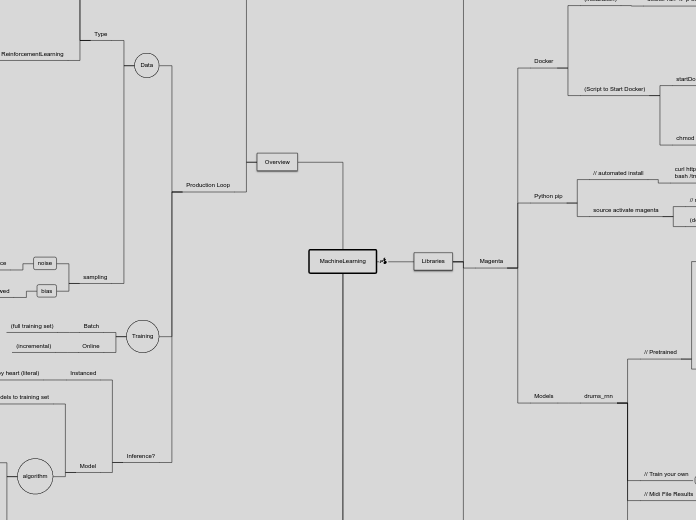

MachineLearning

The term broadcasting describes how numpy treats arrays with different shapes during arithmetic operations. Subject to certain constraints, the smaller array is “broadcast” across the larger array so that they have compatible shapes. Broadcasting provides a means of vectorizing array operations so that looping occurs in C instead of Python. It does this without making needless copies of data and usually leads to efficient algorithm implementations. There are, however, cases where broadcasting is a bad idea because it leads to inefficient use of memory that slows computation.
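A minimal sketch of the idea above, assuming a (3, 4) matrix and a length-4 row vector chosen purely for illustration. It compares the broadcast addition with an explicit Python loop, then shows one case where broadcasting is costly because the result is a full (n, n) array.

```python
import numpy as np

# Illustrative data (not from the notes): shapes (3, 4) and (4,).
matrix = np.arange(12, dtype=float).reshape(3, 4)
row = np.array([10.0, 20.0, 30.0, 40.0])

# The row is broadcast across each of the 3 rows; the loop runs in C.
broadcast_sum = matrix + row

# Equivalent explicit Python loop, shown only for comparison.
looped_sum = np.empty_like(matrix)
for i in range(matrix.shape[0]):
    looped_sum[i] = matrix[i] + row

print(broadcast_sum.shape)  # (3, 4)

# Memory-hungry case: an (n, 1) column times an (n,) row
# materializes a full (n, n) result.
n = 1000
col = np.arange(float(n)).reshape(-1, 1)
outer = col * np.arange(float(n))
print(outer.shape)  # (1000, 1000)
```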

.shape

np.array?

# Documentation

calculus

linear algebra

stats

vocabulary

distribution

Normal

Gaussian

z-scores

deviation

Deviation is the tendency of outcomes to differ from the expected value

It allows one to quantify how much the outcomes of a probability experiment tend to differ from the expected value

variations

Variance is a statistic that is used to measure deviation in a probability distribution

Probability experiments that have outcomes that are close together will have a small variance

Probability distributions that have outcomes that vary wildly will have a large variance

Studying variance allows one to quantify how much variability is in a probability distribution
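The variance notes above can be sketched as follows. The two outcome lists are made-up examples, and the population variance (dividing by the number of outcomes) is assumed here; a sample variance would divide by one less.

```python
import statistics

# Made-up outcomes: one set close together, one that varies widely.
tight = [9.0, 10.0, 10.0, 11.0]
spread = [0.0, 5.0, 15.0, 20.0]

def population_variance(outcomes):
    """Mean squared deviation from the expected value (the mean)."""
    mean = sum(outcomes) / len(outcomes)
    return sum((x - mean) ** 2 for x in outcomes) / len(outcomes)

tight_var = population_variance(tight)    # 0.5
spread_var = population_variance(spread)  # 62.5

# The standard library gives the same population variance.
print(tight_var, statistics.pvariance(tight))
print(spread_var, statistics.pvariance(spread))
```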

means

Logistic regression

Cost function

(measures full training set)

Loss function

(single training example)

want y^ = y (from training data)

L(y^, y)

y^

sigmoid(z)

1/(1+e^(-z))

large negative z approaches 0

large positive z approaches 1

z = (w^T)x + b

In statistics, logistic regression, or logit regression, or logit model[1] is a regression model where the dependent variable (DV) is categorical

binary dependent variable

output = 0 or 1
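A minimal sketch of the logistic regression pieces listed above: the sigmoid, the prediction y^ = sigmoid((w^T)x + b), a loss for a single example, and a cost averaged over the training set. The notes do not say which loss L(y^, y) is used; the cross-entropy (log) loss below is an assumption, and the weights and examples are made up.

```python
import math

def sigmoid(z):
    """1 / (1 + e^(-z)): near 0 for large negative z, near 1 for large positive z."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, x, b):
    """y^ = sigmoid((w^T)x + b)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)

def loss(y_hat, y):
    """Loss on a single training example (cross-entropy, assumed)."""
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))

def cost(w, b, examples):
    """Cost over the full training set: the mean of the per-example losses."""
    return sum(loss(predict(w, x, b), y) for x, y in examples) / len(examples)

# Made-up training data: (features, label) pairs with labels 0 or 1.
examples = [([3.0], 1), ([-3.0], 0)]
good_cost = cost([2.0], 0.0, examples)   # predictions close to the labels
bad_cost = cost([-2.0], 0.0, examples)   # predictions far from the labels
print(good_cost, bad_cost)
```

The cost is lower when y^ is close to y, which is what training aims for.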

Gradient Descent

Neuron

Layers

[2]

Output

[1]

(vector)

Hidden

Needs to be calculated through iteration and learning

[0]

(scalar)

Input

Training Data

Types

Unstructured

text

features = characters

images

features = pixel

raw audio

features = wave

Structured

Home

Price

Rooms

Size

(features have labels/meaning)

Recurrent Network

English to Chinese

Audio To Text

(1 dimensional stream)

Convolution Network

image/vision

Neural Network

Ad/Clicks

House Feature/Price

(categorization == prediction)

Unsupervised

Model

algorithm

underfit

too simple

overfit

too complex

regularization

hyperparameters

// parameter of training algorithm not training data

// stays constant during training

// must be set prior to training

// reduce model risk of overfitting

// simplifying

// constraining
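One way to picture regularization as "constraining" a model is ridge (L2) regression, sketched below. Ridge is an illustrative choice, not something the notes name. The data is synthetic, and `alpha` plays the role of a hyperparameter: it is set before training and stays constant while the weights are fitted.

```python
import numpy as np

# Synthetic data (assumed for illustration): 20 examples, 5 features.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 5))
true_w = np.array([1.0, -2.0, 0.5, 0.0, 3.0])
y = X @ true_w + rng.normal(scale=0.1, size=20)

def ridge_fit(X, y, alpha):
    """Closed-form ridge solution: solve (X^T X + alpha * I) w = X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

w_unregularized = ridge_fit(X, y, alpha=0.0)   # ordinary least squares
w_regularized = ridge_fit(X, y, alpha=10.0)    # weights pulled toward zero

print(np.linalg.norm(w_unregularized), np.linalg.norm(w_regularized))
```

A larger `alpha` shrinks the weight vector, which gives a simpler, more constrained model.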

Tunes parameters and fits models to training set

Presumably the resulting model generalizes to the production set

Instance-based

Learns examples by heart (literal)

Generalizes by comparing new instances to existing ones

Online

(incremental)

Batch

(full training set)

sampling

nonrepresentative data because the sampling method is flawed

noise

nonrepresentative data as a result of chance

not enough data

Type

ReinforcementLearning

Agent learns by trial and error

Examples

Product Delivery

Delivery Fleet Management

late delivery

delivered on time

Marketing

Cost of Mailing campaign

Estimated revenue from campaign

Music Personalization

reward

-

skips

--

closes app/leaves

+

listens to

++

ad

song

Not explicitly given "right" answer

Must balance

Exploiting sources of rewards it already knows

Looking for new ways to get reward

Exploring the environment
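The balance between exploiting known rewards and exploring can be sketched with an epsilon-greedy agent on a multi-armed bandit. This is one common illustration, not a method the notes prescribe. The arm means, step count, and `epsilon` value are all assumptions.

```python
import random

def epsilon_greedy(true_means, steps=5000, epsilon=0.1, seed=0):
    """Pull bandit arms, exploring with probability epsilon, else exploiting."""
    rng = random.Random(seed)
    counts = [0] * len(true_means)
    estimates = [0.0] * len(true_means)
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random arm to look for new sources of reward.
            arm = rng.randrange(len(true_means))
        else:
            # Exploit: pick the arm with the best estimated reward so far.
            arm = max(range(len(true_means)), key=lambda a: estimates[a])
        reward = rng.gauss(true_means[arm], 1.0)  # noisy reward, no "right" answer given
        counts[arm] += 1
        # Incremental mean update of the reward estimate for this arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return counts, estimates

# Hypothetical arms, e.g. three songs with different average listener reward.
counts, estimates = epsilon_greedy([0.0, 0.5, 2.0])
print(counts, estimates)
```

With `epsilon=0` the agent can lock onto the first arm that looks good, which is the localized-learning bias described in these notes.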

bias

When the agent remains in the same region of an environment and all its learning is localized

Buffer past experiences further back than immediate experience

Supervision via rewards

Can't use a loss function/gradient descent solution

Creating agents that maximize rewards in an environment over time

NonSupervised

Supervised

explicitly coded rules

machines improving at a task by learning from data

drums_rnn

// Play Midi

timidity yourfile.mid

brew install timidity;

// Midi File Results

drums_rnn/generated

...

2017-05-28_145521_01.mid

// Train your own

// Creating a Bundle File

// Generate Drum Tracks

// Train and Evaluate the Model

// Create SequenceExamples

// To extract drum tracks from our NoteSequences and save them as SequenceExamples

(output)

/tmp/drums_rnn/sequence_examples

training_drum_tracks.tfrecord

eval_drum_tracks.tfrecord

--eval_ratio=0.10

--output_dir=/tmp/drums_rnn/sequence_examples \

--input=/tmp/notesequences.tfrecord \

--config=

drums_rnn_create_dataset \

// Two collections of SequenceExamples will be generated

evaluation

--eval_ratio = 0.10

// 90% will be saved in the training collection

// 10% of the extracted drum tracks will be saved in the eval collection

// fraction of SequenceExamples in the evaluation set is determined by --eval_ratio

training
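The effect of --eval_ratio can be sketched in plain Python. This is only an illustration of a ratio-based split, assuming each item is assigned at random; it is not Magenta's actual implementation, and `split_by_eval_ratio` is a hypothetical helper.

```python
import random

def split_by_eval_ratio(examples, eval_ratio=0.10, seed=0):
    """Send roughly eval_ratio of the examples to evaluation, the rest to training."""
    rng = random.Random(seed)
    training, evaluation = [], []
    for example in examples:
        if rng.random() < eval_ratio:
            evaluation.append(example)
        else:
            training.append(example)
    return training, evaluation

# Stand-in "drum tracks": integers instead of real SequenceExamples.
training, evaluation = split_by_eval_ratio(list(range(1000)))
print(len(training), len(evaluation))
```

Under this random assignment the split is only approximately 90/10 for any given run.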

// Each SequenceExample will contain a sequence of

labels

inputs

// represent a drum track

// Create NoteSequences

/tmp/notesequences.tfrecord

// NoteSequences are protocol buffers

// fast and efficient data format

// easier to work with than MIDI files

// convert a collection of MIDI files into NoteSequences

// Pretrained

// Generate a drum track

(cmd)

--primer_drums="[(36,)]"

--num_steps=128 \

--num_outputs=10 \

--output_dir=/tmp/drums_rnn/generated \

--bundle_file=${BUNDLE_PATH} \

--config=${CONFIG} \

drums_rnn_generate \

CONFIG=

BUNDLE_PATH=

drum_kit.mag

source activate magenta

(deactivate)

conda remove -n magenta --all

// Uninstall the environment

rm -r ~/miniconda2

// For complete uninstall, remove the installed anaconda directory:

source deactivate magenta

// deactivate environment

// run to use Magenta every time you open a new terminal window

// automated install

curl https://raw.githubusercontent.com/tensorflow/magenta/master/magenta/tools/magenta-install.sh > /tmp/magenta-install.sh

bash /tmp/magenta-install.sh

docker run -it -p 6006:6006 -v /tmp/magenta:/magenta-data tensorflow/magenta

docker pull tensorflow/magenta

// update the image to the latest version

keyshortCuts

Shift+Enter = runCell

Tab = AutoCompletion

Shift+Tab = ListParameters

Tutorial

1_hello_tensorflow

with tf.Session():

(constants)

result = output.eval()

// eval() is also slightly more complicated than it looks.

// So, this is the point where the addition is actually performed, not when add was called, as add just put the addition operation into the TensorFlow computation graph.

// It's important to realize it also runs the computation graph at this point, because we demanded the output from the operation node of the graph; to produce that, it had to run the computation graph.

// It does get the value of the vector (tensor) that results from the addition. It returns this as a numpy array, which can then be printed.

output = tf.add(input1, input2)

// You might think add just adds the two vectors now, but it doesn't quite do that. What it does is put the add operation into the computational graph. The results of the addition aren't available yet. They've been put in the computation graph, but the computation graph hasn't been executed yet.

input2 = tf.constant([2.0, 2.0, 2.0, 2.0])

input1 = tf.constant([1.0, 1.0, 1.0, 1.0])

// tf.constant creates a tensor of the necessary shape and fills it with the provided values

(numpy)

import numpy as np
x, y = np.full(4, 1.0), np.full(4, 2.0)
print("{} + {} = {}".format(x, y, x + y))

(raw)

print([x + y for x, y in zip([1.0] * 4, [2.0] * 4)])

similar to numpy's array and numpy's full

(context)

Deferring the execution like this provides additional opportunities for parallelism and optimization, as TensorFlow can decide how to combine operations and where to run them after TensorFlow knows about all the operations.

When you create tensors and operations, they are not executed immediately, but wait for other operations and tensors to be added to the graph, only executing when finally requested to produce the results of the session.

When you run an operation in TensorFlow, you need to do it in the context of a Session. A session holds the computation graph, which contains the tensors and the operations.
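The deferred execution described above can be mimicked with a toy graph in plain Python. This is not TensorFlow's API: the `Constant` and `Add` classes are hypothetical stand-ins that only show that building a node does no arithmetic until `eval()` is requested.

```python
class Constant:
    """Toy graph node holding fixed values (stand-in for a constant tensor)."""
    def __init__(self, values):
        self.values = list(values)

    def eval(self):
        return self.values


class Add:
    """Toy graph node: records its inputs now, adds them only on eval()."""
    def __init__(self, left, right):
        self.left, self.right = left, right
        self.evaluated = False

    def eval(self):
        # The addition actually happens here, when the output is demanded.
        self.evaluated = True
        return [a + b for a, b in zip(self.left.eval(), self.right.eval())]


input1 = Constant([1.0] * 4)
input2 = Constant([2.0] * 4)
output = Add(input1, input2)                 # only builds the graph
was_computed_before_eval = output.evaluated  # False: nothing has run yet
result = output.eval()                       # runs the graph
print(was_computed_before_eval, result)
```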

import tensorflow as tf

flow

The "flow" part of the name refers to computation flowing through a graph.

Training and inference in a neural network, for example, involves the propagation of matrix computations through many nodes in a computational graph.

tensors

arrays of arbitrary dimensionality.

A matrix is a 2-d array and a 2nd-order tensor.

A vector is a 1-d array and is known as a 1st-order tensor.
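The tensor orders above can be checked with numpy, where `ndim` reports the number of dimensions. The arrays are illustrative, and numpy is used here instead of TensorFlow only to keep the sketch light.

```python
import numpy as np

scalar = np.array(5.0)                        # 0-d array
vector = np.array([1.0, 2.0, 3.0])            # 1-d array, 1st-order tensor
matrix = np.array([[1.0, 2.0], [3.0, 4.0]])   # 2-d array, 2nd-order tensor
cube = np.zeros((2, 3, 4))                    # 3-d array, arbitrary dimensionality

ranks = [t.ndim for t in (scalar, vector, matrix, cube)]
print(ranks)  # [0, 1, 2, 3]
```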

# This import brings TensorFlow's public API into our IPython runtime environment.

Pycharm

(Script to Start Docker)

chmod +x startDocker.sh

// Make your script executable:

startDocker.sh

Make a note of the URL that is given.

http://localhost:8888/?token=f9b7564b536abd483bdb29cff2dd4a57381f367f58c05a5d

http://localhost:8888/tree?token=someLongSeriesOfHexadecimalDigits

#! /bin/bash

(installation)

docker run -it -p 8888:8888 gcr.io/tensorflow/tensorflow

This will download tensorflow and will take a few minutes (~ 8 minutes)