Major Processing

Text Normalization

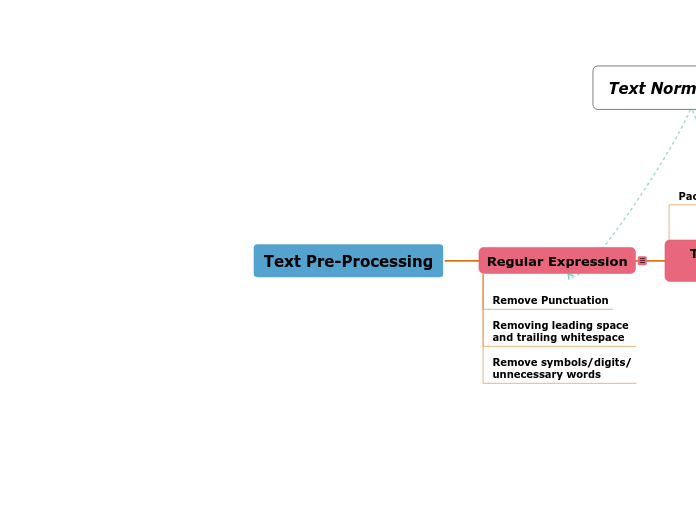

Text Pre-Processing

Sentiment Score

Description:

Calculates a sentiment score for the text based on how the individual words in each sentence are categorized

Issue:

- The current packages can only be used for English text

- May not score some texts accurately

Library/Package

textblob

nltk.sentiment.vader
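
To illustrate the idea of scoring text from categorized words, here is a minimal sketch using only the standard library. The `LEXICON` and the `sentiment_score` function are hypothetical and made up for this example; they are not the lexicon or API of textblob or nltk.sentiment.vader, which use much larger, weighted word lists and extra rules.

```python
import re

# Hypothetical toy lexicon: word -> polarity (+1 positive, -1 negative).
# Real packages ship far larger, weighted lexicons.
LEXICON = {"good": 1, "great": 1, "love": 1, "bad": -1, "poor": -1, "hate": -1}


def sentiment_score(text):
    """Return the average polarity of the words found in the lexicon.

    Returns 0.0 when no word of the text is in the lexicon.
    """
    words = re.findall(r"[a-z']+", text.lower())
    hits = [LEXICON[w] for w in words if w in LEXICON]
    return sum(hits) / len(hits) if hits else 0.0


print(sentiment_score("The service was great and I love it"))
print(sentiment_score("not good"))       # negation is ignored by this sketch
print(sentiment_score("Bagus sekali"))   # non-English words are not in the lexicon
```

The last two calls mirror the issues listed above: a purely word-based score misses negation, and words outside an English lexicon contribute nothing.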

Remove Stopword

Description:

Remove the common words in the text, such as is, are, be, the, and, etc.

Issue:

- Removing these words might distort the actual context

- The words removed depend on the pre-trained model of the library
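
A minimal sketch of stopword removal, assuming a small hand-picked `STOPWORDS` set (hypothetical; each library ships its own, longer list). It also shows how dropping a word like "not" can change the meaning of what remains.

```python
# Hypothetical, hand-picked stopword list for illustration only.
STOPWORDS = {"is", "are", "be", "the", "and", "a", "not"}


def remove_stopwords(text):
    """Lowercase the text, split on whitespace, and drop stopwords."""
    return [w for w in text.lower().split() if w not in STOPWORDS]


print(remove_stopwords("The product is not good"))
print(remove_stopwords("The cat and the dog are friends"))
```

In the first call the negation is removed together with the other common words, so the remaining tokens read as positive. Whether "not" should stay depends on the analysis, so the list may need adjusting.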

Stemming/Lemmatize

Description:

Transform each word in the text into its base form/root word

Issue:

- Highly dependent on the pre-trained model that is available in the library

- Some words are not available in the model

Important:

- Avoids word redundancy (WordCloud, BoW, TF-IDF)
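
The effect on word redundancy can be sketched with a deliberately naive suffix stripper. This is not the algorithm used by any library stemmer or lemmatizer. `naive_stem`, `SUFFIXES`, and `bag_of_words` are hypothetical names used only to show how inflected forms collapse into one bag-of-words entry.

```python
from collections import Counter

# Suffixes are checked in this order; a crude stand-in for a real stemmer.
SUFFIXES = ("ing", "ed", "es", "s")


def naive_stem(word):
    """Strip the first matching suffix if at least 3 characters remain."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def bag_of_words(text, stem=False):
    """Count words, optionally after stemming them."""
    words = text.lower().split()
    if stem:
        words = [naive_stem(w) for w in words]
    return Counter(words)


text = "walk walks walked walking"
print(len(bag_of_words(text)))          # four separate entries
print(bag_of_words(text, stem=True))    # one entry counted four times
print(naive_stem("went"))               # irregular form is left unchanged
```

Irregular forms such as "went" are not reduced by suffix rules, which is the kind of coverage gap noted under Issue.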

Part of Speech Tagging

Description:

Assign a part of speech (noun, verb, adjective, and others) to each word of a given text based on its definition and its context

Issue:

- The tags are based on the pre-trained model of the library

- Some of the words in our text need to be updated in the model

Named Entity Recognition (NER)

Description:

Aims to find named entities in the text and classify them into pre-defined categories (names of persons, locations, organizations, times, etc.)

Issue:

- Some of the words in our text might not be detected

- The model of the library/package used needs to be updated

Tokenize

Description:

Split the text into single word chunks (tokens)

Issue:

- Tokenizing can distort the meaning of the context

- Need to consider the n-gram size of the tokens used (max 3)

n-gram

trigram

bigram

unigram
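
A minimal sketch of building unigrams, bigrams, and trigrams from whitespace tokens. The `ngrams` helper is a hypothetical name written for this example; whitespace splitting stands in for a proper tokenizer.

```python
def ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


tokens = "the food was not good".split()

unigrams = ngrams(tokens, 1)
bigrams = ngrams(tokens, 2)
trigrams = ngrams(tokens, 3)

print(unigrams)
print(bigrams)
print(trigrams)
```

As unigrams, "not" and "good" are separate tokens and the negation is lost. The bigram ("not", "good") keeps them together, which is why the n-gram size matters for context.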

Translate & Detect Language

Description:

Detect the language of the text and translate it into a common language, which is English

Issue:

- Need a package/library that supports multiple languages

- Should be open source and free to use without limitation (TBC)

- Not able to detect and translate the words accurately if the text is written informally

Important:

- Avoids word redundancy

- Helps in analyzing the context

Package/Library

deep_translator

translate

Issue:

The number of words that can be translated per day is limited

Regular Expression

Description:

Issue:

- Different texts contain different expressions

- Need to form a general pattern that can be used to remove unnecessary expressions from every text

Important:

- Extracts the main words needed for analysis

Remove symbols/digits/unnecessary words

Remove leading and trailing whitespace

Remove Punctuation
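
The three clean-up steps above could be combined into one general pattern along these lines. This is a sketch under assumptions: `clean_text` is a hypothetical helper, URLs are treated as the "unnecessary words", and only ASCII punctuation from `string.punctuation` is removed. Real text will likely need more patterns.

```python
import re
import string


def clean_text(text):
    """Remove URLs, digits, and ASCII punctuation, then tidy whitespace."""
    text = re.sub(r"https?://\S+", " ", text)   # URLs (assumed unnecessary)
    text = re.sub(r"\d+", " ", text)            # digits
    # Delete ASCII punctuation and symbols such as # ! , .
    text = text.translate(str.maketrans("", "", string.punctuation))
    text = re.sub(r"\s+", " ", text)            # collapse repeated whitespace
    return text.strip()                         # leading/trailing whitespace


print(clean_text("  Order #123 arrived late!!! See https://example.com  "))
print(clean_text("Hello, world!"))
```

Because punctuation is deleted rather than replaced by a space, a word such as "don't" becomes "dont". Whether that is acceptable depends on the later steps.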