
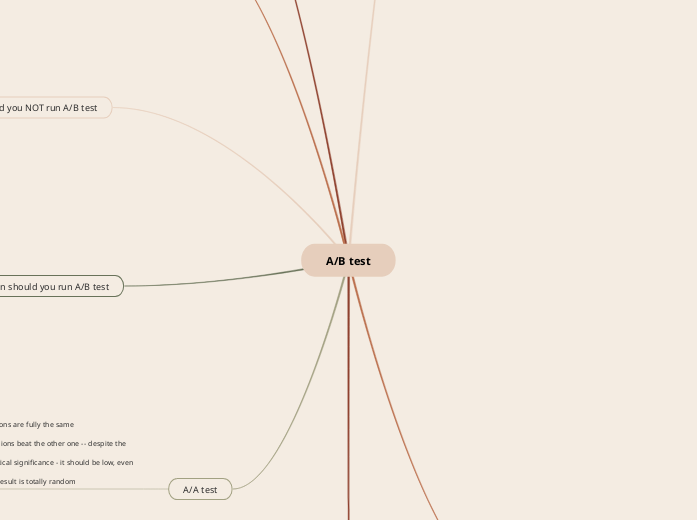

A/B test

A/A test

- Like an A/B test, except both versions are exactly the same

- Why is this useful?

- Because you'll often see one version "beat" the other, even though they are identical

- Check the statistical significance: it should stay low (below your significance threshold), even if the difference in the metric looks large

- Here we know for a fact that the result is totally random
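A minimal simulation of an A/A test. It assumes a pooled two-proportion z-test, which is one common way tools compute significance for conversion rates. The 5% conversion rate and 5,000 visitors per version are hypothetical. Both versions share the same true rate, so any "winner" is noise. At a 0.05 threshold, roughly 1 in 20 A/A tests will still look significant purely by chance.

```python
import math
import random


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions (or only conversions) in both groups: no evidence
    z = (conv_b / n_b - conv_a / n_a) / se
    # P(|Z| >= |z|) for a standard normal Z
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa_test(true_rate: float, visitors_per_arm: int, seed: int = 42):
    """Both versions share the same true conversion rate, so any gap is pure noise."""
    rng = random.Random(seed)
    conv_a = sum(rng.random() < true_rate for _ in range(visitors_per_arm))
    conv_b = sum(rng.random() < true_rate for _ in range(visitors_per_arm))
    p = two_proportion_p_value(conv_a, visitors_per_arm, conv_b, visitors_per_arm)
    return conv_a, conv_b, p


if __name__ == "__main__":
    a, b, p = simulate_aa_test(true_rate=0.05, visitors_per_arm=5_000)
    leader = "A" if a > b else "B" if b > a else "neither"
    print(f"A: {a}, B: {b} conversions; 'winner': {leader}; p-value: {p:.3f}")
```

Re-running with different seeds usually shows a different "winner" each time. The p-value only rarely drops below 0.05.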

When should you run an A/B test?

When you start something new

When optimizing the first-time user experience

For higher-priced products (where users make rational, considered decisions), A/B test the sales copy

- The copy should help users make a rational decision by providing a more comprehensive product description

For lower-priced products (that people buy on impulse), A/B test the images

- An impulse purchase is an emotional decision, and images influence emotions

When you want to change something that is already successful

When you run something extremely different from industry best practices

When should you NOT run an A/B test?

When your website has a large proportion of returning visitors

- This can be risky if returning visitors are already used to (and like) the old design

When you have nothing to lose

- In such cases, just implement the change without running an A/B test

When you already know what the result of the test will be

When you have a small audience

- You don't need to run A/B tests on parts of your website with small visitor counts

Key questions before starting an A/B test

(4) What will I learn from this A/B test?

(3) Can I afford the risk of not A/B testing this?

(2) Is an A/B test the best solution to my business problem?

(1) Do I have a large enough audience to run this experiment?

Important concepts

Main takeaways from an A/B test:

- Whether your new design / new copy / new X performs better than the original

- Not the quick wins, but what you can learn from the experiments

An A/B test is not conversion rate optimization; it's research

A/B testing prices: risky

Correlation != Causation

- Analyzing historical data shows correlations

- Running experiments shows causation

A/B test misconceptions

Can I use a split other than 50/50?

- Yes, but only in favor of your original version, e.g. 70% for the original version and 30% for the new version

- Why do this? Until you're sure the new version really is better than the original, you can limit it to a smaller percentage of visitors

- This might not be feasible if you don't have a big enough audience

- Example rollout:

(1) Test with a 98/2 split (98% original version, 2% new version)

(2) If the new version wins, test again with a 50/50 split

(3) If the new version wins again, release the new version to 100% of visitors
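The staged rollout above can be sketched as a weighted random assignment plus a simple "advance only on a win" rule. The stage list and function names are hypothetical. Whether the new version "won" at a stage would come from your actual test evaluation, which is not modelled here.

```python
import random
from collections import Counter
from typing import Optional

# Hypothetical rollout plan mirroring the steps above: 98/2, then 50/50, then 100%.
NEW_VERSION_SHARE = [0.02, 0.50, 1.00]


def assign_variant(rng: random.Random, new_share: float) -> str:
    """Randomly send `new_share` of visitors to the new version, the rest to the original."""
    return "new" if rng.random() < new_share else "original"


def next_stage(stage: int, new_version_won: bool) -> Optional[int]:
    """Move to the next split only if the new version won; None means keep the original."""
    if not new_version_won:
        return None
    return min(stage + 1, len(NEW_VERSION_SHARE) - 1)


def simulate_split(new_share: float, visitors: int, seed: int = 1) -> Counter:
    """Count how many simulated visitors land in each version."""
    rng = random.Random(seed)
    return Counter(assign_variant(rng, new_share) for _ in range(visitors))


if __name__ == "__main__":
    stage = 0
    print(simulate_split(NEW_VERSION_SHARE[stage], visitors=100_000))
    stage = next_stage(stage, new_version_won=True)  # move on to the 50/50 test
    print(NEW_VERSION_SHARE[stage])
```

With 100,000 visitors at the 98/2 stage, only about 2,000 see the new version. This is why the first stage needs a big audience to produce a usable result.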

Can I run an A/B/C/D/n test?

- The difference between an A/B/n test and a multivariate test: the versions in an A/B/n test don't necessarily combine multiple changed elements

- Otherwise, the same answer as for multivariate testing applies

Should I use multivariate testing?

- Only recommended for big businesses

- Running multiple versions requires a bigger sample size

- The best advice is to test a single variation at a time: it's much simpler and the result is easy to interpret
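Why multivariate tests need so much more traffic: every combination of options becomes its own version (cell). The elements, options and the 10,000-visitors-per-cell figure are hypothetical. The sketch also simplifies by keeping the per-cell sample size fixed. In practice, making more comparisons pushes it higher.

```python
from itertools import product

# Hypothetical page elements and the options tested for each one.
ELEMENTS = {
    "headline": ["current", "benefit-led"],
    "hero_image": ["photo", "illustration", "video"],
    "cta_text": ["Buy now", "Start free trial"],
}


def mvt_cells(elements: dict) -> list:
    """Every combination of options is a separate version (cell) in a multivariate test."""
    return list(product(*elements.values()))


def total_visitors(elements: dict, per_cell: int) -> int:
    """Traffic needed if each cell needs `per_cell` visitors."""
    return len(mvt_cells(elements)) * per_cell


if __name__ == "__main__":
    per_cell = 10_000  # assumed sample size per version, e.g. from a sample size calculator
    print(len(mvt_cells(ELEMENTS)), "cells need", total_visitors(ELEMENTS, per_cell), "visitors")
    print("A single A/B test needs", 2 * per_cell, "visitors")
```

Three modest elements already produce 12 cells, which need 120,000 visitors compared with 20,000 for a plain A/B test.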

You can only change one thing at a time

- Changing more than one thing is risky because you'll only see the total effect of all the changes

- But it is still an acceptable risk for small businesses

- Big businesses should only change one thing at a time

- Big business: should test individual elements

- Small business: should test concepts

Limitations

A/B testing is not the only research tool you can use

A/B tests are only good for measuring short-term effects

5 steps to an A/B test

(5) Post-experiment

Follow-ups

- Follow up in a few months' time to see the long-term effects

Summary

- Write a short one-page summary containing:

1. Your initial thoughts

2. Your research results

3. Hard facts (e.g. conversion % change, statistical significance at the end of the experiment)

4. Additional thoughts (e.g. conclusion, summary)

- Share it with coworkers and build a knowledge base
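"Conversion % change" can mean two different numbers, so it helps to report both in the summary. This is a small sketch; the conversion counts are hypothetical.

```python
def conversion_summary(conv_a: int, n_a: int, conv_b: int, n_b: int) -> dict:
    """Report the change both in percentage points and relative to the original rate."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    return {
        "rate_a_pct": rate_a * 100,
        "rate_b_pct": rate_b * 100,
        "absolute_change_pp": (rate_b - rate_a) * 100,  # percentage points
        "relative_change_pct": (rate_b - rate_a) / rate_a * 100,  # % of the original rate
    }


if __name__ == "__main__":
    # Hypothetical numbers: 400/10,000 conversions for A, 460/10,000 for B.
    for key, value in conversion_summary(400, 10_000, 460, 10_000).items():
        print(f"{key}: {value:.2f}")
```

Here a move from 4.00% to 4.60% is +0.60 percentage points, or +15% relative to the original. Writing down which one you mean avoids confusion later.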

(4) Evaluation

What to do when your A/B test underperforms?

Scenario 2

Scenario 1

Never lie to yourself while evaluating results

- Always stick to what you defined before starting the experiment (success metric, statistical significance level, etc.)

(3) Running Experiments

- 3rd party vendors: Optimizely, Google Analytics etc

Checking the interim results on a daily basis

- Ideally, check them only a few times while the test is running:

- Right after you start your experiment, to see that everything is OK

- Once every 3-4 days, to make sure nothing's broken

- After you've stopped your experiment
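Why daily checking is a problem: every look is another chance for noise to cross the significance threshold. This sketch runs many simulated A/A tests, so there is no real difference. It counts how often a test looks "significant" at any daily peek versus only at the planned end. It assumes a pooled two-proportion z-test. The numbers of experiments, days and visitors are hypothetical.

```python
import math
import random


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def peeking_false_positive_rates(experiments: int = 300, days: int = 14,
                                 daily_visitors: int = 200, rate: float = 0.05,
                                 alpha: float = 0.05, seed: int = 7):
    """Return (share 'significant' at any daily peek, share 'significant' at the end)."""
    rng = random.Random(seed)
    ever_significant = 0
    significant_at_end = 0
    for _experiment in range(experiments):
        conv_a = conv_b = n = 0
        flagged = False
        for _day in range(days):
            # Both versions have the same true conversion rate (an A/A test).
            conv_a += sum(rng.random() < rate for _ in range(daily_visitors))
            conv_b += sum(rng.random() < rate for _ in range(daily_visitors))
            n += daily_visitors
            p = p_value(conv_a, n, conv_b, n)
            if p < alpha:
                flagged = True  # a daily checker would have stopped and declared a winner here
        ever_significant += flagged
        significant_at_end += p < alpha  # p from the last day only
    return ever_significant / experiments, significant_at_end / experiments


if __name__ == "__main__":
    peeking, final = peeking_false_positive_rates()
    print(f"False positives when checking daily: {peeking:.1%}, at the planned end only: {final:.1%}")
```

The "at the end only" rate should stay near the 5% you chose. The "any peek" rate comes out noticeably higher, which is the cost of checking daily and acting on what you see.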

Changing the audience distribution in an ongoing A/B test

- Will mess up your result

Changing anything in an ongoing A/B test

- Always wait for the test to reach a statistically significant result before drawing any conclusions.

- Remember:

- The main goal of an A/B test is to learn

- A losing version B is not a failure; it is useful information.

Stopping the test the moment you see the new version winning

- To mitigate:

- Set a stopping rule before you start your A/B test (e.g. based on the test duration you calculated in the Preparation stage)

- You could add another condition to the stopping rule: stop the experiment IF the planned test duration has been reached AND your confidence level has stayed above 95% (or whatever significance level you set during Preparation) for the last 3 consecutive days
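The stopping rule above translates almost directly into code. This sketch assumes you record one confidence level (0 to 1) per day from your testing tool, for example 1 minus the p-value. The function name and parameters are hypothetical.

```python
from typing import Sequence


def should_stop(daily_confidence: Sequence[float], planned_days: int,
                threshold: float = 0.95, streak: int = 3) -> bool:
    """Stop only if the planned duration is reached AND confidence was above
    `threshold` on each of the last `streak` days.

    `daily_confidence` holds one value per elapsed day, oldest first.
    """
    if len(daily_confidence) < planned_days:
        return False  # never stop before the duration calculated in the Preparation stage
    recent = daily_confidence[-streak:]
    return len(recent) == streak and all(c > threshold for c in recent)


if __name__ == "__main__":
    history = [0.90] * 18 + [0.96, 0.97, 0.98]
    print(should_stop(history, planned_days=21))
```

The duration check comes first on purpose. A spike above 95% early in the test never triggers a stop on its own.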

(2) Implementation

Typical mistakes

Avoid flickering

- A flickering website is a poor user experience.

- Users might realize they are part of an A/B test if they see flickering.

- Place the tracking snippet at the very top of the page to avoid flickering.

Make sure your new version works

- A broken or unresponsive version B will skew the result.

Your users should not know they are part of an experiment

- Letting users know (intentionally or not) risks biasing their behaviour.

A/B versions should run in parallel

- If they don't run in parallel, external factors can ruin the result without you noticing.

- E.g. if version A runs in October and version B runs in November, seasonality might skew your result.

Randomization

- Always use a random generator.
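The notes only say to use a random generator. One common way to do this in practice is to hash the user ID together with an experiment name. This is an assumption, not something the source describes, and it swaps a live random draw for deterministic hashing. The result behaves like a random draw across users, but each returning user stays in the same version. The experiment name and user IDs below are hypothetical.

```python
import hashlib


def bucket(user_id: str, experiment: str, buckets: int = 100) -> int:
    """Map a user to a stable, pseudo-random bucket in [0, buckets)."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    return int(hashlib.sha256(key).hexdigest(), 16) % buckets


def assign(user_id: str, experiment: str, share_b_pct: int = 50) -> str:
    """Users in the first `share_b_pct` buckets see version B, everyone else version A."""
    return "B" if bucket(user_id, experiment) < share_b_pct else "A"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(20_000)]
    b_count = sum(assign(u, "homepage-headline") == "B" for u in users)
    print(f"{b_count} of {len(users)} users in B")
    # The same user always lands in the same version of the same experiment.
    print(assign("user-42", "homepage-headline") == assign("user-42", "homepage-headline"))
```

Including the experiment name in the hash means different experiments split users independently. Without it, the same users would always end up in B across every test.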

(1) Preparation

(1.6) Set up a hypothesis

- A hypothesis consists of 2 parts: "I'll do X, so I expect Y to happen"

- A more comprehensive (and better-supported) hypothesis covers 5 key questions:

(5) How big a sample size do you need, and how long will your test run?

- These should be decided during the preparation stage.

- Rule of thumb: between 2 and 5 weeks (no more than 5 weeks).

- Use an online sample size and duration calculator.

(4) What results do you expect to see?

- Describe your success metric

- By at least how many percent do you expect the success metric to move? (Minimum Detectable Effect, MDE)

(3) What will you change?

- Describe which part of your online business you'll change and exactly what changes you'll make

(2) The research results

- What qualitative and quantitative research supports your concept? (Include results from your initial research to support this.)

- What problem or opportunity did you discover?

- What qualitative and quantitative data points validate it?

(1) The concepts
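For question (5), a rough sketch of what an online sample size and duration calculator computes. Calculators differ slightly in their formulas. This one uses the standard normal-approximation formula for comparing two proportions, with assumed defaults of α = 0.05 (95% significance) and 80% power. The MDE from question (4) is expressed here as a relative lift. The baseline rate, MDE and daily traffic are hypothetical, and traffic is assumed to be split evenly across variants.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed per variant for a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # the smallest lift worth detecting (MDE)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def duration_days(n_per_variant: int, variants: int, daily_visitors: int) -> int:
    """Days needed if all `daily_visitors` are split evenly across the variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)


if __name__ == "__main__":
    n = sample_size_per_variant(baseline_rate=0.05, relative_mde=0.10)
    days = duration_days(n, variants=2, daily_visitors=4_000)
    print(f"{n} visitors per variant, about {days} days")
    print("Within the 2-5 week rule of thumb:", 14 <= days <= 35)
```

With a 5% baseline and a 10% relative MDE, this comes to roughly 31,000 visitors per variant. At 4,000 visitors a day that is 16 days, inside the 2-5 week rule of thumb. Halving the MDE roughly quadruples the required sample, which is why small audiences struggle to detect small effects.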

(1.5) Set up success metrics

- 2 rules for choosing success metrics:

- (a) The success metric should be set before the experiment

- (b) You should choose only 1 success metric (to avoid observer bias, i.e. cherry-picking the metric that aligns with what we want to believe)

- How to choose a success metric?

- (a) You have enough data points for it

- (b) It is not a lagging metric

- (c) It should be an important key metric for your business

A workaround for a lagging metric is to use an early predictor: a "conversion metric" that we can use to predict our lagging key metric early on.

An example of a lagging metric is 'trial-to-paid' conversion. Imagine your product offers a 30-day free trial: the number of trial customers who convert to paying customers is a lagging metric, because we have to wait for the trial period to end to get our first conversion data.

Example 2: Netflix

- Netflix wants to test its landing page, and one of its key metrics is 'trial-to-paid' conversion. But this is a lagging metric

- Instead of using this, Netflix can use an early predictor.

Example 1: Testing the name of a course with an A/B test

- The success metric that would ideally fulfil all 3 conditions: subscriptions

- But we would never get enough data points for subscriptions to reach statistical significance

- So here, we should use click-through as the success metric instead. This is okay if we are confident both metrics have the same spread.

(1.4) 5-second testing

- Filter out bad candidate solutions, again through qualitative research

- What is a 5-second test?

- Show a landing page or advert to a potential user for 5 seconds

- After 5 seconds, ask them what they think the page sells

- If the candidate solution passes the 5-second test, it's a good sign that we can proceed to A/B test it.

5-second test demo

(1.3) Brainstorming

- Get everyone in the team involved

- A great brainstorming session results in ~5 candidate solutions

(1.2) Data Analysis

- Validate your hunches with actual data

- Advantages:

- More data points to work with

- Unbiased user behaviour

(1.1) Qualitative Research

- Collect ideas and hunches

- Eg methods: user interviews, usability tests, surveys

- Work with a smaller sample size, but aim for deep understanding

- Recommendation: running 5 usability tests will give you a list of 10-30 hunches and suspicions, prioritized by importance (not statistically significant, but good enough to start)