CNN_2

8. Analyzing Results

Analyse results on Validation Set

Most incorrect: label 1 (dogs)

#Most incorrect dogs

plot_val_with_title(most_by_correct(1, False), "Most incorrect dogs")

Most incorrect: label 0 (cats)

#Most incorrect cats

plot_val_with_title(most_by_correct(0, False), "Most incorrect cats")

Plotting confusion Matrix

preds = np.argmax(probs, axis=1)  # hard class predictions (0 = cat, 1 = dog)

probs = probs[:,1]  # keep only P(dog) for the plotting helpers

# Plotting confusion Matrix

from sklearn.metrics import confusion_matrix

cm = confusion_matrix(y, preds)

plot_confusion_matrix(cm, data.classes)
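Here is a minimal NumPy sketch of what `confusion_matrix` counts in the two-class case. It assumes integer labels (0 = cats, 1 = dogs) and uses the same layout as sklearn, with true labels as rows and predicted labels as columns. `binary_confusion_matrix` and the toy labels are hypothetical.

```python
import numpy as np

def binary_confusion_matrix(y_true, y_pred, n_classes=2):
    """Count (true, predicted) pairs; rows = true label, columns = predicted label."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    # np.add.at accumulates correctly even when the same (row, col) pair repeats
    np.add.at(cm, (y_true, y_pred), 1)
    return cm

# Toy labels: 0 = cat, 1 = dog (made-up values for illustration)
y_true = np.array([0, 0, 0, 1, 1, 1, 1])
y_pred = np.array([0, 1, 0, 1, 1, 0, 1])
cm = binary_confusion_matrix(y_true, y_pred)
print(cm)
```

The diagonal holds the correct predictions. The off-diagonal cells are the "most incorrect" cases plotted above.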

# Test Time Augmentation.

# We are going to take 4 random data augmentations as well as the un-augmented original (center-cropped).

# We will then calculate predictions for all these images, take the average, and make that our final prediction.

# Note that TTA is only used for the validation set and/or the test set
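This minimal NumPy sketch shows the averaging step. It assumes `log_preds` is stacked with shape (n_augmentations + 1, n_images, n_classes), one slice per augmented copy plus the original, which is how the `np.mean(np.exp(log_preds), 0)` calls in this notebook treat it. The network outputs are random toy values.

```python
import numpy as np

rng = np.random.default_rng(0)
n_views, n_images, n_classes = 5, 3, 2   # 4 random augmentations + 1 center crop

# Fake network outputs turned into log-probabilities (log-softmax) per view
logits = rng.normal(size=(n_views, n_images, n_classes))
log_preds = logits - np.log(np.exp(logits).sum(axis=-1, keepdims=True))

# Average the probabilities (not the log-probabilities) over the views
probs = np.mean(np.exp(log_preds), axis=0)      # shape (n_images, n_classes)
final_preds = np.argmax(probs, axis=1)          # one class per image
print(probs.shape, final_preds)
```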

7. Test Time Augmentation

Individual Prediction

Method 2

# Method 2

trn_tfms, val_tfms = tfms_from_model(arch, sz)

im = val_tfms(open_image(PATH + fn)) # open_image() returns numpy.ndarray

preds = learn.predict_array(im[None])  # im[None] adds a leading batch dimension

np.argmax(preds)
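`im[None]` adds a leading batch axis, because the model expects a batch of images even when predicting a single one. This small NumPy sketch assumes a channels-first 3x224x224 image; the exact layout returned by `val_tfms` is an assumption here.

```python
import numpy as np

im = np.zeros((3, 224, 224), dtype=np.float32)  # one image, channels first (assumed layout)
batch = im[None]                                 # same as im[np.newaxis, ...]
print(batch.shape)                               # (1, 3, 224, 224)

# The model returns one row of class scores per image in the batch;
# fake log-probabilities for cats (0) and dogs (1):
preds = np.log(np.array([[0.2, 0.8]]))
print(np.argmax(preds))                          # 1 -> index into data.classes
```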

Method 1

# Method 1

# We will pick the first file from the validation set.

# This is the shortest way to get a prediction:

trn_tfms, val_tfms = tfms_from_model(arch, sz)

ds = FilesIndexArrayDataset([fn], np.array([0]), val_tfms, PATH)

dl = DataLoader(ds)

preds = learn.predict_dl(dl)

np.argmax(preds)

learn.data.classes[np.argmax(preds)]

Observe data point

# What if we want to run a single image through a model to get a prediction?

fn = data.val_ds.fnames[0]; fn

Image.open(PATH + fn).resize((150,150))

Creation of Probability Matrix

Optional: create a download link for the output

# Now you can call df.to_csv to create a CSV file

# and compression='gzip' will zip it up on the server.

SUBM = f'{PATH}subm/'  # PATH already ends with '/'

os.makedirs(SUBM, exist_ok=True)

df.to_csv(f'{SUBM}subm.gz', compression='gzip', index=False)
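If pandas is not at hand, the standard library can write the same gzip-compressed CSV. This sketch assumes a header of `id` followed by one column per class. The output directory, the class names, and the probability values are made up; the ID is the example file name from the notebook with its prefix and suffix removed.

```python
import csv
import gzip
import os
import tempfile

def write_gzip_csv(path, header, rows):
    """Write rows to a gzip-compressed CSV file (no index column)."""
    # 'wt' opens the gzip stream in text mode; newline='' is what the csv module expects
    with gzip.open(path, "wt", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)

out_dir = tempfile.mkdtemp()
path = os.path.join(out_dir, "subm.gz")
header = ["id", "affenpinscher", "beagle"]          # hypothetical class columns
rows = [["0042d6bf3e5f3700865886db32689436", 0.1, 0.9]]
write_gzip_csv(path, header, rows)

# Read it back to check the round trip
with gzip.open(path, "rt", newline="") as f:
    back = list(csv.reader(f))
print(back)
```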

# You can use Kaggle CLI to submit from the server directly,

# or you can use FileLink which will give you a link to download the file from the server to your computer.

FileLink(f'{SUBM}subm.gz')

Insert ID

# Insert a new column at position zero named id.

# Remove the first 5 and last 4 characters, since we just need the IDs

# (a file name looks like test/0042d6bf3e5f3700865886db32689436.jpg)

df.insert(0, 'id', [o[5:-4] for o in data.test_ds.fnames])
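Here is the slicing applied to the example file name from the comment above. A variant based on `os.path` that does not depend on fixed prefix and suffix lengths is shown for comparison. That variant is a suggestion, not what the notebook uses.

```python
import os

fnames = ["test/0042d6bf3e5f3700865886db32689436.jpg"]   # example from the comment above

# Drop the 'test/' prefix (5 characters) and the '.jpg' suffix (4 characters)
ids = [o[5:-4] for o in fnames]
print(ids)  # ['0042d6bf3e5f3700865886db32689436']

# Alternative: strip the directory and the extension, whatever their lengths
ids_robust = [os.path.splitext(os.path.basename(o))[0] for o in fnames]
print(ids == ids_robust)  # True
```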

View shape

probs.shape # (n_images, n_classes)

(10357, 120)

Testing

# It is always a good idea to use TTA:

# is_test=True : it will give you predictions on the test set rather than the validation set

# By default, the model returns the log of the predicted probabilities,

# so you need to do np.exp(log_preds) to get the probabilities.

log_preds, y = learn.TTA(is_test=True) # use test dataset rather than validation dataset

probs = np.mean(np.exp(log_preds),0)

#accuracy_np(probs, y), metrics.log_loss(y, probs) # This does not make sense, since the test dataset has no labels

Validation

Accuracy

Dog breed

accuracy_np(probs,y), metrics.log_loss(y, probs)
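This NumPy sketch computes the two metrics. It assumes `probs` has shape (n_images, n_classes) and `y` holds integer labels. `accuracy_sketch` and `log_loss_sketch` are hypothetical stand-ins for `accuracy_np` and `sklearn.metrics.log_loss`, not their source code, and the clipping epsilon is an assumption.

```python
import numpy as np

def accuracy_sketch(probs, y):
    """Fraction of rows whose highest-probability class equals the label."""
    return float(np.mean(np.argmax(probs, axis=1) == y))

def log_loss_sketch(y, probs, eps=1e-15):
    """Mean negative log-probability assigned to the true class."""
    p = np.clip(probs, eps, 1 - eps)
    p = p / p.sum(axis=1, keepdims=True)          # renormalize rows after clipping
    return float(-np.mean(np.log(p[np.arange(len(y)), y])))

probs = np.array([[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]])   # made-up predictions
y = np.array([0, 1, 1])
acc = accuracy_sketch(probs, y)    # 2 of 3 correct
ll = log_loss_sketch(y, probs)
print(acc, ll)
```

Log loss also penalizes confident mistakes, so two models with the same accuracy can have very different log loss.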

Cats & dogs

# Accuracy with Final model & TTA

accuracy_np(probs, y)

Predictions

log_preds,y = learn.TTA()

probs = np.mean(np.exp(log_preds),0)

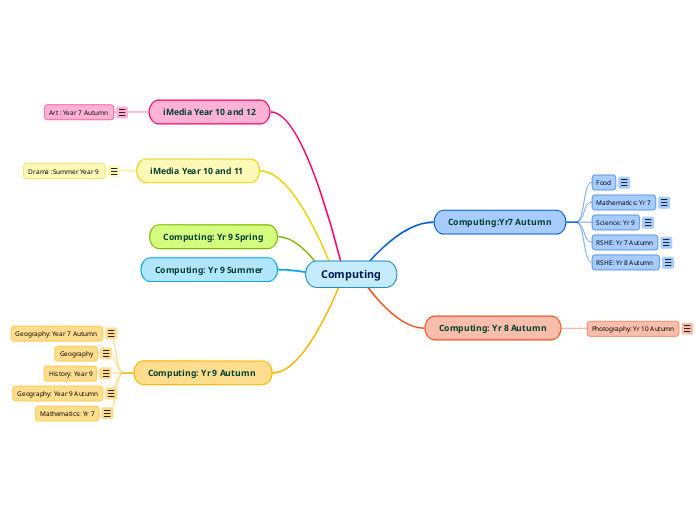

6. Model Development

e. Fine-tuning and differential learning rate annealing

# So far, we have not retrained any of the pre-trained features,

# specifically, any of the weights in the convolutional kernels.

# All we have done is add some new layers on top and learn how to mix and match the pre-trained features.

# Images such as satellite images or CT scans have entirely different kinds of features,

# so for those you want to retrain many layers.

# For dogs and cats, the images are similar to what the model was pre-trained on,

# but we may still find it helpful to slightly tune some of the later layers.

# Once the convolutional filters are unfrozen (learn.unfreeze()), we start actually changing them, using the differential learning rates in lr:

learn.fit(lr, 3, cycle_len=1, cycle_mult=2)

Check Accuracy

epoch  trn_loss  val_loss  accuracy
0      0.045694  0.025966  0.991
1      0.040596  0.019218  0.993
2      0.035022  0.018968  0.9925
3      0.027045  0.021262  0.992
4      0.027029  0.018686  0.993
5      0.022999  0.017357  0.993
6      0.02003   0.017553  0.993

# Earlier we said 3 is the number of epochs, but it is actually the number of cycles.

# So if cycle_len=2, it would do 3 cycles of 2 epochs each (i.e. 6 epochs). Here cycle_len=1, so why did it run 7 epochs?

# It is because of cycle_mult=2: this multiplies the length of the cycle after each cycle

# (1 epoch + 2 epochs + 4 epochs = 7 epochs).
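The epoch arithmetic above can be written as a tiny helper. `total_epochs` is hypothetical and simply encodes the rule described here: each cycle is `cycle_mult` times longer than the previous one.

```python
def total_epochs(n_cycle, cycle_len=1, cycle_mult=1):
    """Epochs run by fit(lr, n_cycle, cycle_len=..., cycle_mult=...):
    cycle i lasts cycle_len * cycle_mult**i epochs."""
    return sum(cycle_len * cycle_mult ** i for i in range(n_cycle))

print(total_epochs(3, cycle_len=1, cycle_mult=2))  # 1 + 2 + 4 = 7
print(total_epochs(3, cycle_len=2))                # 2 + 2 + 2 = 6
```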

Set Differential Learning Rate

lr=np.array([1e-4,1e-3,1e-2])

Observe Learning Rate

# Visualize the learning rate schedule resulting from LR annealing, SGDR and differential learning rates

learn.sched.plot_lr()

# Earlier layers, like the first layer (which detects diagonal edges or gradients) or

# the second layer (which recognizes corners or curves), probably do not need to change by much, if at all.

# Later layers are much more likely to need more learning, so we create an array of learning rates (differential learning rates).
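This sketch shows how a three-element learning-rate array lines up with layer groups, ordered from the earliest layers to the new head. The group names are hypothetical. The sketch also assumes that a schedule such as SGDR multiplies every group's rate by the same factor at each step, which preserves the 1:10:100 ratio between groups.

```python
import numpy as np

# Learning rates from earliest to latest layer group, as in the cell above
lr = np.array([1e-4, 1e-3, 1e-2])
groups = ["early conv layers", "later conv layers", "new head"]   # hypothetical group names

for name, group_lr in zip(groups, lr):
    print(f"{name:<18} lr={group_lr:g}")

# If a schedule scales every group's rate by the same factor (assumption),
# the ratios between the groups stay the same.
factor = 0.5
scaled = lr * factor
print(scaled / scaled[0])
```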

Unfreeze Conv Filters

learn.unfreeze()


d. Choosing Learning Rate

Save Model

View model arch

learn.summary()

Load Model

# Load saved model

learn.load('224_lastlayer')

# Save model

learn.save('224_lastlayer')

Fit Model on Current data

learn.fit(1e-2, 3, cycle_len=1)

# cycle_len enables stochastic gradient descent with restarts (SGDR).

# In a "spiky" region of the loss surface, small changes to the weights may result in big changes to the loss.

# We want to encourage our model to find parts of the weight space that are both accurate and stable.

# Therefore, from time to time we increase the learning rate (this is the ‘restarts’ in ‘SGDR’),

# which forces the model to jump to a different part of the weight space if the current area is “spiky”.

# The basic idea is that as you get closer and closer to the spot with the minimal loss,

# you may want to start decreasing the learning rate (taking smaller steps) in order to get to exactly the right spot.

# This is called learning rate annealing.

# One approach is simply to pick some kind of functional form for the schedule;

# a really good functional form is one half of the cosine curve,

# which keeps the learning rate high for a while at the beginning, then brings it down quickly as you get closer.
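This minimal NumPy sketch implements cosine annealing with restarts. The learning rate follows half a cosine from `lr_max` towards 0 within each cycle and jumps back to `lr_max` at the start of the next cycle. The fixed number of iterations per cycle and the absence of a minimum learning rate are simplifying assumptions, not fastai's exact implementation.

```python
import numpy as np

def sgdr_schedule(lr_max, n_cycle, iters_per_cycle, cycle_mult=1):
    """Learning rate per iteration: half-cosine decay within each cycle, reset at each restart."""
    lrs = []
    length = iters_per_cycle
    for _ in range(n_cycle):
        t = np.arange(length) / length                    # progress through the cycle in [0, 1)
        lrs.append(lr_max / 2 * (1 + np.cos(np.pi * t)))  # lr_max at t=0, approaching 0 near t=1
        length *= cycle_mult                              # later cycles get longer
    return np.concatenate(lrs)

lrs = sgdr_schedule(1e-2, n_cycle=3, iters_per_cycle=100)
print(lrs[0], lrs[50], lrs[99], lrs[100])  # start, middle, end of cycle 1, restart
```

Plotting `lrs` shows the same sawtooth-of-cosines shape that `learn.sched.plot_lr()` displays after training with `cycle_len`.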

# So far we are using a pre-trained network which has already learned to recognize features

# (i.e. we do not want to change the parameters, or weights, that it learned),

# so what we can do is pre-compute the activations of the hidden layers and just train the final linear portion.

# The precomputed activations come from the un-augmented images, so to use data augmentation we have to set learn.precompute=False

learn.precompute=False
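This toy NumPy sketch illustrates the precompute idea. A frozen "backbone" (a fixed random projection plus ReLU, standing in for the pre-trained conv layers) runs once, its activations are cached, and only a linear head is trained on the cache. Augmented inputs change every epoch, so they have to go through the backbone again, which is why augmentation only takes effect with `precompute=False`. All data, sizes, and function names are made up.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: 200 flattened "images"; label = 1 when the first pixel is positive
X = rng.normal(size=(200, 20))
y = (X[:, 0] > 0).astype(float)

# Frozen "backbone": fixed random weights + ReLU, never updated
W_frozen = rng.normal(size=(20, 64))
backbone_calls = 0

def backbone(x):
    global backbone_calls
    backbone_calls += 1
    return np.maximum(x @ W_frozen, 0)

def train_head(feats, y, lr=0.1, steps=200):
    """Fit only a logistic-regression head on fixed features; return the loss per step."""
    f = (feats - feats.mean(0)) / (feats.std(0) + 1e-8)    # standardize each feature
    w, b, losses = np.zeros(f.shape[1]), 0.0, []
    for _ in range(steps):
        p = 1 / (1 + np.exp(-(f @ w + b)))
        pc = np.clip(p, 1e-12, 1 - 1e-12)                   # avoid log(0) in the loss
        losses.append(-np.mean(y * np.log(pc) + (1 - y) * np.log(1 - pc)))
        w -= lr * f.T @ (p - y) / len(y)
        b -= lr * np.mean(p - y)
    return losses

# precompute=True analogue: run the backbone ONCE, then train the head on the cache
cached = backbone(X)
losses = train_head(cached, y)
print(backbone_calls, round(losses[0], 4), round(losses[-1], 4))

# precompute=False analogue: augmented inputs differ every epoch,
# so they must go through the backbone again each time
backbone_calls = 0
for epoch in range(3):
    X_aug = X + rng.normal(scale=0.1, size=X.shape)   # toy "augmentation"
    feats = backbone(X_aug)                            # a real loop would now update the head
print(backbone_calls)
```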

c. Model Development Step 2

Check accuracy

epoch  trn_loss  val_loss  accuracy
0      0.048828  0.029881  0.99

# Step 1

learn.fit(1e-2, 5)

# Step 2

learn.precompute = False

learn.fit(1e-2,5,cycle_len=1)

# Step 3

learn.fit(1e-2,5,cycle_len=1)

learn.fit(1e-2, 1)

Option 2

learn = ConvLearner.pretrained(arch, data, precompute=True, ps=0.5)

# ps: the dropout probability

Option 1

# At its creation (i.e. when you run learn = ... below), with precompute=True,

# the augmentations initially do nothing, because the model uses activations precomputed from the un-augmented images

learn = ConvLearner.pretrained(arch, data, precompute=True)

learn.fit(1e-2, 1)

# At this point, your data (images) are formatted according to your pre-trained model (arch) and preferences (sz, DA, zoom…),

# and they are ready-to-be used

# Before: data = ImageClassifierData.from_paths(PATH, tfms=tfms_from_model(arch,sz))

# Now: a new data object that includes this augmentation in the transforms,

# which allows the convolutional neural net to learn how to recognize cats or dogs from different angles.

data = ImageClassifierData.from_paths(PATH, tfms=tfms)

Data Transformation

Visualize Data Transformations

def get_augs():
    data = ImageClassifierData.from_paths(PATH, bs=2, tfms=tfms, num_workers=1)
    x,_ = next(iter(data.aug_dl))
    return data.trn_ds.denorm(x)[1]

ims = np.stack([get_augs() for i in range(6)])

plots(ims, rows=2)  # show the 6 augmented versions in 2 rows

or

Perform Data Transformation

# Defining Data augmentation transformations

tfms = tfms_from_model(resnet34, sz, aug_tfms=transforms_side_on, max_zoom=1.1)

For dog breed, or:

def get_data(sz, bs):
    tfms = tfms_from_model(arch, sz, aug_tfms=transforms_side_on, max_zoom=1.1)
    data = ImageClassifierData.from_csv(PATH, 'train', f'{PATH}labels.csv', test_name='test', num_workers=4,
                                        val_idxs=val_idxs, suffix='.jpg', tfms=tfms, bs=bs)
    # For sizes up to 300, use copies of the images resized to 340 (stored under 'tmp') so loading is faster
    return data if sz>300 else data.resize(340, 'tmp')

data = get_data(sz, bs)  # call the function after it is defined

b. Choosing Learning Rate

# Find an optimal learning rate.

# We simply keep increasing the learning rate from a very small value until the loss stops decreasing.

# We should re-run this command whenever the model changes.

learn.lr_find()
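This sketch shows the learning-rate range test described above. The learning rate grows exponentially (evenly on a log scale) from one mini-batch to the next, and the loop stops once the loss has clearly blown up. The toy loss curve and the "stop when loss exceeds 4x the best loss" rule are assumptions for illustration. In real training, each step would be one mini-batch update.

```python
import numpy as np

def lr_range(start_lr=1e-5, end_lr=10.0, n_iters=100):
    """One learning rate per mini-batch, growing exponentially from start_lr to end_lr."""
    return start_lr * (end_lr / start_lr) ** (np.arange(n_iters) / (n_iters - 1))

def toy_loss(lr):
    # Made-up loss curve: improves as lr grows, is best near lr = 0.1, then blows up
    return 1.0 + (np.log10(lr) + 1) ** 2

lrs = lr_range()
history, best = [], np.inf
for lr in lrs:
    loss = toy_loss(lr)          # in real training: one mini-batch step at this lr
    history.append((lr, loss))
    best = min(best, loss)
    if loss > 4 * best:          # stop once the loss has clearly diverged (assumed rule)
        break

best_lr = min(history, key=lambda h: h[1])[0]
print(len(history), best_lr)
```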

Observe Learning rate

LR with loss

# plot of loss vs learning rate

learn.sched.plot()

LR with iterations

# For each mini-batch we increase the learning rate; the loss decreases at first and then gets worse

learn.sched.plot_lr()

Find learning rate

We should re-run lr_find() whenever the model changes.

Take the learning rate at the lowest point of the loss curve and divide it by 10.
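This rule of thumb can be applied to a recorded (learning rate, loss) history. `pick_lr` is a hypothetical helper, and the recorded values are made up.

```python
import numpy as np

def pick_lr(lrs, losses, divisor=10):
    """Learning rate at the lowest recorded loss, divided by `divisor`."""
    best = int(np.argmin(losses))
    return lrs[best] / divisor

lrs = np.array([1e-4, 1e-3, 1e-2, 1e-1, 1.0])
losses = np.array([0.70, 0.65, 0.40, 0.30, 2.5])   # made-up lr_find-style record
print(pick_lr(lrs, losses))   # 0.01
```

Dividing by 10 keeps the chosen rate on the part of the curve where the loss is still falling, away from the point where it starts to blow up.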

Create learner with precompute=True

# Create new learner to set learning rate for new untrained model

learn = ConvLearner.pretrained(arch, data, precompute=True)

a. Model Development Step 1


e. Analyse results on Validation Set

Observe Image Size

Info

# Now we check the image size. If the images are huge, you have to think really carefully about how to deal with them.

# If they are tiny, that is also challenging. Most ImageNet models are trained on either 224 by 224 or 299 by 299 images

Analyse Results on validation

Most Uncertain Predictions

#Most uncertain predictions

most_uncertain = np.argsort(np.abs(probs -0.5))[:4]

plot_val_with_title(most_uncertain, "Most uncertain predictions")
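The selection above ranks images by how close P(dog) is to 0.5. Here is the same expression on made-up probabilities.

```python
import numpy as np

probs = np.array([0.98, 0.51, 0.03, 0.45, 0.70, 0.52, 0.10])  # made-up P(dog) per image

# Distance from 0.5 measures confidence; the smallest distances are the most uncertain
most_uncertain = np.argsort(np.abs(probs - 0.5))[:4]
print(most_uncertain)   # [1 5 3 4]
```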

Most correct: label 1 (dogs)

# Most correct dogs

plot_val_with_title(most_by_correct(1, True), "Most correct dogs")

Most correct: label 0 (cats)

# Most correct cats

plot_val_with_title(most_by_correct(0, True), "Most correct cats")

Correct labels

# 1. A few correct labels at random

plot_val_with_title(rand_by_correct(True), "Correctly classified")

Incorrect labels

# 2. A few incorrect labels at random

plot_val_with_title(rand_by_correct(False), "Incorrectly classified")

Data Preparation before analysis

# Labels for the validation data

#This is what the validation dataset label (think of it as the correct answers) looks like:

print(data.val_y)

Some critical functions

Function set 4

#Source:

def resize(self, targ, new_path):
    new_ds = []
    dls = [self.trn_dl,self.val_dl,self.fix_dl,self.aug_dl]
    if self.test_dl: dls += [self.test_dl, self.test_aug_dl]
    else: dls += [None,None]
    t = tqdm_notebook(dls)
    for dl in t: new_ds.append(self.resized(dl, targ, new_path))
    t.close()
    return self.__class__(new_ds[0].path, new_ds, self.bs, self.num_workers, self.classes)

#File: ~/fastai/courses/dl1/fastai/dataset.py

Function set 3

def most_by_mask(mask, mult):
    idxs = np.where(mask)[0]
    return idxs[np.argsort(mult * probs[idxs])[:4]]

def most_by_correct(y, is_correct):
    mult = -1 if (y==1)==is_correct else 1
    return most_by_mask(((preds == data.val_y)==is_correct) & (data.val_y == y), mult)
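This self-contained version of `most_by_correct` takes `probs`, `preds` and `val_y` as arguments instead of reading notebook globals. It shows why the sign `mult` flips. Sorting `mult * probs` ascending puts the highest P(dog) first for correct dogs and for incorrect cats, and the lowest P(dog) first otherwise. The function name and toy arrays are hypothetical.

```python
import numpy as np

def most_by_correct_sketch(label, is_correct, probs, preds, val_y, k=4):
    """Indexes of the k most extreme correct (or incorrect) predictions for one label."""
    mult = -1 if (label == 1) == is_correct else 1
    idxs = np.where(((preds == val_y) == is_correct) & (val_y == label))[0]
    return idxs[np.argsort(mult * probs[idxs])[:k]]

val_y = np.array([1, 1, 1, 0, 0, 0])
probs = np.array([0.95, 0.60, 0.20, 0.05, 0.40, 0.90])   # made-up P(dog)
preds = (probs > 0.5).astype(int)                       # [1, 1, 0, 0, 0, 1]

print(most_by_correct_sketch(1, True, probs, preds, val_y))   # most correct dogs: [0 1]
print(most_by_correct_sketch(0, False, probs, preds, val_y))  # most incorrect cats: [5]
```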

Function set 2

def load_img_id(ds, idx): return np.array(PIL.Image.open(PATH+ds.fnames[idx]))

def plot_val_with_title(idxs, title):
    imgs = [load_img_id(data.val_ds,x) for x in idxs]
    title_probs = [probs[x] for x in idxs]
    print(title)
    return plots(imgs, rows=1, titles=title_probs, figsize=(16,8)) if len(imgs)>0 else print('Not Found.')

Function set 1

def rand_by_mask(mask):
    idxs = np.where(mask)[0]
    # Sample at most 4 indexes; without replacement we cannot draw more than len(idxs)
    return np.random.choice(idxs, min(len(idxs), 4), replace=False)

def rand_by_correct(is_correct): return rand_by_mask((preds == data.val_y)==is_correct)

def plots(ims, figsize=(12,6), rows=1, titles=None):
    f = plt.figure(figsize=figsize)
    cols = int(np.ceil(len(ims) / rows))  # enough columns to fit every image
    for i in range(len(ims)):
        sp = f.add_subplot(rows, cols, i+1)
        sp.axis('off')
        if titles is not None: sp.set_title(titles[i], fontsize=16)
        plt.imshow(ims[i])

Convert log-probabilities to probabilities

# The output has two columns: the prediction for cats and the prediction for dogs

# Convert log-probabilities to hard predictions (0 or 1)

preds = np.argmax(log_preds, axis=1)

# Probability of dog

probs = np.exp(log_preds[:,1])
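The same two conversions on made-up values. Because `log` is monotonic, taking `argmax` on log-probabilities gives the same classes as on probabilities. `np.exp` undoes the log to recover P(dog).

```python
import numpy as np

# Made-up log-probabilities for 3 images: column 0 = cat, column 1 = dog
log_preds = np.log(np.array([[0.9, 0.1], [0.3, 0.7], [0.5, 0.5]]))

preds = np.argmax(log_preds, axis=1)   # hard labels; ties go to the first class (cat)
probs = np.exp(log_preds[:, 1])        # back to probabilities: P(dog)
print(preds, probs)
```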

Make Log predictions

# Let’s make predictions for the validation set (predictions are in log scale):

log_preds = learn.predict()

log_preds.shape

# Observe Log predictions

log_preds[0:5]

Find the class labels

# This shows that cats are class 0 and dogs are class 1

print(data.classes)

Labels for the validation data

d. Fit the model

# At this point (precompute=True and first layers frozen), only the last layers of your new NN will be trained

# (ie, their weights will be updated in order to minimize the loss of the model)

learn.fit(0.01,2)

Check accuracy

epoch  trn_loss  val_loss  accuracy
0      0.053085  0.030423  0.989
1      0.046366  0.031399  0.9875

For dog breed

learn.fit(1e-2, 5)

data contains the validation and training data; learn contains the model

c. Create learner with Precompute =True

# At its creation (i.e. when you run learn = ConvLearner.pretrained(...)) and by default,

# the new NN freezes the first layers (the ones from arch) and downloads the pre-trained weights of arch.

# Moreover, precompute=False by default, so you must pass precompute=True explicitly if you want to change the default behavior.

learn = ConvLearner.pretrained(arch,data,precompute=True)


# The first run may take a while, because the activations have to be precomputed for the transformed data

b. Perform Data Formatting

data variable actions

Data resize

data = data.resize(340, 'tmp')  # resize returns a new data object

View Training data

fn = PATH + data.trn_ds.fnames[0]; fn

img = PIL.Image.open(fn); img

data training file

data.trn_ds

data validation - view variable

data.val_ds.y

data test files

# data.test_ds.fnames : contains the test file names

data.test_ds.fnames

View data classes

# data.classes : contains all the different classes

data.classes

For dog breed

# When we start working with a new dataset, we want everything to go super fast.

# So we made it possible to specify the size and start with something like 64, which will run fast.

# Later, we will use bigger images and bigger architectures, at which point you may run out of GPU memory.

# If you see a CUDA out of memory error, the first thing you need to do is restart the kernel (you cannot recover from it),

# then make the batch size smaller.


# Call function

data=get_data(sz,bs)

For cats & dogs

# At this point, your data (images) are formatted according to your pre-trained model (arch) and preferences (sz, DA, zoom…),

# and they are ready-to-be used

data = ImageClassifierData.from_paths(PATH, tfms=tfms_from_model(arch,sz))

When the images are loaded into the data object, they are cropped to a square (side of the square = smaller dimension of the original image) and resized to sz x sz (i.e. 64x64 if sz=64).

https://forums.fast.ai/t/understanding-how-image-size-is-handled-in-fast-ai/12330
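This sketch covers the geometry of "crop to a square, then resize to sz". It assumes a center crop. Training images may instead be cropped at a random position, so treat the box position as an assumption. Both helpers are hypothetical.

```python
def center_square_crop_box(width, height):
    """(left, top, right, bottom) of the largest centered square; side = smaller dimension."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return left, top, left + side, top + side

def crop_then_resize(width, height, sz):
    """Crop box plus the scale factor that maps the square onto sz x sz pixels."""
    box = center_square_crop_box(width, height)
    scale = sz / min(width, height)
    return box, scale

box, scale = crop_then_resize(500, 375, sz=64)
print(box, round(scale, 4))   # (62, 0, 437, 375) 0.1707
```

With PIL, the same box can be passed to `img.crop(box)`, followed by `.resize((sz, sz))`.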

a. Optional Step


# Uncomment the below if you need to reset your precomputed activations

# shutil.rmtree(f'{PATH}tmp', ignore_errors=True)

5. Observations

c. Observe Files

Folder Structure

- Dog breed-csv

For dog - breed CSV

Access Data

# You can access the training dataset via data.trn_ds; trn_ds contains a lot of things, including the file names (fnames)

fn = PATH + data.trn_ds.fnames[0]; fn

img = PIL.Image.open(fn); img

img.size

Data transformations

tfms = tfms_from_model(arch, sz, aug_tfms=transforms_side_on, max_zoom=1.1)

data = ImageClassifierData.from_csv(PATH, 'train', f'{PATH}labels.csv', test_name='test', val_idxs=val_idxs, suffix='.jpg', tfms=tfms, bs=bs)

# max_zoom: we will zoom in by up to 1.1 times

# ImageClassifierData.from_csv: last time we used from_paths, but since the labels are in a CSV file, we call from_csv instead.

# test_name: where the test set is; we need to specify it if we want to submit to Kaggle competitions

# val_idxs: there is no validation folder, but we still want to track how good our performance is locally, so we pass the indexes of the rows to hold out.
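This minimal NumPy sketch shows two kinds of augmentation. A random horizontal flip is a natural choice for side-on photos such as cats and dogs. A random zoom of up to `max_zoom` is done as a centered crop scaled back to the original size with nearest-neighbour sampling. It is a simplified stand-in, not fastai's implementation of `transforms_side_on` or `max_zoom`, and `random_flip_zoom` is a hypothetical name.

```python
import numpy as np

def random_flip_zoom(img, max_zoom=1.1, rng=None):
    """Randomly flip an H x W x C image left-right and zoom in by a factor in [1, max_zoom]."""
    rng = np.random.default_rng() if rng is None else rng
    if rng.random() < 0.5:
        img = img[:, ::-1]                                 # horizontal flip
    h, w = img.shape[:2]
    zoom = rng.uniform(1.0, max_zoom)
    ch, cw = int(round(h / zoom)), int(round(w / zoom))    # size of the centered crop
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = img[top:top + ch, left:left + cw]
    # Nearest-neighbour resize back to the original h x w
    rows = (np.arange(h) * ch / h).astype(int)
    cols = (np.arange(w) * cw / w).astype(int)
    return crop[rows][:, cols]

rng = np.random.default_rng(0)
img = rng.random((64, 64, 3))
aug = random_flip_zoom(img, max_zoom=1.1, rng=rng)
print(aug.shape)  # (64, 64, 3)
```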

Information

Transformations have to be done before observing trends in training data

Distribution of breed

# Code for Multi breed classification

label_df.pivot_table(index='breed', aggfunc=len).sort_values('id', ascending=False)
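The same "images per breed, largest first" count can be done with the standard library. This sketch runs on a small hypothetical excerpt with the `id,breed` columns that the `pivot_table` call above relies on.

```python
import csv
import io
from collections import Counter

# Hypothetical excerpt in the same shape as labels.csv (columns: id, breed)
labels_csv = io.StringIO(
    "id,breed\n"
    "a1,beagle\n"
    "b2,pug\n"
    "c3,beagle\n"
    "d4,boxer\n"
    "e5,beagle\n"
    "f6,pug\n"
)

counts = Counter(row["breed"] for row in csv.DictReader(labels_csv))
# Like pivot_table(index='breed', aggfunc=len).sort_values('id', ascending=False)
for breed, n in counts.most_common():
    print(breed, n)
```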

Read the head of the CSV file

# Code for Multi breed classification

label_df = pd.read_csv(label_csv)

label_df.head()

Folder Structure

- Cats & Dogs

# Observe file names

os.listdir(f'{PATH}valid/cats')[0]

# observe cat picture

img = plt.imread(PATH + 'valid/cats/' + os.listdir(f'{PATH}valid/cats')[0])

plt.imshow(img);

# Observe img shape

img.shape

b. Optional - for a structure similar to dog breed (CSV)

Create training & validation rows

For dog breed classification

# This is a little bit different from our previous dataset.

# Instead of a train folder with a separate folder for each breed of dog, it has a CSV file with the correct labels

# Open CSV file, create a list of rows, then take the length. -1 because the first row is a header.

# Hence n is the number of images we have.

# Path of the labels CSV file

label_csv = f'{PATH}labels.csv'

# number of total rows minus the header

n = len(list(open(label_csv)))-1

print (n)

# Creation of rows for the validation set, default = 20%

# get_cv_idxs ("get cross validation indexes") returns, by default, a random 20% of the rows (indexes, to be precise)

# to use as a validation set.

# You can also pass val_pct to get a different proportion.

val_idxs = get_cv_idxs(n)

print(len(val_idxs))
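This sketch holds out a random 20% of the row indexes. `random_val_idxs` is a hypothetical stand-in, not the source of `get_cv_idxs`, so the exact indexes it picks will differ. `n=1000` is an arbitrary example size.

```python
import numpy as np

def random_val_idxs(n, val_pct=0.2, seed=42):
    """Return a random val_pct share of the row indexes 0..n-1 (no duplicates)."""
    rng = np.random.default_rng(seed)
    n_val = int(val_pct * n)
    return rng.permutation(n)[:n_val]

val_idxs = random_val_idxs(1000)
print(len(val_idxs))   # 200
```

Fixing the seed keeps the validation split identical across runs, so results from different sessions stay comparable.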

a. Observe Folder Structure of path

# Example of cats and dogs

# list directories of 'PATH'

os.listdir(PATH)

['sample', 'valid', 'models', 'train', 'tmp', 'test1']

# list directories of 'Valid'

os.listdir(f'{PATH}valid')

['cats', 'dogs']

For dog breed

['train.zip', 'sample_submission.csv', '.ipynb_checkpoints', 'test', 'all.zip', 'labels.csv', 'train', 'test.zip']

4. Set Parameters

# Example 1: Binary Image Classification

# PATH is the path to the data

PATH= '/home/paperspace/fastai/courses/SelfCodes/Binary_Image_Classiff_cats_dogs/Binary_Image_Classiff_cats_dogs/data/dogscats/'

# sz is the image size. Start with a small size so that training is fast;

# once results improve, the size can be increased

sz=224

# Select the deep learning architecture

arch=resnet34

# For dog breed we try

arch = resnext101_64

# bs: batch size (default is 64)

bs=58 # start from 28

Information on Architecture

# different architectures have different number of layers, size of kernels, filters, etc.

# We have been using ResNet34 — a great starting point and often a good finishing point

# because it does not have too many parameters and works well with small dataset.

# Here is another architecture, ResNeXt, which was the second-place winner in last year’s ImageNet competition.

# ResNeXt50 takes twice as long and 2–4 times more memory than ResNet34

3. Check NVIDIA GPU framework

# An NVIDIA GPU with the CUDA programming framework is critical; the following command must return True

torch.cuda.is_available()

# Make sure cuDNN (NVIDIA's CUDA library for deep neural networks) is enabled; it improves training performance (preferred)

torch.backends.cudnn.enabled

2. Import all main external libraries

# Confirm Python Version 3

from platform import python_version

print(python_version())

import os

# Change the working directory so that the fastai libraries can be imported

os.chdir('/home/paperspace/fastai/courses/dl1')

%pwd

# Import all main external libraries

from fastai.imports import *

# Transformation library written from scratch in PyTorch.

# Its main purpose is data augmentation, but it can also be used for more general image transformations

from fastai.transforms import *

# Get a pre-trained model with the fast ai library

from fastai.conv_learner import *

# DL models by fastai (http://files.fast.ai/models/)

from fastai.models import *

# Dataset and data-loading utilities (e.g. ImageClassifierData, get_cv_idxs)

from fastai.dataset import *

# Module with the learning rate schedules used for SGDR (stochastic gradient descent with restarts)

from fastai.sgdr import *

# Module to display plots - such as learning rate

from fastai.plots import *

# Additional Libraries for Multi Class image classification

from fastai.imports import *

from fastai.torch_imports import *

Download pre-trained architecture weights

!wget http://files.fast.ai/models/weights.tgz

!tar -xvzf weights.tgz

1. Auto-reload modules

# Auto-reload modules in the Jupyter notebook (so that changes to *.py files don't require manual reloading):

%reload_ext autoreload

%autoreload 2

# Display the output of plotting commands inline in the Jupyter notebook

%matplotlib inline