VM270 6350

Chapter 5: Eigenvalues and Eigenvectors

Matrices

Diagonal

A square matrix whose entries off the diagonal are all zero (the diagonal entries themselves may be any scalars, including 0)

Upper Triangular

2nd Corollary from Ch. 6: Suppose V is a complex vector space and T ∈ L(V). Then T has an upper-triangular matrix with respect to some orthonormal basis of V.

This corollary essentially "cuts out the fat" of the two results below, combining them into a single statement for complex vector spaces.

Corollary from Ch. 6: Suppose T ∈ L(V). If T has an upper-triangular matrix with respect to some basis of V, then T has an upper-triangular matrix with respect to some orthonormal basis of V.

Theorem: Suppose V is a complex vector space and T ∈ L(V). Then T has an upper-triangular matrix with respect to some basis of V.

Theorem: Every operator on a finite-dimensional, nonzero, complex vector space has an eigenvalue.

Tu = λu

A non-zero vector u ∈ V satisfying Tu = λu is called an eigenvector of T (corresponding to λ).

null(T - λI) = the set of eigenvectors of T corresponding to λ, together with the zero vector.

A scalar λ ∈ F is an eigenvalue of T ∈ L(V) if there exists a non-zero vector u ∈ V such that Tu = λu.
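As a quick numerical illustration, the hypothetical helpers below find the eigenvalues of a 2 × 2 matrix as the roots of its characteristic polynomial and then check that Tu = λu. The 90° rotation of R^2 has no real eigenvalue, but over C it has the eigenvalues i and -i. This is consistent with the theorem that every operator on a finite-dimensional, nonzero, complex vector space has an eigenvalue. The eigenvector formula is an assumption of this sketch and only works when the top-right entry b is non-zero.

```python
import cmath

def eigenvalues_2x2(m):
    """Roots of det(T - tI) = t^2 - (trace T) t + det T for a 2x2 matrix."""
    (a, b), (c, d) = m
    trace, det = a + d, a * d - b * c
    disc = cmath.sqrt(trace * trace - 4 * det)   # complex square root, so complex roots are allowed
    return (trace + disc) / 2, (trace - disc) / 2

def eigenvector_2x2(m, lam):
    """A non-zero u with Tu = lam*u; this sketch assumes the top-right entry b is non-zero."""
    (a, b), _ = m
    if b == 0:
        raise ValueError("this sketch only handles b != 0")
    return [b, lam - a]

def apply(m, u):
    """Matrix-vector product, i.e. the operator T applied to u."""
    return [sum(m_ij * u_j for m_ij, u_j in zip(row, u)) for row in m]

T = [[0, -1], [1, 0]]   # rotation of R^2 by 90 degrees
for lam in eigenvalues_2x2(T):
    u = eigenvector_2x2(T, lam)
    assert all(abs(p - lam * q) < 1e-12 for p, q in zip(apply(T, u), u))   # Tu = lam u
    print(lam, u)
```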

Recall: An operator is a linear map from a vector space to itself.

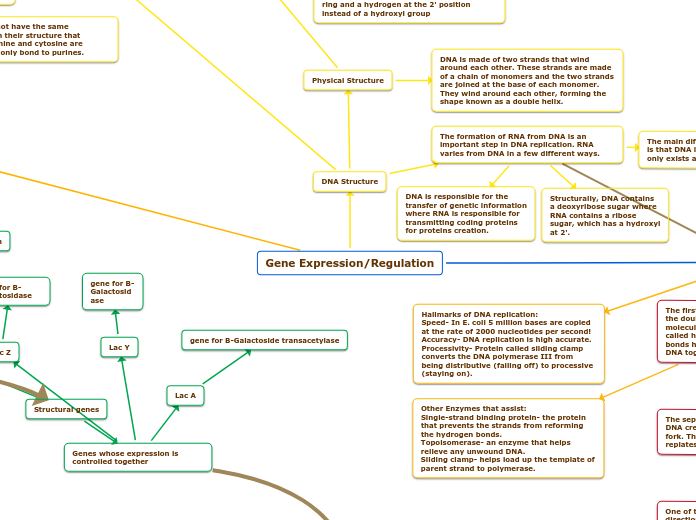

NOTE FOR PROF BARAKAT

I'm sorry if this mind map is messy. After a while I grew tired of constantly switching between underscores and italics, and all of that would quickly aggravate my carpal tunnel. So at some point I stopped typing things in the cleanest way and started typing them in the fastest way that was still easy enough for me to understand. After all, these maps were mainly for our own benefit in grasping the subject, weren't they?

Also, the boundaries were included to highlight what I believed to be the most fundamental or difficult topics we've gone over, so I could quickly find them.

Sorry for any inconvenience.

Chapter 6: Inner-Product Spaces

Orthogonal Decomposition

u = av + w.

Set w = u - av

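Take into account that ⟨u - av, v⟩ = 0, because w = u - av is supposed to be orthogonal to v (and hence to av).

Find a value for a in terms of u and v. Expanding gives ⟨u, v⟩ - a||v||^2 = 0, so a = ⟨u, v⟩ / ||v||^2 (this requires v ≠ 0).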

Substitute the value into u = av + (u - av)

This gives you an Orthogonal Decomposition.
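The recipe above can be checked on concrete vectors. This sketch assumes V = F^n with the Euclidean inner product ⟨u, v⟩ = u1·conj(v1) + ... + un·conj(vn). The names `inner` and `orthogonal_decomposition` are hypothetical helpers, not anything from the course.

```python
def inner(u, v):
    """Euclidean inner product on F^n: sum of u_j * conj(v_j)."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

def orthogonal_decomposition(u, v):
    """Write u = a*v + w with <w, v> = 0; v must be non-zero."""
    a = inner(u, v) / inner(v, v)            # a = <u, v> / ||v||^2
    w = [x - a * y for x, y in zip(u, v)]    # w = u - a v
    return a, w

a, w = orthogonal_decomposition([3.0, 4.0], [1.0, 0.0])
print(a, w)  # 3.0 [0.0, 4.0]
```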

Note: I originally wrote much more than this, but Mindomo deleted the branch and I couldn't redo it, so this is sufficient for now.

Theorems

If U is a subspace of V, then

V equals the direct sum of U and the orthogonal complement of U: V = U ⊕ U^⊥.
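Here is a small sketch of this decomposition. It assumes V = R^3 with the dot product and an orthonormal basis (e1, ..., em) of U already in hand, for example from Gram-Schmidt. The part of v in U is ⟨v, e1⟩e1 + ... + ⟨v, em⟩em, and whatever remains lies in U^⊥. The helper names are hypothetical.

```python
def inner(u, v):
    return sum(a * b.conjugate() for a, b in zip(u, v))

def project(v, orthonormal_basis):
    """<v, e1>e1 + ... + <v, em>em: the component of v in U = span(e1, ..., em)."""
    p = [0.0] * len(v)
    for e in orthonormal_basis:
        c = inner(v, e)
        p = [pi + c * ei for pi, ei in zip(p, e)]
    return p

basis_U = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]   # U = the xy-plane in R^3
v = [2.0, -3.0, 7.0]
u = project(v, basis_U)              # component of v in U
w = [a - b for a, b in zip(v, u)]    # component of v in U-perp
print(u, w)  # [2.0, -3.0, 0.0] [0.0, 0.0, 7.0]
```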

The Gram-Schmidt procedure

This procedure essentially allows you to transform a linearly independent list of vectors (v1, ..., vm) in V into an orthonormal list (e1, ..., em) of vectors in V, such that span(v1, ..., vj) = span(e1, ..., ej) for j = 1, ..., m.
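Below is a minimal sketch of the procedure, assuming V = F^n with the Euclidean inner product. It uses e_j = (v_j - ⟨v_j, e1⟩e1 - ... - ⟨v_j, e_{j-1}⟩e_{j-1}) divided by the norm of that vector. The function names are illustrative. Numerical libraries usually prefer a more stable variant, such as modified Gram-Schmidt or a QR factorization.

```python
import math

def inner(u, v):
    return sum(a * b.conjugate() for a, b in zip(u, v))

def norm(v):
    return math.sqrt(inner(v, v).real)

def gram_schmidt(vs, tol=1e-12):
    """Orthonormal (e1, ..., em) with span(v1..vj) = span(e1..ej) for each j."""
    es = []
    for v in vs:
        w = list(v)
        for e in es:                          # subtract <v_j, e_k> e_k for each earlier e_k
            c = inner(v, e)
            w = [wi - c * ei for wi, ei in zip(w, e)]
        n = norm(w)
        if n < tol:                           # w = 0 means v_j was in the span of the earlier v's
            raise ValueError("the input list is not linearly independent")
        es.append([wi / n for wi in w])
    return es

es = gram_schmidt([[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]])
for e in es:
    print(e)
```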

Parallelogram Equality

This is one of the easiest theorems to recognize when you need it in a proof or problem: if the expression involves u + v or u - v, there's a good chance you need this equality.

||u+v||^2 + ||u-v||^2 = 2 * (||u||^2 + ||v||^2)

Triangle Inequality

||u + v|| <= ||u|| + ||v||

Cauchy-Schwarz Inequality

If u, v ∈ V, then |⟨u, v⟩| <= ||u|| ||v||

Pythagorean Theorem

If u and v are orthogonal, then ||u + v||^2 = ||u||^2 + ||v||^2
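These four results are easy to spot-check numerically. The sketch below assumes the Euclidean inner product on C^3 and uses hypothetical helpers to verify each result on sample vectors. A numerical check like this is only an illustration, not a proof. The Pythagorean check uses a pair of orthogonal vectors because the theorem needs that hypothesis.

```python
import math

def inner(u, v):
    return sum(a * b.conjugate() for a, b in zip(u, v))

def norm(v):
    return math.sqrt(inner(v, v).real)

def add(u, v):
    return [a + b for a, b in zip(u, v)]

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

u, v = [1 + 2j, 3.0, -1j], [2.0, -1 + 1j, 4.0]
tol = 1e-9

# Parallelogram equality: ||u+v||^2 + ||u-v||^2 = 2(||u||^2 + ||v||^2)
assert abs(norm(add(u, v))**2 + norm(sub(u, v))**2 - 2 * (norm(u)**2 + norm(v)**2)) < tol
# Triangle inequality: ||u+v|| <= ||u|| + ||v||
assert norm(add(u, v)) <= norm(u) + norm(v) + tol
# Cauchy-Schwarz: |<u, v>| <= ||u|| ||v||
assert abs(inner(u, v)) <= norm(u) * norm(v) + tol
# Pythagorean theorem: only claimed for orthogonal vectors
p, q = [1.0, 1.0, 0.0], [1.0, -1.0, 5.0]
assert inner(p, q) == 0
assert abs(norm(add(p, q))**2 - (norm(p)**2 + norm(q)**2)) < tol
print("all four checks passed")
```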

Definitions

Conjugate transpose

Linear Functional

Orthogonal Projection

Norm

The length of a vector: ||v|| = √⟨v, v⟩

Adjoint

For T ∈ L(V,W), the adjoint T* satisfies ⟨Tv, w⟩ = ⟨v, T*w⟩ for all v ∈ V and all w ∈ W.

T* is a function from W to V, and in fact a linear map (T* ∈ L(W,V)).
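For V = C^n and W = C^m with the Euclidean inner products, the matrix of the adjoint (with respect to the standard orthonormal bases) is the conjugate transpose of the matrix of T. The sketch below checks the defining identity ⟨Tv, w⟩ = ⟨v, T*w⟩ on sample vectors. The helper names and the sample matrix are hypothetical.

```python
def inner(u, v):
    return sum(a * b.conjugate() for a, b in zip(u, v))

def apply(m, u):
    return [sum(a * b for a, b in zip(row, u)) for row in m]

def conjugate_transpose(m):
    """Entry (j, i) of the result is the complex conjugate of entry (i, j) of m."""
    return [[m[i][j].conjugate() for i in range(len(m))] for j in range(len(m[0]))]

T = [[1 + 1j, 2], [0, 3j], [4, -1j]]     # T: C^2 -> C^3 (a 3x2 matrix)
T_star = conjugate_transpose(T)          # T*: C^3 -> C^2 (a 2x3 matrix)
v, w = [1 - 2j, 0.5], [2j, 1, -3]

lhs = inner(apply(T, v), w)              # <Tv, w>, computed in C^3
rhs = inner(v, apply(T_star, w))         # <v, T*w>, computed in C^2
print(lhs, rhs)
```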

Orthogonal complement of U

The set of all vectors in V that are orthogonal to every vector in U. Denoted U^⊥.

Orthogonal

u, v ∈ V are orthogonal if ⟨u, v⟩ = 0.

Basically, this is when u and v are perpendicular to each other.

Inner-Product Space

Euclidean Inner Product

Def: A vector space V along with an inner product on V

What is an Inner-Product?

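Conjugate Symmetry

⟨u, v⟩ equals the complex conjugate of ⟨v, u⟩ for all u, v ∈ V

Homogeneity in first slot

⟨av, w⟩ = a⟨v, w⟩ for all a ∈ F and all v, w ∈ V

Additivity in first slot

⟨u + v, w⟩ = ⟨u, w⟩ + ⟨v, w⟩ for all u, v, w ∈ V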

Definiteness

⟨v, v⟩ = 0 if and only if v = 0

Positivity

⟨v, v⟩ >= 0 for all v ∈ V

Formal Definition

A function that takes every ordered pair (u, v) of elements of V to a number ⟨u, v⟩ ∈ F.

An inner product basically measures how much u points in the direction of v, for u, v ∈ V. It takes two vectors and produces a number.
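The standard example is the Euclidean inner product on C^n, ⟨(w1, ..., wn), (z1, ..., zn)⟩ = w1·conj(z1) + ... + wn·conj(zn). The sketch below spot-checks each defining property on arbitrary sample vectors. The helper name and sample values are hypothetical, and a passing check is an illustration, not a proof.

```python
def inner(u, v):
    """Euclidean inner product on C^n: u1*conj(v1) + ... + un*conj(vn)."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

u, v, w = [1 + 1j, 2.0], [3j, -1.0], [0.5, 2 - 1j]
a, tol = 2 - 3j, 1e-12

checks = {
    "conjugate symmetry": abs(inner(u, v) - inner(v, u).conjugate()) < tol,
    "homogeneity in first slot": abs(inner([a * x for x in u], v) - a * inner(u, v)) < tol,
    "additivity in first slot": abs(inner([x + y for x, y in zip(u, v)], w)
                                    - (inner(u, w) + inner(v, w))) < tol,
    "positivity": inner(u, u).real >= 0 and abs(inner(u, u).imag) < tol,
    "definiteness (v = 0 case)": inner([0, 0], [0, 0]) == 0,
}
print(checks)
```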

Chapter 7: Operators on Inner-Product Spaces

Isometry

S ∈ L(V) is an isometry if ||Sv|| = ||v|| for all v ∈ V.

Def: An operator is an isometry if it preserves norms.
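For example, a rotation of R^2 is an isometry, while scaling by 2 is not. The sketch below checks ||Sv|| = ||v|| for a rotation by an arbitrarily chosen angle. The helper names are hypothetical.

```python
import math

def apply(m, u):
    return [sum(x * y for x, y in zip(row, u)) for row in m]

def norm(v):
    return math.sqrt(sum(abs(x) ** 2 for x in v))

theta = 0.7                                   # any angle works
S = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta),  math.cos(theta)]]     # rotation by theta: an isometry
D = [[2.0, 0.0], [0.0, 2.0]]                  # scaling by 2: doubles every norm

for v in ([3.0, 4.0], [-1.0, 2.5]):
    print(norm(apply(S, v)), norm(v), norm(apply(D, v)))
```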

The Spectral Theorem

Overall Result

Whether F=R or F=C, every self-adjoint operator on V has a diagonal matrix with respect to some orthonormal basis.

Components

Real Spectral Theorem

Suppose V is a real inner-product space and T ∈ L(V). Then V has an orthonormal basis consisting of eigenvectors of T if and only if T is self-adjoint.
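Here is a 2 × 2 illustration of the real case. It assumes the self-adjoint operator is given by a real symmetric matrix [[a, b], [b, d]] with b ≠ 0, and the helper is hypothetical. The discriminant (a - d)^2 + 4b^2 is never negative, so both eigenvalues are real, and the normalized eigenvectors form an orthonormal basis of R^2.

```python
import math

def symmetric_eigen_2x2(a, b, d):
    """(eigenvalue, unit eigenvector) pairs of the real symmetric matrix [[a, b], [b, d]]."""
    if b == 0:
        raise ValueError("this sketch assumes b != 0; otherwise the matrix is already diagonal")
    disc = math.sqrt((a - d) ** 2 + 4 * b * b)    # never negative, so both eigenvalues are real
    pairs = []
    for lam in ((a + d + disc) / 2, (a + d - disc) / 2):
        u = [b, lam - a]        # (T - lam I)u = 0 because lam is a root of the characteristic polynomial
        n = math.hypot(*u)
        pairs.append((lam, [x / n for x in u]))
    return pairs

(l1, e1), (l2, e2) = symmetric_eigen_2x2(2.0, 1.0, 2.0)
print(l1, l2)                           # 3.0 1.0
print(e1[0] * e2[0] + e1[1] * e2[1])    # 0.0, the eigenvectors are orthogonal
```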

Lemmas

2

Suppose V ≠ {0} and T ∈ L(V) is self-adjoint. Then T has an eigenvalue.

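Important mostly for real vector spaces, since every operator on a finite-dimensional, nonzero, complex vector space already has an eigenvalue.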

1

Suppose T ∈ L(V) is self-adjoint. If a, b ∈ R are such that a^2 < 4b, then T^2 + aT + bI is invertible.
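Below is a numerical sanity check of this lemma on R^2. A symmetric matrix stands in for a self-adjoint T, and the matrix, coefficients, and helper names are chosen arbitrarily for illustration. The second part shows why self-adjointness matters. For the 90° rotation R, which is not self-adjoint, R^2 + I = 0 even though a = 0 and b = 1 satisfy a^2 < 4b.

```python
def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]

def quadratic_in(T, a, b):
    """The 2x2 matrix T^2 + aT + bI."""
    T2 = matmul(T, T)
    return [[T2[i][j] + a * T[i][j] + (b if i == j else 0) for j in range(2)] for i in range(2)]

def det2(m):
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

T = [[2.0, 1.0], [1.0, -1.0]]            # symmetric, hence self-adjoint on R^2
print(det2(quadratic_in(T, 1.0, 3.0)))   # 36.0, non-zero, so invertible

R = [[0, -1], [1, 0]]                    # rotation by 90 degrees, not self-adjoint
print(quadratic_in(R, 0, 1))             # [[0, 0], [0, 0]]
```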

Complex Spectral Theorem

Suppose V is a complex inner-product space and T ∈ L(V). Then V has an orthonormal basis consisting of eigenvectors of T if and only if T is normal.

What is it?

This theorem characterizes the operators on V that have a diagonal matrix with respect to some orthonormal basis of V: they are exactly the normal operators when F = C, and exactly the self-adjoint operators when F = R.

Self-Adjoint and Normal Operators

Normal

Prop: T is normal if and only if

||Tv|| = ||T*v|| for all v ∈ V

Definition

TT* = T*T

In other words, the operator commutes with its adjoint.
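The sketch below tests TT* = T*T and compares ||Tv|| with ||T*v|| on two sample matrices. It assumes the Euclidean inner product, so T* is the conjugate transpose, and the helper names are hypothetical. The 90° rotation is normal without being self-adjoint. The shear [[1, 1], [0, 1]] is not normal, and its two norms differ.

```python
import math

def conjugate_transpose(m):
    return [[m[i][j].conjugate() for i in range(len(m))] for j in range(len(m[0]))]

def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]

def apply(m, u):
    return [sum(a * b for a, b in zip(row, u)) for row in m]

def norm(v):
    return math.sqrt(sum(abs(x) ** 2 for x in v))

def is_normal(m, tol=1e-12):
    """True if m m* = m* m, entry by entry up to tol."""
    ms = conjugate_transpose(m)
    left, right = matmul(m, ms), matmul(ms, m)
    return all(abs(left[i][j] - right[i][j]) < tol
               for i in range(len(m)) for j in range(len(m)))

R = [[0, -1], [1, 0]]   # rotation by 90 degrees: normal but not self-adjoint
N = [[1, 1], [0, 1]]    # a shear: not normal
v = [2.0, -5.0]
print(is_normal(R), norm(apply(R, v)), norm(apply(conjugate_transpose(R), v)))
print(is_normal(N), norm(apply(N, v)), norm(apply(conjugate_transpose(N), v)))
```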

All self-adjoint operators are also normal.

Self-Adjoint

T ∈ L(V) is self-adjoint if T = T*

Chapter 3: Linear Maps

Matrix

Range

A.k.a. Image

Null Space

Proposition: If T ∈ L(V,W), then null T is a subspace of V

The subset of V consisting of the vectors that T maps to 0.

Types of Linear Maps

From F^n to F^m

Backward shift

Multiplication by x^2

Integration

Differentiation

Identity Map

Iv = v

Zero Function

0v = 0

Linear Map

Ex: T: V -> W maps each vector in V to a vector in W.

Def: A function T: V -> W from one vector space to another that satisfies the additivity and homogeneity properties below.

Properties of a Linear Map

Homogeneity

T(av) = a(Tv)

Additivity

T(u + v) = Tu + Tv
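For example, differentiation (one of the maps listed above) satisfies both properties. In this sketch a polynomial c0 + c1x + ... + cnx^n is stored as its coefficient list [c0, c1, ..., cn]. That representation and the helper names are assumptions made for illustration.

```python
def differentiate(p):
    """D(c0 + c1 x + ... + cn x^n) = c1 + 2 c2 x + ... + n cn x^(n-1)."""
    return [k * c for k, c in enumerate(p)][1:]

def add(p, q):
    """Coefficient-wise sum, padding the shorter list with zeros."""
    n = max(len(p), len(q))
    p, q = p + [0] * (n - len(p)), q + [0] * (n - len(q))
    return [a + b for a, b in zip(p, q)]

def scale(a, p):
    return [a * c for c in p]

p, q, a = [1, 2, 3], [0, -1, 0, 4], 5   # p = 1 + 2x + 3x^2, q = -x + 4x^3
assert differentiate(add(p, q)) == add(differentiate(p), differentiate(q))   # additivity
assert differentiate(scale(a, p)) == scale(a, differentiate(p))             # homogeneity
print(differentiate(p))  # [2, 6]
```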

Vocabulary

Intersection (∩)

Remember, it's the KFC.

The set of elements that are in both set A and set B.

Union (∪)

Remember, it's the stores on street A and B, including the KFC on the corner.

The set of elements that are in set A or set B (or both).

Operator

Def: A linear map from a vector space to itself.

Invariant

A subspace U of V is invariant under an operator T ∈ L(V) if Tu ∈ U for every u ∈ U, i.e., T maps U into itself.

Surjective

For T: V -> W, T is surjective if its range equals W.

Def: Onto

Injective

For T: V -> W, T is injective if, whenever u, v ∈ V and Tu = Tv, we have u = v.

Def: One to one

Chapter 2: Finite-Dimensional Vector Spaces

Isomorphic

Two vector spaces are isomorphic if there is an invertible linear map from one vector space onto the other one.

Invertible

A linear map T ∈ L(V,W) is invertible if there is a linear map S ∈ L(W,V) such that ST equals the identity map on V and TS equals the identity map on W.
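Here is a 2 × 2 sketch with V = W = F^2. It uses exact rational arithmetic from Python's `fractions` module, so the identity checks are exact. The usual formula (1 / (ad - bc))·[[d, -b], [-c, a]] for the inverse of [[a, b], [c, d]] is valid when ad - bc ≠ 0, and it produces an S with both ST = I and TS = I. The helper names are hypothetical.

```python
from fractions import Fraction

def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]

def inverse_2x2(m):
    """Inverse of [[a, b], [c, d]] via (1 / (ad - bc)) * [[d, -b], [-c, a]]."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("ad - bc = 0, so the matrix is not invertible")
    return [[d / det, -b / det], [-c / det, a / det]]

T = [[Fraction(2), Fraction(1)], [Fraction(5), Fraction(3)]]
S = inverse_2x2(T)
I = [[1, 0], [0, 1]]
print(matmul(S, T) == I, matmul(T, S) == I)   # True True
```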

Dimension

Bases

Linear Dependence and Independence

Linear Dependence

Linear Independence

Span

Linear Combination

Def: Composed of a list (v1, ..., v

Chapter 1: Vector Spaces

Direct Sum

Theorem

If U and W are subspaces of V, then V is the direct sum of U and W (V = U ⊕ W) if and only if V = U + W and U ∩ W = {0}

When every vector in V can be written uniquely as u + w with u ∈ U and w ∈ W, V is said to be the direct sum of U and W.

Sum

The sum U + W of subspaces U and W of V is the set of all vectors of the form u + w with u ∈ U and w ∈ W.

Notation/Example:

V = U + W when U, W are subspaces of V and every v ∈ V can be written as u + w with u ∈ U and w ∈ W.

Vector Spaces

Must have an addition function and a scalar multiplication function so that the following properties hold:

Distributive Properties

(a + b)u = au + bu for all a, b ∈ F and all u ∈ V

a(u + v) = au + av for all a ∈ F and all u, v ∈ V

1v = v for all v ∈ V

For every v ∈ V, there exists w ∈ V such that v + w = 0.

Additive Identity

There exists an element 0 ∈ V such that v + 0 = v for all v ∈ V

(ab)v = a(bv) for all a, b ∈ F and all v ∈ V

(u + v) + w = u + (v + w) for all u, v, w ∈ V

u + v = v + u for all u, v ∈ V

Definition

A set V along with an addition and a scalar multiplication on V such that the listed properties hold.

Complex Numbers

Created this branch in case I needed to reference something.

Properties

Division

w/z = w * (1/z) for w, z ∈ C with z not equal to 0.

z * (1/z) = 1 for z ∈ C with z not equal to 0.

Subtraction

w - z = w + (-z) for w, z ∈ C

z + (-z) = 0 for z ∈ C
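Python's built-in `complex` type follows these rules up to floating-point rounding, which is why the sketch below uses a tolerance for the division checks. The sample values are arbitrary.

```python
w, z = 3 + 4j, 1 - 2j
tol = 1e-12

assert abs(z * (1 / z) - 1) < tol        # z * (1/z) = 1 for z != 0
assert abs(w / z - w * (1 / z)) < tol    # w/z = w * (1/z)
assert z + (-z) == 0                     # additive inverse
assert w - z == w + (-z)                 # w - z = w + (-z)
print(w / z)                             # approximately -1 + 2i
```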

Distributive

Multiplicative Inverse

Additive Inverse

Identities

Associativity

Commutativity

Definition: C = {a + bi : a, b ∈ R}