by Eric Nic 2 days ago

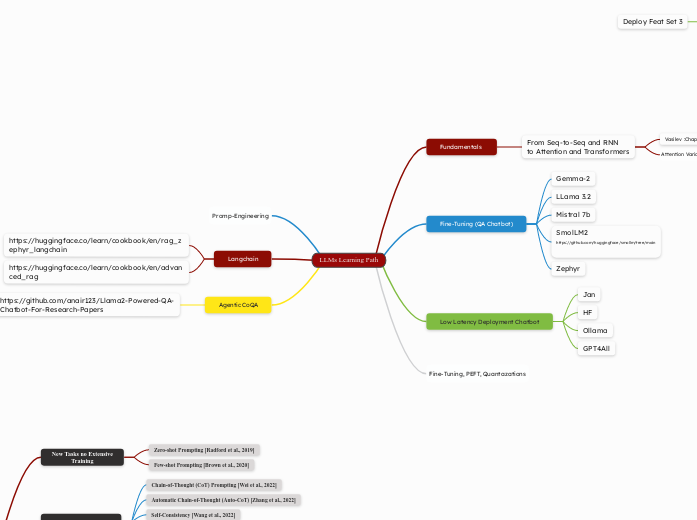

LLMs Learning Path

This learning path covers large language models (LLMs) and their applications. It looks at different types of AI-driven chatbots, including medical question-answering (QA) bots and recommendation systems.