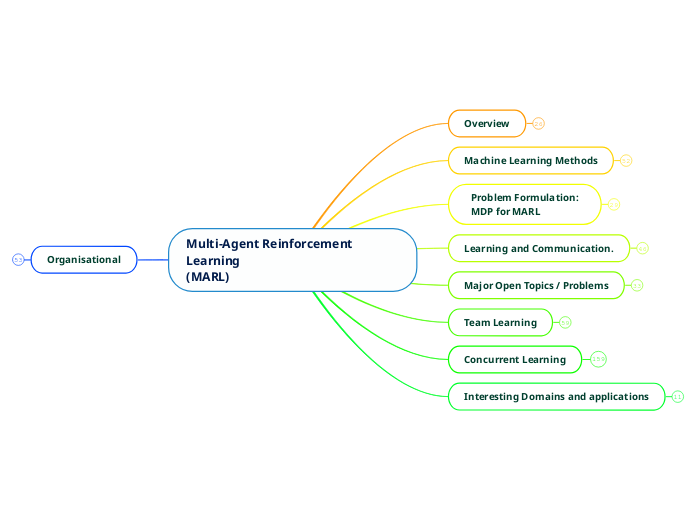

Multi-Agent Reinforcement Learning

(MARL)

Organisational

RL Theory Refresher

Single-Agent RL

Policy-Based Methods

Find the policy directly from searching the policy space, rather than state space.

go in the direction of the gradient to improve policy.

Find gradient of policy.

ie how the policy changes as a function of the actions you pick?

Essentially hill climbing.

Policy Gradient Theorem

Value-Based Methods

To find the optimal Q-function Q*.

To find π* from Q*:

π* = argmax_a (Q*(s, a)).

So at any state s, π* should return the action a that gives the best values of Q*(s,a)

To find Q*:

DQN

A neural network approach to estimate Q*.

Q-Learning algorithm

Approximate Q* (Q_hat) by updating its value via temporal-difference learning.

temporal error is given by the difference of the temporal difference target and the current value of Q_hat(s,a)

given by the reward gained and the max Q_hat at s_t+1, varying the action a.

update Q_hat(s,a) by updating current value with temporal difference error

Q* implies that the policy that is followed, ie the actions that are chosen in each state, is the optimal one.

V(s, a) function

This is evaluated in each state, therefore providing information as to which route to take at any moment to maximize expected, discounted, cumulative return.

Following policy π, V(s) evaluates each state s based on what other valued states it can reach from s.

Reward is used to judge the intrinsic value of a state to reach a solution to the MDP.

Q(s, a) function

Hence it evaluates each state-action pair while following π (so not all state-action pairs), based on what the agent could do from that state onwards.

Following policy π, ie a is dictated by π,

Q(s, a) is the expected, discounted cumulative reward for taking an action according to π in state s, evaluated at all states s.

Problem Formulation: Markov Decision Process

The goal of the agent is to solve the MDP.

Ideally it will find that policy π* which maximises the expected , discounted, cumulative reward.

To do this, it will find a policy function π: S -> Δ(A).

ie a strategy or a decision or a solution

A function that returns an action to pick based on the state the agent is in.

γ is [0, 1]: the discount factor that diminishes the values that are far away in time.

R: S x A x S -> RealNumber. Function returning a real scalar based on the agent's transition from s to s', as a result of action a.

P: S x A -> Δ(S) transition probability from state s, by taking action a to state s'.

A: the set of possible actions taken by the agent at any state

a is an action

S: the set of possible states in the environment

s is a state

Things to look up

references 11, 135 and 260 in Panait&Luke

Probability Simplex

Things I don't know/understand

Nash Equilibria

If two players Alice and Bob choose strategies A and B, (A, B) is a Nash equilibrium if Alice has no other strategy available that does better than A at maximizing her payoff in response to Bob choosing B, and Bob has no other strategy available that does better than B at maximizing his payoff in response to Alice choosing A.

(Wikipedia)

A situation where no-one has anything to gain from changing only their own strategy.

When all players have no better move than the current, as a response to other player's moves.

Optimal Control theory?

Deep Q-Learning (DQN)

Interesting Domains and applications

Herding

1 agent needs to herd it into a designated area.

1 agent is the 'sheep' which simply avoids obstacles

Cooperative target observation

multi-agent patrolling.

multi-agent object tracking, but while moving.

multi agent tracking of (multiple objects)

Cooperative navigation

navigating across an area without bumping into each other or other obstacles.

Foraging

assembling resources

Concurrent Learning

Teammate modelling, for learners to model what other learners will do.

Sekaran and Sen investigate the effect of reciprocity on multiagent systems.

cooperation

for cooperation:

so if the ratio of the cost of helping to the benefit it will impart is smaller than the acquaintanceship.

degree of acquaintanceship > ratio of cost of altruistic help TO the benefit to the recipient

degree of acquaintanceship

ie how acquainted they are by giving and receiving help.

probability that an agent knows another's reputation

reputation

reciprocity improves an agent's reputation.

indirect reciprocity

an agent gives help and so will expect to receive help in the future.

direct reciprocity

A helps B, so A will expect help in the future from B

They show that in this scenario those agents that don't cooperate are worse off.

they apply a stochastic decision-making algo that encourages reciprocity while avoiding being taken advantage of.

Wellman and Hu on initial beliefs

they also suggest that the best policy for learners is to minimize their assumptions about other learners' policies.

An alternative:

find out who is cooperating, competing or in another form of relationship.

Depending on beliefs, result can be better or worse.

they suggest that resulting behaviours of learning other agents models is highly dependent on agents' initial beliefs.

Banerjee et al, Mukherjee and Sen experiment of 1-level agents

this produces some form of trust

agents trust each other since they know how they reason?

1-level agents that each other's probability distribution.

Infinite recursion

Categorisation of agents based on their belief of on the adaptability of other agents.

in general N-level agent models teammates as (N-1)-level agents

1-level agent:

I guess it assumes agents are just slightly learning & adapting.

models its teammates as 0-level.

0-level agent:

thinks none of its teammates as performing any learning activity nor adaptive.

Agent A predicts Agent B which predicts Agent A which predicts....

Chalkiadakis and Boutilier's Bayesian Learning for updating models of other agents.

based on these models, agents modify their behaviour to better cooperate with other agents.

Learning about other agents in the environment so as to make good guesses about their expected behaviour.

Credit Assignment Problem

For sequences of problems that require completion of sequential tasks. Eg: Get info A (done by agent 1), and with A solve the problem (done by agent 2)

Averaging Rewards over sequences of tasks, rather than discounting.

Cooperation is achieved when agents that are further away also get rewarded.

More immediate reward ensures that agents further away from the solution, due to workflow get less reward.

Wonderful Life Utility

so I guess:

it rewards important contributions

it rewards participation

reward based on how the team would accomplish the task without the learner.

Markov Chain + Kalman Filtering

The agent may use a Kalman filter to separate these two signals, and compute its real reward from participating in completing the global task.

In CV, it is used to try to track an object as it passes behind an occlusion.

A Kalman filter can be used to estimate an unknown quantity based on noise or other variables, by estimating a joint probability distribution on these variables.

The learner assumes the reward is a sum of

Some Markov process that estimates the contribution of teammates.

its own contribution to solving the task

Social Reinforcement

2. Vicarious Reinforcement

this allows to balance between local rewarding and global rewarding.

so, reward is received whenever other learners are rewarded.

Vicarious = feeling through/for others.

1. Observational Reinforcement

obtained when a learner reproduces the behaviour of another learner.

may improve overall behaviour by reproducing rare behaviour.

Local Reward

Local reward increases the homogeneity of the learners.

This might lead to more general solutions, so that any agent in any circumstance might find solutions.

With local rewards, learners tend to converge on similar solutions.

This promotes greediness in learners. They won't cooperate, because they don't have enough incentive to.

Reward each agent based only on their performance. Ignore team efforts.

Global Reward

Problems:

Situations where reward has to be assigned differently because global reward can't be computed.

ie: reward for all agents cannot be gathered together.

Not enough individual feedback to learn a solution.

Some learners end up doing most of the work, others just become lazy.

Split team reward equally among learners

divide the reward so that the whole team benefits when 1 team member is rewarded (or punished).

how to reward individual learners as a team

Dynamics of Learning

Related observations from competitive learning.

cyclic behaviours

in competitive learning, agents may get stuck in loops rather than building an arms race.

agents circle about one another due to non-transitive relationships

ie they get stuck in loops.

Red-queen effect

it could be that the rest of the team is performing worse and the single learner is getting locally rewarded for better performance.

as a learner improves with respect to the reward it obtains, is the entire team improving as well?

Loss of gradient

Related to laziness and transfers over to cooperative scenarios.

when one learner dominates the other. There is no further learning, since neither learner can get enough reward to adapt.

"Host-parasite" coevolution

1 target learner adapts to test cases, while a secondary competitive learner adjusts the test cases.

competitive concurrent learning is better at wider problem test cases.

The theory that competition pushes players to innovate.

as agents are pitted against each other, they are forced to improve to win.

General Sum Games

WoLF: Win or Learn Fast

to large values when losing.

small when winning

varies the learning rate from:

Correlated-Q algo

based on "correlated algorithm" solution concept

Friend-or-Foe Q-Learning

or an adversarial one for competitive agents.

either find a coordination equilibrium for cooperative agents

EXORL algo

Learns optimal response to adaptive and fixed policies of other agent.

Bowling, Hu and Wellman distributed RL algo

Nagayuki et al present an approach where agents approximate other agent's policies rather than their q-values.

Agents also learn q-values for all other agents.

Q-Learning has been applied to extensive-form games.

Normal form: players act simultaneously.

Extensive form: Players alternate in performing actions

General sum games are non-zero sum games. This means that while players compete for score, the total score doesn't necessarily sum to 0.

At the same time, they are also not fully cooperative in the sense that players gain score for themselves, not everyone in the game.

Fully cooperative scenarios

Cooperative CoEvolutionary Algorithms (CCEAs)

Agents tend to co-adapt to other agents (including poor collaborators), rather than trying to optimise their performance, by choosing the ideal collaborator.

Solution to both split problems into subproblems, and concurrently search for solutions to the subproblems.

Peshkin et al. distributed RL approach to partially observable fully cooperative stochastic games.

Their algo converges to local optima, which are not necessarily nash equilibria.

POMDPs: Partially Observable MDPs are used to extend stochastic games by limiting an agent's ability to observe the state of the environment.

Optimal Adaptive Learning Algo

Guaranteed to converge to Nash Equilibria

This converges to Nash equilibria, it's been proved.

Collaboration may not be achieved, and agents reach a nash equilibrium whose outcome is inferior to collaboration among all agents.

Not necessarily the optimal solution.

Scenarios where global reward is assigned. Equally divide reward among all agents.

Limited tools available to model and analyse the dynamics of concurrent learners.

Vidal and Durfee

by combining this info you can approximate the error in the agents' decision function during learning.

another system that uses parameters

rate at which other agents are learning

learning and retention rates

rate of behaviour change

Evolutionary Game Theory

has been used to:

study trajectory of concurrent Q-Learning processes.

visualise basins of attraction to Nash Equilibria

study cooperative evolution

How does it work??

Understanding the impact of co-adaptation on the learning process.

With multiple learners, adaptations to the environment and other agents by a single learner, become invalid when other agents change simultaneously.

One approach: treat the other learners as part of a dynamic enviornment.

BUT: That would mean that as a learner adapts to other learner's adaptations, they'd be changing the environment in a way that makes the original learner's adaptation invalid.

The learners' adaptations would include obsolete assumptions on other agent's behaviour, since the latter would have changed as well.

however, the environment becomes non-stationary.

this violates assumptions of machine learning methods.

ie: values of action vary with time, without having taken that action

environment that changes while training due to other agents.

Concurrent learning is preferable in those domains where decomposition is possible and helpful.

breaking the learning process into smaller chunks can allow for more flexible use of computational resources.

differing techniques, and optimizations.

If the problem can be broken down into sub-problems, then the overall search space can be broken down too, and assigned to different learners, hence reducing the search space and computational complexity.

Definition

Agents are truly independent.

The idea: reduce search space by dividing it into N separate spaces.

multiple concurrent learners.

1 learner for each agent/team member.

Team Learning

Hybrid Team Learners

Automatically Defined Groups (ADG)

a grouping technique to automatically discover the optimum number of groups and their compositions.

From research, this method seems to push for the division of labour by the agents.

So one learner decides to use the split up teams of agents as opposed to not.

it seems different teams would end specialising in differing tasks.

All agents in a squad have the same beahviour.

homogenous

set of agents is split into several squads.

heterogenous

mix of homogenous and heterogenous.

Disadvantages

centralization of the learning algo

all resources need be available in single place where training/computation is performed...

applies poorly to space?

EC can do better.

This can be too much for RL techniques.

Learner usually has to explore very large problem spaces, since multiple agents can be in different states.

Heterogenous Team Learners

"One-Population" Coevolution

(EC)

Comparing how the formed heterogenous teams perform without the specialised agents

reports of appearances of squads and 'subspecies' that I guess are specialised.

a single population is evolved, but agents are tested by pairing them with individuals chosen at random to form a heterogenous team.

don't really get it.

Perdator-prey pursuit domain

They suggest this to be due to deadlocks caused by identical behaviour between agents.

heterogeneity can help despite the rather homogenous domain.

all predator could be homogenous

all prey could be homogeneous

Restricted Breeding

(Evolutionary Computation)

Restricted breeding works best in situations where agents are grouped by teams that end up specialising.

Cross breeding between teams, but only agents that are similarly specialised.

I infer that to promote specialisation, this allows the breeding of agents more and more specialised in one direction.

agents can only breed with similar agents from other teams.

make teams of agents as differing candidate solutions to the problem.

better solutions through agent specialisation.

need to cope with large search space

One learner can develop unique behaviour for each agent.

Advantages

Learner learns how to best use the entire team, so there is no need to get into inter-agent credit assignment. (which can be complex)

Since it's single-learner, you basically apply Single-Agent machine learning.

Homogenous Team Learners

Cellular Automation (CA) as a training method.

CAs have many of the hallmarks of MAS.

and a state-update rule, that dictates agent behaviour.

so all agents have the same behaviour:

homogenous.

each with their own internal state

consists of a neighbourhood of agents.

There is debate over whether Machine Learning approaches are suitable to homogenous learning.

with a very large number of agents heterogenous is just too complex, homogeneous is better.

search space is drastically reduced.

ie: all agents have the same behaviour.

One learner develops the same single-agent behaviour for every agent on the team.

Emergent Complexity of MAS

Challenges lie in the interaction of agents after 1 learner, since you cannot expect how they'll behave.

Single learner is not representative of 1 agent, but of the entire team of agents.

So it learns solutions on how to tell the entire team how to behave.

Major Open Topics / Problems

Problem Decomposition

These are all developed for Single-Agent Learning.

how to parallelise the learning of these sub-behaviours

how multi-agent sub-behaviours might interact

Not much has been done to decompose tasks into sub-behaviours for multiple agent systems

2. Decompose the problem heuristically. Create subproblems and sub-behaviours for the agents to learn.

Fitness switching

Adaptively changes the reward to emphasise those behaviours that have made the least progress.

Shaping

gradually changes the reward function to favour more complex behaviour.

Layered Learning

then learn increasingly complex behaviours on top

learn basic behaviours first

1. Provide more "powerful" actions that reduce the amount of actions, and imply more behaviour.

ie as opposed to turn right, go straight and turn left to reach location A

Go to location A.

Therefore, use domain knowledge to simplify the state space.

the idea that a complex problem can be split into simpler sub problems.

Adaptive Dynamics and Nash Equilibria

Authors argue that agents should be less rational and focus more on coordination.

rational agents may learn optima if they trust their teammates share a common goal of achieving better team behaviour.

Due to this, learning methods tend to converge to Nash Equilibria and not the optimal solutions.

This "rational" movement towards nash equilibria can be a movement away from optima.

Rational because learners prefer to be safe at nash equilibria instead of risking a worse reward by chasing an overall optimal solution.

as agents learn, their adaptations to one another change the environment of all agents.

How can agents learn in an environment where "the goalposts keep moving"?

Scalability

Emergent behaviour causes major difficulty in the prediction of other agents, which causes difficulty for agents to learn concurrently.

Scalability is an aspect that needs to be evaluated seriously, as MAS quickly scale up and can become too difficult to tackle.

Authors believe that a large, heterogeneous, strongly intercommunicating MAS cannot be learned.

The dimensionality of the search space grows rapidly with

size of the network of interactions.

number of agents

number and complexity of behaviours

Learning and Communication.

Indirect communication

Using agents' own body to convey meaning

Body, moves or location used to convey meaning between agents.

Pheromone-based communication

Can agents learn to emit pheromones in rational locations?

AntFarm

successfully applied to forage for food around obstacles.

a system that combines EC and pheromone communication.

EC approach to tune agent policy involving multiple digital pheromones.

RL algos adopting a fixed pheromone laying procedure and use the amounts to infer sensor information.

Typical for foraging problems

Like insects that emit locally detectable chemicals, which can be used to infer a meaning. Communication can happen in this way.

implicit transfer of information as the environment is modified by agents.

think of leaving footsteps on the ground or arranging objects in the environment intentionally to communicate

communication that occurs inevitably as the agent changes the environment

Direct Communication

learning a language for communication.

in this situation, Ackley and Littman have shown that rewarding agents for communicating is not necessary.

I guess communication is just that valuable to increasing reward.

agents learn to communicate anyway.

Steels has reported emergence of coherent lexicons between agents.

Purposeless communication channel

A communication channel is provided, but its purpose is not specified. It is up to the agents to define it, through learning.

Shared utility table and/or joint policy table

Berenji and Vengerov suggest that simultaneous update of a central policy reduces convergence to sub-optimal behaviours.

This is communication: learners are teaching each other learned information.

each agent contributes to it, as separate learners.

Communication can improve team performance in many ways.

sharing knowledge related to agents' current policies, for cooperation.

(state, action, utility)

an agent can share information about past experiences in the form of episodes (state, action, reward) that others may not have experienced yet.

an agent can inform others of its current state

Intentional, decided upon communication between agents.

Communication can significantly increase a learner's search space.

it seems there is a lack of research on using selective communications only when necessary.

Is it really a Multi-Agent System is agents communicate?

real world applications usually don't allow for such limitless communication.

assuming agents can trade information without restrictions, they essentially become effectors for each other.

Stone and Veloso argue that communication converts a MAS to something isomorphic: a Single-Agent system

Definition of communication

and deduce information from them.

such that other agents can perceive the modifications

A modification of the environment,

Problem Formulation:

MDP for MARL

Policy-Based MARL

the policy gradient theorem applies here too.

derivative to figure out in which direction to climb the hill?

use a neural network for function approximations....

a neural network can figure it out?

Policy-based algorithms with function approximations to deal with curse of dimensionality.

Value-Based MARL

unfortunately this is just hiding how to actually do all this stuff behind unexplained functions.

solve()

returns a part of an agent's optimal policy at some equilibrium point. ???

eval()

gives an agent's expected long-term reward under some equilibrium, while considering other agent's interests.

each agent must evaluate the situation of the game by considering other agents' interests, as represented by their Q-functions.

This has the curse of dimensionality. Too many state-action pairs.

same temporal difference learning, but new operators are included to represent the consideration of other agents.

Solving a stochastic game.

To solve a stochastic game and maximise their cumulative discounted reward agents must consider the other agent's actions when determining their policies.

Rewards are the values in the matrix, if the matrix dimensions represent each agent's possible actions.

consider them as a sequence of normal-form games.

Actions are taken simultaneously by players

normal-form games are games that can be represented in a matrix. ie player A's actions in columns, player B's in rows, and the outcome is based on the A's and B's actions.

Hence, it contains

γ is the discount factor, representing far away states as less impactful.

R^i is the reward given to agent i. A scalar value to the ith agent, when the transition from s to s' occurs, by the joint actions of all agents.

P is the transition probability from s to s'. The action taken is a vector containing all agents' actions. That's what brings the environment into a new state.

A^i: the set of actions of agent i.

S is the set of states, this is shared by all agents.

N is the number of agents.

Same as an MDP, but it's expanded to keep track of all agents.

Machine Learning Methods

Stochastic Search Methods

"Old Style Search"

Stochastic Hill-Climbing.

Simulated Annealing

Evolutionary Computation (EC)

Coevolutionary Algorithms (CEAs)

Cooperative coevolution:

succeed together or fail together.

Competitive coevolution:

benefit at the expense of other individuals

fitness then becomes context-sensitive and subjective.

fitness is based on interaction with other individuals

More than one population of individuals. They interact with individuals from the other populations.

examples

genetic programming

evolving actual computer programs

evolution strategies

genetic algorithms

Generally algos work like this:

Generations.

Keep going until a good enough individual is found.

Fittest members are chosen, bred and produce 'children' that are then added to population replacing worse individuals.

maybe some random mutation can be introduced

Population is then evaluated according to a 'fitness' function.

function that evaluates how good they are at solving the problem.

Begin with a random population of 'individuals' (candidate solutions.)

Applies Darwinian models of evolution to refine populations of candidate solutions to a given problem.

definition

Not really clear on pros and cons compared to RL.

search the space of possible solutions without the use of value functions.

directly learn behaviours without using value functions.

reward-based

RL Methods

RL methods have theoretical proofs of convergence, but these usually don't hold in MAS.

Decision-making

Challenges

Consider other agents' choices in the environment.

Not only consider immediate value in a decision, but also its impact on future choices

Apart from converting data into knowledge,

decisions need to be taken based on this knowledge.

2 main methods:

Policy-gradient

Parametrise the policy directly, therefore approximating it directly.

Value-based

Compute the expected return at a state or (state, action) pair and then the policy chooses based on this, at any state.

reinforcement information is provided after actions are taken in environment.

critic provides an assessment of the learner's output.

ie reward based on actions taken

Unsupervised

no feedback is provided

Supervised Learning

Deep Learning NNs

Through these representations, the software can train itself to perform new tasks.

Can find representations in high-dimension data.

ie they transform input data into representations, effectively transforming data into actionable knowledge.

the teacher or 'critic' provides the correct output

Overview

Types of Games

Stochastic Games

the state depends on stochastic function of the old state and the player's interaction.

Eg: Chess?

At any point the game is in a state

extensions of repeated games.

Repeated Games

the game consists in a series of interactions between players.

Agents

based on information received from the environment.

performing actions in its environment

that exhibits a high degree of autonomy

Computational mechanism

Multi-Agent Systems (MAS):

Multi-Agent Environment

agents may not know everything about the world that other agents know

these interact with one another

There is more than 1 agent

Focus on cooperative multi-agent learning

joint behaviour of autonomous agents whose interactions can provide the emergence of a solution.

Multi-Agent Reinforcement Learning (MARL)

by interacting with environment and other agents.

whose goal is to maximise their long-term reward

sequential decision-making in a stochastic environment with other agents

Distributed Systems:

for decentralised solutions to problems

multiple entities work together to cooperatively solve problems.