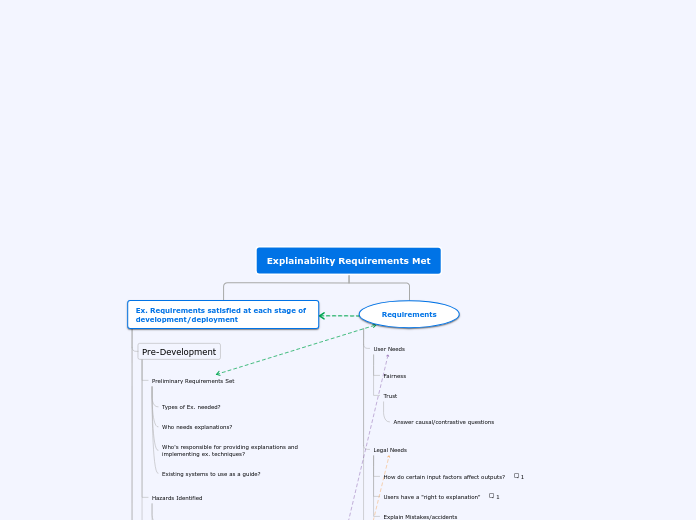

Explainability Requirements Met

Requirements

Technical Needs

Contestability

Predictability

Do explanations allow us to predict model behavior?

Justification

Transparency

Relate parameters to human-understandable concepts

Relate inputs to outputs

Robustness

Explainability

“Global methods generate evidence that apply to a whole model, and support design and assurance activities by allowing reasoning about all possible future outcomes for the model. Local methods generate explanations for an individual decision, and may be used to analyse why a particular problem occurred, and to improve the model so future events of this type are avoided.” R. Ashmore, R. Calinescu, and C. Paterson, “Assuring the machine learning lifecycle”

Global

Generate explanations about the whole logic of the model

Make predictions about future outputs

Inform design

Local

Can individual decisions be explained?

Improve/correct model

Analyse why a particular problem occurred
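The global/local distinction above can be made concrete with a small, hypothetical example. The sketch below assumes a linear scoring model, chosen only because both kinds of explanation are exact for it. Ashmore et al. do not prescribe this model. The class `LinearModel`, its methods, the feature names and the numbers are all illustrative. The weights act as a global explanation because they hold for every input. The per-feature contributions `w_i * x_i` act as a local explanation of one decision.

```python
from dataclasses import dataclass


@dataclass
class LinearModel:
    """Hypothetical linear scoring model: score = bias + sum(w_i * x_i)."""
    weights: dict[str, float]
    bias: float

    def predict(self, x: dict[str, float]) -> float:
        return self.bias + sum(w * x[name] for name, w in self.weights.items())

    def global_explanation(self) -> list[tuple[str, float]]:
        # Global: the weights apply to every possible input, so they describe
        # the whole logic of the model and let us predict how any output
        # changes when a feature changes by one unit.
        return sorted(self.weights.items(), key=lambda kv: abs(kv[1]), reverse=True)

    def local_explanation(self, x: dict[str, float]) -> dict[str, float]:
        # Local: each feature's contribution to this single decision.
        # The contributions plus the bias sum exactly to predict(x).
        return {name: w * x[name] for name, w in self.weights.items()}


# Illustrative values only (hypothetical features and weights).
model = LinearModel(weights={"income": 0.5, "debt": -1.5, "age": 0.25}, bias=1.0)
applicant = {"income": 4.0, "debt": 2.0, "age": 2.0}

print(model.global_explanation())            # [('debt', -1.5), ('income', 0.5), ('age', 0.25)]
print(model.local_explanation(applicant))    # {'income': 2.0, 'debt': -3.0, 'age': 0.5}
print(model.predict(applicant))              # 0.5
```

For non-linear models, neither explanation is exact in this way. Local contributions then have to be approximated with a dedicated technique such as LIME or SHAP, which this sketch does not attempt.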

Legal Needs

Explain Mistakes/accidents

Users have a "right to explanation"

B. Goodman and S. Flaxman, “European Union regulations on algorithmic decision-making and a ‘right to explanation’,” AI Magazine, vol. 38, no. 3, 2017.

How do certain input factors affect outputs?

R. Budish et al., “Accountability of AI under the law: The role of explanation.”

User Needs

Trust

Answer causal/contrastive questions

Fairness

Explainability requirements satisfied at each stage of development/deployment

Deployment

Legal requirements met

Users trust model

Users satisfied with explanations

Acceptable safety is assured in case of misuse and unavoidable accidents

Potential harm from adversarial attacks is mitigated

Potential harm from accidents is mitigated

Global explainability allows predictability, so accidents can be anticipated

Explainability allows contestability to challenge harmful model decisions

Potential harm from misuse is mitigated

Explainability allows insight to foresee and limit potential for misuse

During Development

Model Verification

Explanations used to justify results provided by model

Explanations used to provide insights into the operational domain

Errors identified and appropriately explained/corrected

Model Learning

Explainability techniques planned

Techniques provide appropriate types of explanation

Techniques appropriate for model

Model Selection

What types of explanation are possible for candidate models?

Do they meet requirements?

Could a more explainable model be used?

Accuracy/explainability tradeoffs

Pre-Development

Evidence to support sufficiency of explainability

Evaluation of explainability techniques planned

e.g., human tests

Hazards Identified

What types of explainability needed to mitigate hazards?

Risks of a lack of explainability?

Preliminary Requirements Set

Existing systems to use as a guide?

Who is responsible for providing explanations and implementing explainability techniques?

Who needs explanations?

Types of explanation needed?