By bosio mattia, 13 years ago.

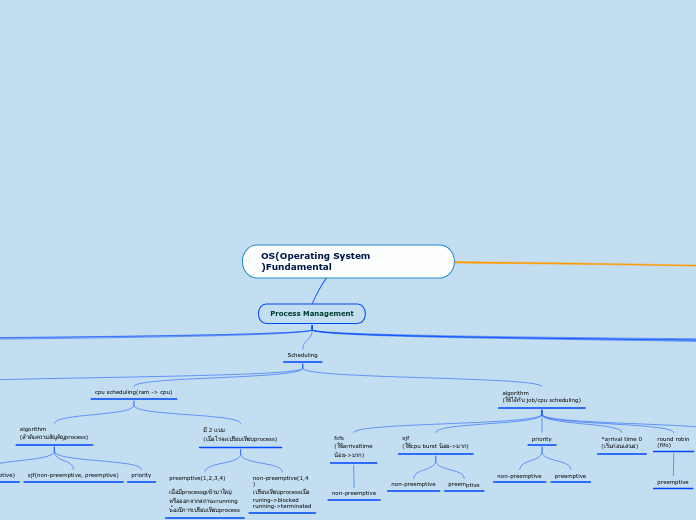

Article map

In microarray classification, the number of samples is typically small compared to the very large number of genes. Filter approaches based on univariate feature selection are commonly used, but they risk overfitting to the training data.

- Computation
  - Time
    - Tree construction time? Need to include that?
    - Feature selection: 99% of the time
  - Time scalability with features
    - IFFS SW: linear x Wsize
    - IFFS: nonlinear and infeasible
    - SFFS: linear
- Is our algorithm better?
  - Common ground
    - MAQC II set
    - MCC value comparable
    - Many samples
    - Contemporary
  - YES for
    - MCC mean value
    - MCC value
    - Mean value across endpoints
- Metagenes are useful?
  - Treelet / Euclidean?
  - Is the improvement enough to compensate for tree construction?
  - PROS
    - Summary of several genes
    - Expanded feature space
    - Interpretable combinations
    - Common behaviour
- NEW ELEMENT? Reliability score useful?
  - Score parameter
    - Include it in the article?
    - Selection: how?
  - Classifier
    - Transparent
    - Any other works; only the distance from the boundary is needed
    - LDA
      - Interpretable
      - Robust
      - Simple
  - NEW ELEMENT! More useful for a small number of samples
  - After that, is it totally related to the error rate?
  - Gives more information about the data distribution with respect to the classifier boundary than the ERROR RATE alone
- Microarray classification
  - Overfitting to the training data
    - Infer the data distribution from the training set
  - Many filter approaches
    - Problem of univariate feature selection
  - Few samples with respect to the number of genes
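
The map compares algorithms by their MCC value and by the mean MCC across endpoints. As a reminder of what that number measures, here is a minimal sketch of the standard Matthews correlation coefficient for binary labels, plus a plain average over endpoints. The function names `mcc` and `mean_mcc` and the toy labels are illustrative assumptions, not taken from the map or from the MAQC II protocol.

```python
import math


def mcc(y_true, y_pred):
    """Matthews correlation coefficient for binary labels encoded as 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Common convention: return 0 when a row or column of the confusion matrix is empty.
    return (tp * tn - fp * fn) / denom if denom else 0.0


def mean_mcc(endpoints):
    """Average MCC over a list of (y_true, y_pred) pairs, one pair per endpoint."""
    scores = [mcc(y_true, y_pred) for y_true, y_pred in endpoints]
    return sum(scores) / len(scores)


# Hypothetical toy data: two endpoints with four samples each.
endpoints = [
    ([1, 1, 0, 0], [1, 1, 0, 0]),  # perfect prediction
    ([1, 1, 0, 0], [0, 0, 1, 1]),  # fully inverted prediction
]
print(mean_mcc(endpoints))
```

Unlike the raw error rate, MCC accounts for all four cells of the confusion matrix, which matters when the classes are unbalanced.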