arabera bosio mattia 13 years ago


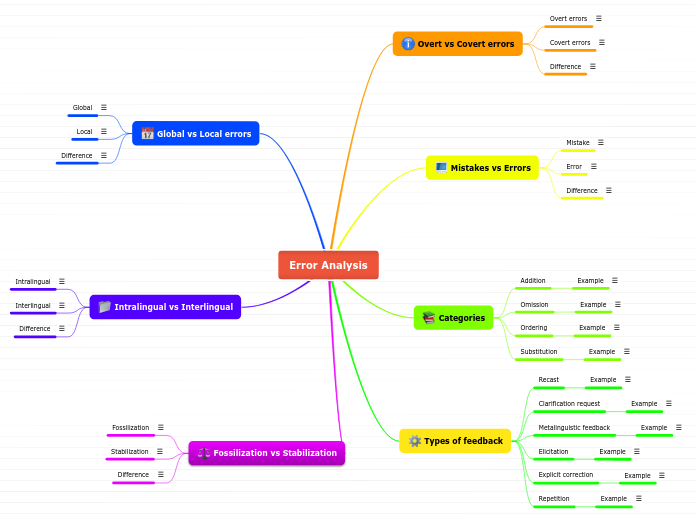


In microarray classification, datasets typically contain very few samples compared with the vast number of genes measured. Univariate filter approaches, which score each gene independently against the class labels, are often employed for feature selection. With so few samples, however, they risk overfitting to the training data.
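The sketch below makes the univariate filter idea concrete. It rests on several assumptions. The scoring statistic (an absolute Welch t-statistic), the nearest-centroid classifier, and all function names (`t_scores`, `select_top_k`, `loocv_accuracy`) are illustrative choices, not the article's method. It also shows one common way to keep the filter's overfitting from inflating accuracy estimates: re-running gene selection inside each cross-validation fold, so the held-out sample never influences which genes are chosen.

```python
import math
from statistics import mean, variance


def t_scores(X, y):
    """Absolute Welch t-statistic of each gene (column) for labels y in {0, 1}.
    Assumes at least two samples per class (variance() needs two points)."""
    scores = []
    for j in range(len(X[0])):
        a = [row[j] for row, label in zip(X, y) if label == 0]
        b = [row[j] for row, label in zip(X, y) if label == 1]
        se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
        scores.append(abs(mean(a) - mean(b)) / se if se > 0 else 0.0)
    return scores


def select_top_k(X, y, k):
    """Univariate filter: indices of the k genes with the largest t-scores."""
    scores = t_scores(X, y)
    return sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)[:k]


def nearest_centroid_predict(X_train, y_train, x, genes):
    """Assign x to the class whose mean profile on the selected genes is closest."""
    best_label, best_dist = None, math.inf
    for label in sorted(set(y_train)):
        rows = [r for r, l in zip(X_train, y_train) if l == label]
        centroid = [mean(r[g] for r in rows) for g in genes]
        dist = sum((x[g] - c) ** 2 for g, c in zip(genes, centroid))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


def loocv_accuracy(X, y, k, select_inside=True):
    """Leave-one-out accuracy. With select_inside=True, genes are re-selected on
    each training fold; with False, they are selected once on ALL samples,
    which leaks the held-out sample's labels into the selection step."""
    genes_all = None if select_inside else select_top_k(X, y, k)
    correct = 0
    for i in range(len(X)):
        X_tr, y_tr = X[:i] + X[i + 1:], y[:i] + y[i + 1:]
        genes = select_top_k(X_tr, y_tr, k) if select_inside else genes_all
        correct += nearest_centroid_predict(X_tr, y_tr, X[i], genes) == y[i]
    return correct / len(X)


# Toy data (hypothetical): gene 0 separates the classes, genes 1 and 2 do not.
X = [
    [0.0, 5.0, 1.0],
    [0.2, 3.0, 1.1],
    [0.1, 4.0, 0.9],
    [2.0, 4.5, 1.0],
    [2.1, 3.5, 1.2],
    [1.9, 5.5, 0.8],
]
y = [0, 0, 0, 1, 1, 1]
print(select_top_k(X, y, 1), loocv_accuracy(X, y, 1))
```

On real microarray data the gap between the two `select_inside` settings tends to be the interesting part. When there are thousands of genes and a few dozen samples, selecting once on all samples can make even pure noise look predictive, whereas per-fold selection gives a more honest estimate.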