Notes on Qualification Certificate of Computer and Software Technology Proficiency - System Architect

Domain-driven design

Domain model

Utility of a model

3. The model is distilled knowledge.

2. The model is the backbone language.

1. The model dictates the form of design of the heart of the software.

A domain model is not a particular diagram; it is the idea that the diagram is intended to convey. It is not just the knowledge in a domain expert's head; it is a rigorously organized and selective abstraction of that knowledge.

Domain

The subject area to which the user applies the program

Book: Domain-Driven Design by Eric Evans

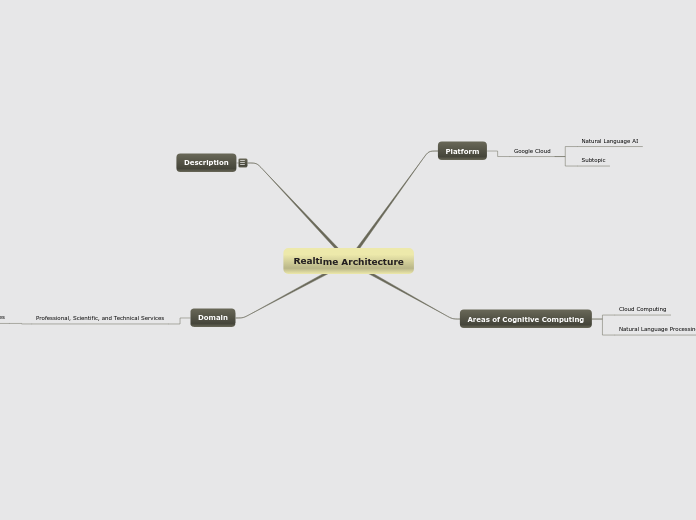

Architecture case-study

J2EE

Types of enterprise Java Beans

Message driven bean

Session bean

Entity bean

Model 2 architecture

Git

Git Objects

Kafka

Understand Kafka as if you had designed it

Redis

Redis设计与实现 (Redis Design and Implementation), 2nd edition

Domain Driven Design (DDD)

What is domain

It's an interpretation of reality that abstracts the aspects relevant to solving the problem at hand and ignores extraneous detail.

It's a simplification

It's the subject area to which the user applies the program or system.

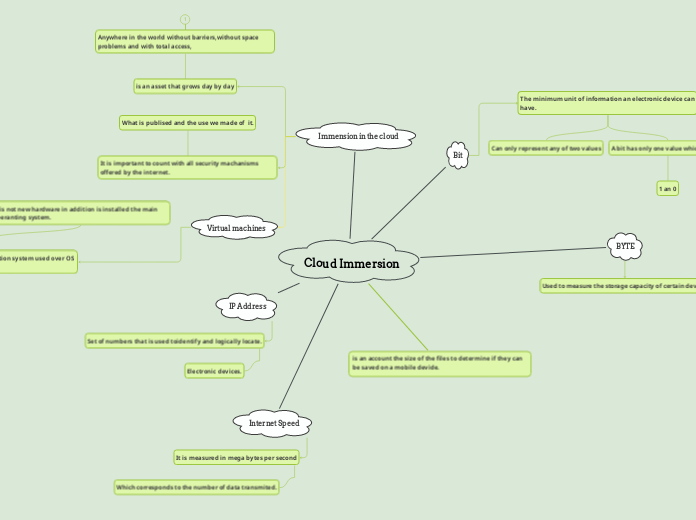

Cloud computing

Data coordination

etcd

Zookeeper

Time coordination

Order of events

Vector clock

GPS satellite

NTP

Load balancer

Increase the availability of services

Increase the amount of work that can be handled

Hierarchy of load balancer

Message distributing algorithm

Round-robin

Failure in the cloud

Long tail latency

Two techniques to handle long tail latency

Alternative requests

Hedged requests

"Launch instance" requests to AWS

Timeout tactic

Public cloud infrastructure

Gateways

Message gateway

Talks to hypervisors which manage VMs on physical computer

Management gateway

Splitting

Data center

Availability zone

Region

Cluster

Fail-over redundancy

VIP & VRRP

Load balancing

Layer-7 balancing

Layer-4 balancing

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software.

How to optimize a distributed system?

Distributed operating system

Each node holds a specific software subset

2. System management components

Coordinate the node's individual and collaborative activities

1. Microkernel

A system software over a collection of independent, networked, communicating, and physically separate computational nodes.

Proxy and load balancer

Forward proxy and reverse proxy

HTTP proxy

7-layer proxy

Great for microservice

Smart balancing

Look into the HTTP header. Also modify the IP header.

4-layer proxy

One TCP connection

Look into the IP header, modify the IP header (NAT)

It means using multiple (real or virtual) computers cooperatively, thereby producing faster performance and a more robust system than a single computer doing all the work.

Software development life-cycle (SDLC)

Domain specific software architecture (DSSA)

System architecture

This drawing depicts the DSSA concept. The component class specifications in the reference architecture are realized in multiple system architectures with existing and reengineered components from the DSSA library, generated components, and new components.

A system architecture is an instance of an architecture that meets the specifications in a reference architecture tailored to meet the requirement of a specific system.

Reference architecture

It defines the solution space.

A reference architecture is composed of component class specifications.

A component class specification is an element of the reference architecture that specifies what elements of the architecture do and what their interfaces are.

A reference architecture is a generic set of architecture component specifications for a domain (and at least one instance).

Reference requirements

It defines the problem space.

A reference requirement is a generic requirement for the domain.

Domain library

The domain library contains domain-specific software assets for reuse in the DSSA process.

DSSA process life cycle (James W. A.)

4. Operate and maintain applications

3. Build applications

2. Populate and maintain library

1. Develop domain-specific base

It's a software life cycle based on the development and use of domain-specific software architectures, components and tools. It is a process life cycle supported by a DSSA library and development environment.

Five stages of DSSA process (Will Tracz)

Architecture realization

5. Produce or gather reusable workproducts

It focuses on populating the domain-specific software architectures (high-level designs) with components that may be used to generate new applications in the problem domain.

Implementation/collection of reusable artifacts (e.g., code, documentation, etc.).

Architecture design and analysis

4. Develop domain models/architectures

Goal: come up with generic architectures and specify the syntax and semantics of the modules or components that form them.

Several domain-specific software architectures may have to be designed, within one application domain, to satisfy the previously identified requirements and constraints.

At each layer of the decomposition, the architecture, subsystem, or module can be modeled, analyzed, and treated as a parameterized (configurable) black box.

Top-down decomposition

Modules

Smaller subsystems

Subsystems

Architecture

Similar to high-level design -- emphasis is on defining module/model interfaces and semantics.

Domain analysis

3. Define/refine domain specific design and implementation constraints.

Identify and characterize the implementation constraints.

"What" requirements are Stable requirements; "How" requirements are Variable requirements.

Functional requirements are the "What" requirements, and constraints are the "How" requirements.

Similar to requirement analysis -- emphasis is on the solution-space.

2. Define/refine domain specific concepts and requirements

More details will be added to the block shown in Stage 1.2 -- define the domain.

Special emphasis on trying to "standardize" and "classify" the basic concepts in the domain.

With special emphasis on "identifying commonalities" and "isolating differences" between applications in the domain.

Goal: compile a dictionary and thesaurus of domain specific terminology.

Similar to requirement analysis -- emphasis is on the problem-space.

1. Define the scope of the domain analysis

Answer a set of questions

3. What general needs are satisfied by applications in the domain?

2. What is the short description of the application domain?

1. What is the name of the domain being modeled?

Output: a list of the needs that users of applications in this domain require to be met.

Focuses on determining what is in the domain of interest and to what ends this process is being applied.

Define what can be accomplished -- emphasis is on the user's needs

Concept

What makes DSSA distinct

Case-based reasoning and reverse engineering are not central mechanisms for identifying reusable resources; rather, existing applications are used as vehicles to validate the architectures that are derived, top-down, from generalized user requirements.

Separate problem-space analysis from solution-space analysis

The separation of functional requirements and implementation constraints.

The separation of user needs from system requirements and implementation constraints.

The domain specific software architecture, which we call a reference architecture, is specified by reference requirements, the product of a domain analysis. Application systems are constructed by tailoring the reference architecture to meet the specific system requirements and populating the architecture with components from the DSSA library. -- James W. A.

A domain specific software architecture is, in effect, a multiple-point solution to a set of application specific requirements (which define a problem domain). -- Will Tracz

Formal: A context for patterns of problem elements, solution elements, and situations that define mappings between them. -- Will Tracz

Structured systems analysis and design method (SSADM)

Three most important techniques

Entity event modeling

Data flow modeling

Logical data modeling

Builds on a set of methods

Tom DeMarco's structured analysis

Jackson Structured Programming

Yourdon Structured Method

Larry Constantine's structured design

Peter Checkland's soft systems methodology

SSADM is a waterfall method for the analysis and design of information systems. SSADM can be thought to represent a pinnacle of the rigorous document-led approach to system design, and contrasts with more contemporary agile methods such as DSDM or Scrum.

Joint application design (JAD)

Agile

James Martin RAD

RUP

Nine disciplines

Supporting

9. Environment

8. Project management

7. Configuration and change management

Technical

6. Deployment

5. Testing

4. Implementation

3. Analysis and design

2. Requirements

1. Business modeling

Four phases

4. Transition

The released software product

3. Construction

User manual

The software system itself that is ready to be transferred to the end users

2. Elaboration

A preliminary user manual (optional)

Prototypes that demonstrably mitigate each identified technical risk

A development plan for the overall project

Revised business case and risk list

An executable architecture that realizes the architecturally significant use cases.

Description of software development process

Description of software architecture

Use case model (80% completion)

1. Inception

Outputs

Project description

Key features

Constraints

The core project requirements

Initial risk assessment

Project plan

A basic use case model

Business case

Success factor

Market recognition

Expected revenue

Business context

Model

Iterative Incremental

Rapid Prototype

Spiral

V-Shape

Waterfall

Project optimization

Project resource leveling

PERT / CPM Resource Leveling - Ed Dansereau

Project crashing

Critical path method

Database

Distributed Database

Replication

Replication schema

Partial replication

Some fragments of the database may be replicated whereas others may not.

Fully-replicated

System can continue to operate as long as at least one site is up

No replication

Fragmentation and sharding

Allocation schema

Fragmentation schema

Fragmentation types

Mixed fragmentation

Properties

Autonomy

Execution autonomy

Communication Autonomy

Design autonomy

It determines the extent to which individual nodes or DBs in a connected DDB can operate independently.

Vertical scalability

Horizontal scalability

Transparency

Fragmentation transparency

Vertical fragmentation

Horizontal fragmentation

Replication transparency

Data organization transparency (distribution or network transparency)

Naming transparency

Location transparency

Most important advantages

Reliability

Possible absence of homogeneity among connected nodes

Logical interrelation of connected databases

Connected via computer network

Parallel vs Distributed

Heterogeneity

Multi-processor parallel

Shared-nothing

Shared disk

Shared memory

Database transaction, concurrent control and recovery mechanism

Recovery

ARIES big picture

Rollback

Cascading rollback

If a transaction T is rolled back, any transaction S that has, in the interim, read the value of some data item X written by T must also be rolled back.
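The cascading rule above can be sketched as a small fixed-point computation. This is only an illustration: the `reads_from` map (which transaction has read data written by which other transaction) and the function name `cascade` are assumptions made for the example, not part of any DBMS API.

```python
def cascade(aborted, reads_from):
    """Return every transaction that must be rolled back when `aborted` aborts.

    reads_from maps a transaction S to the set of transactions T whose
    uncommitted writes S has read (hypothetical bookkeeping for this sketch).
    """
    to_rollback = {aborted}
    changed = True
    while changed:                       # repeat until no new victim is found
        changed = False
        for reader, sources in reads_from.items():
            if reader not in to_rollback and sources & to_rollback:
                to_rollback.add(reader)  # reader saw data from a rolled-back txn
                changed = True
    return to_rollback
```

For instance, if T2 read from T1 and T3 read from T2, aborting T1 rolls back all three.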

After whatever failure happened and before the transaction commits

Two-phase commit protocol

Housekeeping transactions

Aborted transaction list since last checkpoint

Committed transactions list since last checkpoint

Active transaction list

Disk block cache (buffer)

An example WAL (write-ahead logging) protocol with UNDO/REDO log using Steal/No-force approach

2. The committing operation of a transaction cannot be completed until all the REDO-type log records for that transaction have been force-written to disk.

All of the transaction's log records must be written to disk before the commit can complete.

1. The BFIM of an item cannot be overwritten by its AFIM in the database on disk until all UNDO-type log records for the updating transaction up to this point have been force-written to disk.

This means the log record is always written to disk before the data page it describes.
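A minimal in-memory sketch of the two WAL rules under a Steal/No-force policy is shown below. The class `WalStore` and its dictionaries standing in for "disk" and "cache" are assumptions for illustration only; a real recovery manager works with pages, LSNs, and durable storage.

```python
class WalStore:
    """Toy store: dicts stand in for the disk, the page cache, and the log."""

    def __init__(self):
        self.disk = {}         # database items as they exist on "disk"
        self.cache = {}        # updated items not necessarily flushed yet
        self.log_buffer = []   # log records still in main memory
        self.log_disk = []     # log records that have been force-written

    def write(self, txn, item, new_value):
        old_value = self.cache.get(item, self.disk.get(item))  # BFIM
        # One record carries both the UNDO (old) and REDO (new) information.
        self.log_buffer.append(("write_item", txn, item, old_value, new_value))
        self.cache[item] = new_value

    def force_log(self):
        self.log_disk.extend(self.log_buffer)
        self.log_buffer.clear()

    def flush_page(self, item):
        # Steal: may run before commit. Rule 1: force the log first, so the
        # BFIM is recoverable before it is overwritten by the AFIM on disk.
        self.force_log()
        self.disk[item] = self.cache[item]

    def commit(self, txn):
        # No-force: pages stay in the cache. Rule 2: the log, including the
        # commit record, must reach disk before the commit completes.
        self.log_buffer.append(("commit", txn))
        self.force_log()
```

After `commit`, an item may still be missing from `disk`; its REDO information is already in `log_disk`, which is what makes No-force safe.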

Compare policies

Force/No-force

No-force: a committed transaction may still have updated pages in the cache that have not yet been written to disk.

Force: all pages updated by a transaction are immediately written to disk before the transaction commits

REDO never needed

Steal/no-steal

No-steal: a cache page updated by a transaction cannot be written to disk before the transaction commits

UNDO never needed

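Steal: a cache page updated by a transaction may be written to disk before that transaction commits. This happens when the buffer manager has to replace a page that was updated by a transaction that has not yet committed.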

Cache replace (flush)

Shadowing

Log is not necessary

In-place updating

Log entry types

UNDO-type log entry

Includes the old value (BFIM)

REDO-type log entry

Includes the new value (AFIM)

Write-Ahead logging

The BFIM must be recorded in log entry and flushed to disk before the BFIM is overwritten with the AFIM in the database on disk.

BFIM (before image) and AFIM (after image)

Blocks

Log file blocks

Index file blocks

Data file blocks

Pin/Unpin

Cache directory

Updating policy

The UNDO and REDO operations are required to be idempotent

Steal: Immediate update

Variation

UNDO/NO-REDO

All updates are required to be recorded in the database on disk before a transaction commits

UNDO/REDO

The database may be updated by some operations of a transaction before the transaction reaches its commit point.

No-steal: Deferred update

NO-UNDO/REDO

No physical update of the database on disk until after a transaction commits

Concurrency control

Deadlock prevention protocols

Transaction timestamp based protocols

Wound-wait

Wait-die

Not practical protocols

Lock in same order

Lock all as a whole in advance

Deadlock

Example

The wait-for graph

The schedule

Deadlock occurs when each transaction T in a set of two or more transactions is waiting for some item that is locked by some other transaction T'.
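Deadlock detection with a wait-for graph comes down to finding a cycle. The sketch below assumes the graph is given as a dictionary mapping each transaction to the set of transactions it is waiting for; that representation and the function name are choices made for this example.

```python
from typing import Dict, Set


def has_deadlock(wait_for: Dict[str, Set[str]]) -> bool:
    """Return True if the wait-for graph contains a cycle."""
    WHITE, GRAY, BLACK = 0, 1, 2   # unvisited, on the current DFS path, done
    color = {t: WHITE for t in wait_for}
    for targets in wait_for.values():
        for t in targets:
            color.setdefault(t, WHITE)

    def visit(t: str) -> bool:
        color[t] = GRAY
        for nxt in wait_for.get(t, set()):
            if color[nxt] == GRAY:              # back edge: circular wait
                return True
            if color[nxt] == WHITE and visit(nxt):
                return True
        color[t] = BLACK
        return False

    return any(color[t] == WHITE and visit(t) for t in list(color))
```

With T1 waiting for T2 and T2 waiting for T1, the function reports a deadlock; removing either edge clears it.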

Two phase lock protocol (2PL)

2PL can introduce deadlock

If every transaction in a schedule follows the two-phase lock protocol, the schedule is guaranteed to be serializable, obviating the need to test for serializability of schedules.

A transaction can be considered as being divided into two phases: expanding (acquisition) phase and shrinking (release) phase

All locking operations, including the upgrading, must precede the first unlock operation in a transaction.
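The two-phase rule can be checked mechanically for a single transaction. The sketch below assumes a transaction is given as a list of `(action, item)` pairs, with the action names `read_lock`, `write_lock`, `upgrade`, and `unlock` chosen for this example.

```python
def follows_2pl(ops):
    """ops: list of (action, item) pairs for ONE transaction."""
    lock_actions = {"read_lock", "write_lock", "upgrade"}
    shrinking = False
    for action, _item in ops:
        if action == "unlock":
            shrinking = True       # the shrinking (release) phase has begun
        elif action in lock_actions and shrinking:
            return False           # acquiring after a release violates 2PL
    return True
```

A transaction that unlocks Y before locking X fails the check; moving every lock ahead of the first unlock makes it pass.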

Nonserializable schedule

Caused by locks being released too early

When T1, T2 not serialized

When T1, T2 serialized

Shared/Exclusive lock (or read/write lock, multi-mode lock)

Lock conversion

Downgrading

Upgrading

unlock(X)

write_lock(X)

read_lock(X)

A waiting queue associated to each locked data item

Unlocked data items are not tracked, so the value of the LOCK variable in the table will be either read-locked or write-locked

Record in lock table:

The LOCK variable has three values: read-locked, write-locked, unlocked

Binary lock

Operations

unlock_item(X)

lock_item(X)

Data structure

Only needs to maintain records for items that are currently locked in the lock table. Hence the value of the lock variables in lock table will always be 1.

A waiting queue of a data item

Records in lock table:

A binary-valued variable LOCK, associated with each data item in the database

T reaches commit point

2. Effect of all the transaction operations on the database have been recorded in the log file

Any portion of the log that is in the log buffer but has not been written to the disk yet must now be written to the disk -- force-writing

1. All its operations that access the database have been executed successfully

Log

Log entries

[abort, T]

[commit, T]

[read_item, T, X]

[write_item, T, X, old_value, new_value]

[start_transaction, T]

Log buffers

Log entries are first added to the log buffer.

Hold the last part of the log entries in main memory

A log is a sequential, append-only file that is kept on disk

Database model

Database cache

Buffer replacement policy

LRU

Database operations

Write_item(X)

4. Store the updated disk block from the buffer back to disk (either immediately or at some later point in time)

3. Copy item X from the program variable named X into its correct location in the buffer

Read_item(X)

3. Copy item X from the buffer to the program variable named X

2. Copy the disk block into main memory buffer. Buffer size = block size

1. Find address of the disk block that contains the item X

Granularity: size of a data item

A collection of named data items

A data item has a name

Filename

Block address

Record Id

Examples of data item

A disk block

A file

A database record

Transaction

Desirable properties

Schedule properties based on serializability

Serializable

Equivalence

Equivalence = Conflict-equivalence: We define a schedule S to be serializable if it is conflict equivalent to some serial schedule S'.

Conflict-equivalence

Two schedules are said to be conflict equivalent if the relative order of any two conflicting operations is the same in both schedules.

For two schedules to be equivalent, the operations applied to each data item affected by the schedules should be applied to that item in both schedules in the same order.

We don't consider result equivalence: it is unstable because it depends on the particular initial values the schedules start from.

S1 and S2 are result equivalent if the initial value of X is 100

Saying that a nonserial schedule is serializable is equivalent to saying that it is correct, because it is equivalent to a serial schedule, which is considered correct.
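Conflict serializability is usually tested with a precedence graph: add an edge Ti -> Tj whenever an operation of Ti conflicts with a later operation of Tj, then check that the graph has no cycle. The sketch below assumes schedules are written in the `r1(X), w2(X)` shorthand used in these notes; the function names are illustrative.

```python
import re
from itertools import combinations


def parse(schedule: str):
    """'r1(X), w2(X), c1' -> [('r', 1, 'X'), ('w', 2, 'X')]; commits/aborts are skipped."""
    return [(op, int(txn), item)
            for op, txn, item in re.findall(r"([rw])(\d+)\((\w+)\)", schedule)]


def conflicts(a, b) -> bool:
    """Different transactions, same item, at least one write."""
    return a[1] != b[1] and a[2] == b[2] and "w" in (a[0], b[0])


def is_conflict_serializable(schedule: str) -> bool:
    ops = parse(schedule)
    # combinations() keeps schedule order, so `a` always precedes `b`.
    edges = {(a[1], b[1]) for a, b in combinations(ops, 2) if conflicts(a, b)}
    nodes = {t for edge in edges for t in edge}
    while nodes:
        # Peel off transactions with no incoming edge (topological sort).
        free = {n for n in nodes if not any(dst == n for _, dst in edges)}
        if not free:
            return False           # every remaining node is on a cycle
        nodes -= free
        edges = {(s, d) for s, d in edges if s not in free}
    return True
```

Applied to the schedules from these notes, Sa (`r1(X), r2(X), w1(X), ...`) yields edges in both directions between T1 and T2, so it is not conflict serializable, while Sc is.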

Conflict-serializable schedule

Nonserial schedule

Serial schedule

Schedule properties based on recoverability

Nonrecoverable and recoverable schedules

Ex. nonrecoverable

Sb: r1(X), w1(X), r2(X), r1(Y), w2(X), c2, a1

When T1 aborts, T2, which has already committed, would have to be rolled back because the value of X that T2 read is no longer valid.

Ex. recoverable

Se: r1(X), w1(X), r2(X), r1(Y), w2(X), a1, a2

This is a cascading rollback because T2 has to be rolled back since it reads data from T1 and T1 aborted.

This is another fix of Sb: abort T1 and then T2. Note that T2 cannot commit in this case.

Sc: r1(X), w1(X), r2(X), r1(Y), w2(X), c1, c2

This is a fix of Sb: commit T1 before committing T2

Sa: r1(X), r2(X), w1(X), r1(Y), w2(X), c2, w1(Y), c1

Recoverable

Condition

No transaction T in S commits until all transactions T' that have written some item X that T reads have committed.

Nonrecoverable

A committed transaction may have to be rolled back during recovery
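The recoverability condition can be checked by scanning a schedule once. This is a simplified sketch: it assumes the `r1(X)`, `w1(X)`, `c1`, `a1` shorthand used above, and it does not undo the writes of a transaction that aborts before a later read, which is enough for the four example schedules.

```python
import re


def is_recoverable(schedule: str) -> bool:
    """True if no transaction commits before every transaction it read from."""
    last_writer = {}    # item -> transaction that wrote it most recently
    reads_from = {}     # T -> set of transactions whose writes T has read
    committed = set()
    pattern = r"([rwca])(\d+)(?:\((\w+)\))?"
    for op, txn, item in re.findall(pattern, schedule):
        if op == "w":
            last_writer[item] = txn
        elif op == "r":
            writer = last_writer.get(item)
            if writer is not None and writer != txn:
                reads_from.setdefault(txn, set()).add(writer)
        elif op == "c":
            if any(w not in committed for w in reads_from.get(txn, ())):
                return False        # txn read from an uncommitted writer
            committed.add(txn)
    return True
```

On the schedules in this section it accepts Sa, Sc, and Se and rejects Sb, where T2 commits after reading X from the still-uncommitted T1.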

ACID

Durability or permanency

Isolation

Consistency preservation

Atomicity

Transaction schedule (or history)

Complete schedule

Committed projection C(S)

3. For any two conflicting operations, one of the two must occur before the other in the schedule -- partially ordered

2. For any pair of operations from the same transaction Ti, their relative order of appearance in S is the same as the order of appearance in Ti.

1. Operations in S are exactly those in T1, T2, ..., Tn including a commit or abort operation as the last operation for each transaction in the schedule.

Conflicting operations

Write-write conflict

Read-write conflict

Changing the order of conflicting operations in a schedule can result in a different outcome.

Conditions

3. At least one of the operations is write_item(X)

2. They access the same item X

1. They belong to different transactions

A schedule S of n transactions T1, T2, ..., Tn is the ordering of the operations of the transactions

Ex2

Ex1

Transaction operations

abort: a

commit: c

end: e

write_item: w

read_item: r

Start: s

State transition diagram of transaction

Either Committed or Aborted

Do not allow some operations of a transaction T to be applied to the database while other operations of T are not.

B

Read-write transaction

Read-only transaction

A

Interactively

Embedded

A transaction is an executing program that forms a logical unit of processing

Retrieval

Modification (update)

Deletion

Insertion

Relational Database

SQL

Outer join

Full outer join

Right outer join

Left outer join

Inner join

Normal forms

Normal forms cheat sheet

Relational decompositions

Algorithm: Synthesis into 3NF

Lossless (Nonadditive) join property

Testing algorithm for binary decomposition (NJB property test)

General testing algorithm

The word loss in lossless refers to loss of information, not to loss of tuples. If a decomposition does not have the lossless join property, we may get additional spurious tuples after the PROJECT (π) and NATURAL JOIN (*) operations are applied; these additional tuples represent erroneous or invalid information.
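For a binary decomposition {R1, R2}, the NJB test says the join is lossless if (R1 ∩ R2) -> (R1 - R2) or (R1 ∩ R2) -> (R2 - R1) is in F+. The sketch below checks this with an attribute closure; representing each dependency as a pair of Python sets is an assumption of the example.

```python
def closure(attrs, fds):
    """Closure of `attrs` under `fds`, a list of (lhs_set, rhs_set) pairs."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return result


def is_lossless_binary(r1, r2, fds) -> bool:
    """NJB test for a decomposition of R into exactly two schemas r1 and r2."""
    r1, r2 = set(r1), set(r2)
    reachable = closure(r1 & r2, fds)   # everything the common attributes determine
    return (r1 - r2) <= reachable or (r2 - r1) <= reachable
```

With R(A, B, C) and F = {A -> B}, splitting into (A, B) and (A, C) passes the test, while splitting into (A, B) and (B, C) does not.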

Dependency preservation

It is always possible to find a dependency-preserving decomposition D with respect to F such that each relation Ri in D is in 3NF.

Lost dependency

Ex

If a decomposition is not dependency-preserving, some dependency is lost in the decomposition. To check that a lost dependency holds, we must take the JOIN of two or more relations in the decomposition to get a relation that includes all left- and right-hand-side attributes of the lost dependency, and then check that the dependency holds on the result of the JOIN—an option that is not practical.

Each functional dependency X -> Y specified in F of R either appears directly in one of the relation schemas Ri in the decomposition D or can be inferred from the dependencies that appear in some Ri.

Attribute preservation

Decompose a universal relation schema R = {A1, A2, ..., An} into a set of relation schemas D = {R1, R2, ..., Rm}

Functional dependency (FD)

Minimal cover of a set of dependencies E

Algorithm: finding a minimal cover F from a set of dependencies E

Definition: If F is a minimal cover of E, then

3. We cannot remove any dependency from F and still have a set of dependencies that is equivalent to F

2. We cannot replace any dependency X -> A in F with a dependency Y -> A, where Y is a proper subset of X, and still have a set of dependencies that is equivalent to F.

No extraneous attribute

1. Every dependency in F has a single attribute on the right-hand side.
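The three conditions of the definition translate into a three-step procedure: split right-hand sides, drop extraneous left-hand-side attributes, then drop redundant dependencies. The sketch below is one way to write it, assuming single-character attribute names so that a dependency can be given as a pair of strings such as `("AB", "D")`; a minimal cover is not unique, so other valid answers may exist.

```python
def closure(attrs, fds):
    """Closure of `attrs` under `fds`, a list of (lhs, rhs) frozenset pairs."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return result


def minimal_cover(fds):
    # Condition 1: a single attribute on every right-hand side.
    cover = [(frozenset(lhs), frozenset([a])) for lhs, rhs in fds for a in sorted(rhs)]
    # Condition 2: remove extraneous attributes from left-hand sides.
    for i, (lhs, rhs) in enumerate(cover):
        for b in sorted(lhs):
            reduced = cover[i][0] - {b}
            if reduced and rhs <= closure(reduced, cover):
                cover[i] = (reduced, rhs)
    # Condition 3: remove dependencies implied by the remaining ones.
    result = list(dict.fromkeys(cover))          # drop exact duplicates
    for fd in list(result):
        rest = [g for g in result if g != fd]
        if fd[1] <= closure(fd[0], rest):
            result = rest
    return result
```

For E = {B -> A, D -> A, AB -> D}, the attribute A is extraneous in AB -> D, and B -> A then follows from B -> D and D -> A, leaving {D -> A, B -> D}.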

Keys

Primary key

A choice of (or a designated) candidate key; any other candidate key is an alternate key

Candidate key

A candidate key (or minimal superkey) is a superkey that cannot be reduced to a simpler superkey by removing an attribute.

Superkey

A set of attributes that uniquely identifies each tuple of a relation.

Because superkey values are unique, tuples with the same superkey values must have the same non-key attribute values. That is, non-key attributes are functionally dependent on the superkey.

The set of all attributes is always a superkey (the trivial superkey), because tuples in a relation are by definition unique.

Inference rules

IR6: pseudotransitive rule

{X -> Y, WY -> Z} |= WX -> Z

IR5: Union or additive rule

{X -> Y, X -> Z} |= X -> YZ

IR4: decomposition or projective rule

{X -> YZ} |= X -> Y

IR3: transitive rule

{X -> Y, Y -> Z} |= X -> Z

IR2: augmentation rule

{X -> Y} |= XZ -> YZ

IR1: Reflexive rule (trivial)

If X ⊇ Y, then X -> Y

A functional dependency, denoted by X → Y, between two sets of attributes X and Y that are subsets of R specifies a constraint on the possible tuples that can form a relation state r of R. That constraint is that, for any two tuples t1 and t2 in r that have t1[X] = t2[X], they must also have t1[Y] = t2[Y].
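The definition can be tested directly against one relation state. In the sketch below a relation state is a list of dictionaries keyed by attribute name, which is an assumption of the example. Note that a passing check only shows the dependency is not violated by this particular state; it does not prove the dependency holds for the schema.

```python
def fd_holds(rows, lhs, rhs) -> bool:
    """Check X -> Y on one relation state: equal X values force equal Y values."""
    seen = {}
    for row in rows:
        x = tuple(row[a] for a in lhs)
        y = tuple(row[a] for a in rhs)
        if seen.setdefault(x, y) != y:   # same t[X] seen earlier with a different t[Y]
            return False
    return True
```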

Universal schema notation: R = {A1, A2, ... , An}

Relational calculus

Domain calculus

Tuple calculus

Ex.7

Ex.6

Ex.5

Ex.4

Ex.3

Ex.2

Transforming the Universal and Existential Quantifiers

NOT (∃x)(P(x)) ⇒ NOT (∀x)(P(x))

(∀x)(P(x)) ⇒ (∃x)(P(x))

(∃x) (P(x) AND Q(x)) ≡ NOT (∀x) (NOT (P(x)) OR NOT (Q(x)))

(∃x) (P(x) OR Q(x)) ≡ NOT (∀x) (NOT (P(x)) AND NOT (Q(x)))

(∀x) (P(x) OR Q(x)) ≡ NOT (∃x) (NOT (P(x)) AND NOT (Q(x)))

(∀x) (P(x) AND Q(x)) ≡ NOT (∃x) (NOT (P(x)) OR NOT (Q(x)))

(∃x) (P(x)) ≡ NOT (∀x) (NOT (P(x)))

(∀x) (P(x)) ≡ NOT (∃x) (NOT (P(x)))
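These transformations can be sanity-checked by brute force on a small finite domain, with `all` playing the role of ∀ and `any` the role of ∃. This is a check over every pair of predicates on a three-element domain, not a proof; the function name is made up for the example. The implication from ∀ to ∃ relies on the domain being non-empty.

```python
from itertools import product


def check_quantifier_identities(size: int = 3) -> bool:
    """Try every pair of predicates P, Q (as truth tables) on a small domain."""
    domain = range(size)
    tables = list(product([False, True], repeat=size))

    def forall(f):
        return all(f(x) for x in domain)

    def exists(f):
        return any(f(x) for x in domain)

    for p, q in product(tables, repeat=2):
        checks = [
            forall(lambda x: p[x]) == (not exists(lambda x: not p[x])),
            exists(lambda x: p[x]) == (not forall(lambda x: not p[x])),
            forall(lambda x: p[x] and q[x]) == (not exists(lambda x: not p[x] or not q[x])),
            forall(lambda x: p[x] or q[x]) == (not exists(lambda x: not p[x] and not q[x])),
            exists(lambda x: p[x] or q[x]) == (not forall(lambda x: not p[x] and not q[x])),
            exists(lambda x: p[x] and q[x]) == (not forall(lambda x: not p[x] or not q[x])),
            (not forall(lambda x: p[x])) or exists(lambda x: p[x]),  # ∀ implies ∃
        ]
        if not all(checks):
            return False
    return True
```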

Universal quantifier: (∀t)(F)

If F is a formula, then so is (∀t)(F), where t is a tuple variable. The formula (∀t)(F) is TRUE if the formula F evaluates to TRUE for every tuple (in the universe) assigned to free occurrences of t in F; otherwise, (∀t)(F) is FALSE.

Existential quantifier: (∃t)(F)

If F is a formula, then so is (∃t)(F), where t is a tuple variable. The formula (∃t)(F) is TRUE if the formula F evaluates to TRUE for some (at least one) tuple assigned to free occurrences of t in F; otherwise, (∃t)(F) is FALSE.

Range relation R of tuple variable t: R(t). Ex. EMPLOYEE(e)

Relational algebra

Join

Natural join

Natural join (⋈) is a binary operator that is written as (R ⋈ S) where R and S are relations. The result of the natural join is the set of all combinations of tuples in R and S that are equal on their common attribute names.

If the two relations have more than one attribute in common, then the natural join selects only the rows where all pairs of matching attributes match.

If the two relations have no attributes in common, then their natural join is simply their Cartesian product.
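A short sketch of the natural join, assuming each relation is a non-empty list of dictionaries that all share the same attribute names. The Cartesian-product case falls out naturally, because with no common attributes the matching condition is vacuously true.

```python
def natural_join(r, s):
    """Join two relations (lists of dicts) on all attribute names they share."""
    if not r or not s:
        return []
    common = set(r[0]) & set(s[0])
    return [
        {**t, **u}                       # merged tuple; shared attributes agree
        for t in r
        for u in s
        if all(t[a] == u[a] for a in common)
    ]
```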

Operations from Set theory

Cartesian product (or cross product)

Set difference (or Minus)

Intersection

Union

Project

Select

Commutative

RTOS

Task scheduler

Rate monotonic scheduling

Optimal

If a set of processes cannot be scheduled by RM scheduling, it cannot be scheduled by any other static priority based algorithm.

For a harmonic task set, the utilization bound can be relaxed up to 1.0

lim (n → ∞) n(2^(1/n) - 1) = ln 2 ≈ 0.693147...

Example of impossible schedule: above the least upper bound

Example of possible schedule: below the least upper bound

Least upper bound
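The least upper bound for n tasks under rate monotonic scheduling is U(n) = n(2^(1/n) - 1). The sketch below applies it as a sufficient test, assuming each task is given as an `(execution_time, period)` pair with the deadline equal to the period. A task set that exceeds the bound is not necessarily unschedulable; it just is not guaranteed by this test.

```python
def rm_bound(n: int) -> float:
    """Least upper bound on total utilization for n tasks under RM scheduling."""
    return n * (2 ** (1 / n) - 1)


def rm_guaranteed(tasks) -> bool:
    """tasks: list of (execution_time, period). Sufficient, not necessary, test."""
    utilization = sum(c / t for c, t in tasks)
    return utilization <= rm_bound(len(tasks))
```

For two tasks the bound is about 0.828, so `[(1, 4), (2, 6)]` (utilization about 0.583) is guaranteed, while `[(3, 4), (1, 6)]` (about 0.917) is above the bound and needs a more exact analysis.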

Two types

Dynamic priority

Fixed priority

A more frequent task (with a shorter period) always has a higher priority than a less frequent task (with a longer period).

It's preemptive

Priority based preemptive scheduling

Round-robin (nonpreemptive)

Preemptive time slicing

Video course

Cyber threats

21 Top Cyber security Threats

Auth0

Secure electronic transaction (SET) protocol

Kerberos

Taming Kerberos

KDC

PKI

CA & RA

Computer Science Fundamentals

Disk scheduling

FCFS

SSTF

Multi-core processors

BMP

SMP

AMP

File Allocation

Index

Linked

Contiguous

Computer architecture

Memory management

Set associative caches

Directly mapped caches

Fully associative cache and replacement algorithm

Coding theory

Stream coding

CRC

CRC-32 Table Lookup Algorithm

Other examples

Example: polynomial = x^3 + x + 1 (n = 3), message = 11010011101100

Verification

CRC is a linear function, where + is bitwise XOR: CRC(x + y + z) = CRC(x) + CRC(y) + CRC(z)

Parity bit: generator = x + 1, CRC-1

Polynomial long division

4. Remainder as result

3. Quotient discarded

2. Message as dividend

1. Generator polynomial as divisor

CRC-n

The generator polynomial has degree n, so it has n + 1 coefficients
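The long-division steps can be written as a short bitwise routine. The sketch below works on bit strings for readability (real implementations use integers or lookup tables) and uses the example from this section: generator x^3 + x + 1, written as `1011`, and message `11010011101100`.

```python
def _divide(bits, gen, steps):
    """XOR the generator into `bits` wherever the leading bit is 1 (GF(2) division)."""
    for i in range(steps):
        if bits[i]:
            for j, g in enumerate(gen):
                bits[i + j] ^= g
    return bits


def crc_remainder(message: str, generator: str) -> str:
    """Append n zero bits, divide by the generator, return the n-bit remainder."""
    n = len(generator) - 1
    bits = [int(b) for b in message] + [0] * n
    _divide(bits, [int(b) for b in generator], len(message))
    return "".join(map(str, bits[-n:]))


def crc_check(message: str, crc: str, generator: str) -> bool:
    """Verification: message followed by its CRC must divide with zero remainder."""
    n = len(generator) - 1
    bits = [int(b) for b in message + crc]
    _divide(bits, [int(b) for b in generator], len(message))
    return not any(bits[-n:])
```

For the example message the remainder is `100`, and `crc_check` accepts the message with that CRC appended. With the generator `11` (x + 1) the same routine produces a single even-parity bit.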

Block coding

Hamming code (SEC)

Minimal distance is 3

Can detect up to two-bit errors or correct one-bit errors, but not both at the same time

Some two-bit errors produce the same syndrome as a one-bit error

Hamming code with additional parity (SECDED)

Can tell difference between one-bit error and two-bit error

Minimal distance is 3 + 1 = 4

This means that if you flip one data bit, you must also flip at least two parity bits to get another valid codeword.

Algorithm

Any given bit (data or parity) is included in a unique set of parity bits. So if there are r parity bits, bit positions 1 through 2^r - 1 can be covered. After discounting the r parity bits, 2^r - r - 1 bits remain for use as data.

Visual inspection

Notation: (n, k), where n is the block length, k is the message length, and n = 2^r - 1, where r is the number of parity bits

Example: Hamming(7,4) (with r=3)
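A sketch of Hamming(7,4) using the usual position numbering: parity bits sit at positions 1, 2, and 4, data bits at 3, 5, 6, and 7, and parity bit p covers every position whose binary index has bit p set. The function names are illustrative.

```python
def hamming74_encode(data):
    """data: list of 4 bits -> 7-bit codeword for positions 1..7."""
    code = [0] * 8                       # index 0 unused so indices match positions
    code[3], code[5], code[6], code[7] = data
    for p in (1, 2, 4):
        # Even parity over the positions covered by p, excluding p itself.
        code[p] = sum(code[i] for i in range(1, 8) if i & p and i != p) % 2
    return code[1:]


def hamming74_decode(word):
    """Correct at most one flipped bit, then return the 4 data bits."""
    code = [0] + list(word)
    syndrome = 0
    for p in (1, 2, 4):
        if sum(code[i] for i in range(1, 8) if i & p) % 2:
            syndrome += p                # failing checks add up to the error position
    if syndrome:
        code[syndrome] ^= 1
    return [code[3], code[5], code[6], code[7]]
```

Encoding the data bits 1011 gives the codeword 0110011, and flipping any single bit of it still decodes to 1011.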

Relative distance: d/n

Minimum distance d

Rate

R = k/n, where k is message length, n is block length

Hamming Distance

In information theory, the Hamming distance between two strings of equal length is the number of positions at which the corresponding symbols are different. In other words, it measures the minimum number of substitutions required to change one string into the other, or the minimum number of errors that could have transformed one string into the other.
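As a quick illustration, the distance is just a count of mismatching positions:

```python
def hamming_distance(a: str, b: str) -> int:
    """Number of positions at which two equal-length strings differ."""
    if len(a) != len(b):
        raise ValueError("strings must have equal length")
    return sum(x != y for x, y in zip(a, b))
```

For example, `1011101` and `1001001` differ in two positions.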

Business system planning (BSP)

Criticism

Conclusion

Final step

Proposals

Verifying study impact

Establishing IS-development priorities

A number of criteria (costs and development time, for example) establish the best sequence of system implementation. High-priority subsystems may be analyzed more deeply. This information is given to the sponsor, who determines which information subsystems will be developed.

Define information architecture

To define an organization's information architecture, it is necessary to connect the information subsystems using matrix processes and data classes to find appropriate subsystems. The organization then reorders processes according to the product (or service) life cycle.

Analysis

Management discussion

Information support

Data class

There are usually about 30–60 data classes, depending on the size of the organization. Future IS will use databases based on these classes

Ex. data classes

Supplier

Invoice

Employee

Customer

Corporation

Process

There are about 40-60 business processes in an organization (depending on its size), and it is important to choose the most profitable ones and the department responsible for a particular process.

Ex. processes

Contract creation

Invoicing

Car rental

Transfer

Preparation

Enterprise Application Integration (EAI)

General design topics

Coupling and cohesion

Types of cohesion (listed here from best to worst)

Perfect cohesion (atomic)

Cannot be reduced anymore

Functional cohesion (best)

Parts of a module are grouped because they contribute to a well-defined task of the module.

Sequential cohesion

Grouped together because the output of one part is input of another part.

Communicational/informational cohesion

Grouped because they operate on the same data

Procedural cohesion

Grouped because they always follow a certain sequence of execution.

Temporal cohesion

Grouped by when they are processed.

Logical cohesion

Ex.2: All M, V, C routines are in separated folders in a MVC pattern.

Ex.1: all mouse and keyboard inputs handling are grouped together

Parts of a module are grouped together because they are logically categorized as doing the same thing, even though they are different by nature.

Coincidental cohesion

Ex: a utilities class

Parts of a module are grouped together for no particular reason

Decreasing coupling

Functional design

Each module has only one responsibility and performs that responsibility with the minimum of side effects on other parts.

Types of coupling

Object-oriented programming

Coupling increases between two classes A and B if:

A is a subclass of (or implements) class B

A has a method that references B (via return type or parameter)

A calls on services of an object B

A has an attribute that refers to (is type of) B

Temporal coupling

Subclass coupling

Procedural programming (listed here from high to low)

Content coupling

Ex: an 'arc' modifies a 'point' instance inside a 'line' instance directly, bypassing the line's interface or contract.

Common coupling

Several modules have access to the same global data.

External coupling

Two modules share an externally imposed data format, communication protocol, or device interface

Control coupling

Ex: passing a what-to-do flag

Stamp coupling (data structure coupling)

Ex: passing a whole record to a function which needs only one field of it.

Modules share a composite data structure and use only parts of it.

Data coupling

Math

Two-person zero-sum game theory

Transportation problem

MODI method

Stepping stone method

Linear programming

Integer linear programming

Ex.1

Simplex method

Noncanonical

Ex.2 Minimize -- converted to the dual problem

Ex.1 Minimize, equality constraints

Canonical

Ex.5 Maximize 3x2

Ex.4 Maximize 2x2

Ex.3, Maximize 2x2

Ex.1,2: Minimize/maximize 2x3

Networking

LAN

VLAN

Broadcast domain

Collision domain

Network storage

IP SAN

FC SAN

NAS

SAN

DAS

Recursive query vs iterative query

DNS message

IPv4

DiffServ

Classful network

IPv6

IPv6 address configuration command line

SLAAC: Stateless Address Autoconfiguration

RFC 4861 Neighbor Discovery - SLAAC - ICMPv6

IPv6 Address

Cheat sheet

Multicast

Solicited-node

ff02:0:0:0:0:1:ff00::/104

Transient

ff10::/12

Well known

ff00::/12

Unicast

Unique local

fc00::/7

Loopback

::1/128

Global unicast

Link-local address

RFC7217 address

fe80::/64 + EUI-64

fe80::/10
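A small sketch of the "fe80::/64 + EUI-64" construction, assuming a 48-bit MAC address written as colon-separated hex. The function name `eui64_link_local` is hypothetical; only the standard `ipaddress` module is used.

```python
import ipaddress


def eui64_link_local(mac: str) -> ipaddress.IPv6Address:
    """Build a link-local address from a MAC using the modified EUI-64 format."""
    octets = bytearray(int(part, 16) for part in mac.split(":"))
    octets[0] ^= 0x02  # flip the universal/local bit of the first octet
    # Insert ff:fe between the two halves of the MAC to get a 64-bit interface ID.
    interface_id = bytes(octets[:3]) + b"\xff\xfe" + bytes(octets[3:])
    prefix = bytes.fromhex("fe80") + bytes(6)  # fe80::/64 as 8 bytes
    return ipaddress.IPv6Address(prefix + interface_id)
```

For the MAC `00:11:22:33:44:55` this yields `fe80::211:22ff:fe33:4455`, which falls inside `fe80::/10`.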

IPv6 header

Structured cabling

The six subsystems

Three-tier hierarchical internetworking model

Wiki

Core layer

High-speed forwarding services for different regions of the network

Distribution layer

Also called smart layer, workgroup layer. Routing, filtering, QoS

Access layer

Access layer devices are usually commodity switching platforms. May or may not provide layer 3 switching.

TODOs

Readings

Domain Driven Design for Services Architecture

Popular Java-based architectures

Popular Web framework/architecture

Object-Oriented Design

Software design pattern

Behavioral patterns

Proxy (classified as a structural pattern in the GoF catalog)

Provide a surrogate or placeholder for another object to control access to it.

Strategy

2. A context forwards requests from its clients to its strategy. Clients usually create and pass a ConcreteStrategy object to the context; thereafter, clients interact with the context exclusively. There is often a family of ConcreteStrategy classes for a client to choose from.

1. Strategy and Context interact to implement the chosen algorithm. A context may pass all data required by the algorithm to the strategy when the algorithm is called. Alternatively, the context can pass itself as an argument to Strategy operations. That lets the strategy call back on the context as required.

Define a family of algorithms, encapsulate each one, and make them interchangeable. Strategy lets the algorithm vary independently from clients that use it.
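The collaboration described above can be sketched in Python as follows. The sorting strategies and the `Context.run` method are hypothetical names chosen for illustration, not part of the pattern's definition.

```python
from abc import ABC, abstractmethod
from typing import List


class SortStrategy(ABC):
    """Strategy: the common interface for the family of algorithms."""

    @abstractmethod
    def sort(self, data: List[int]) -> List[int]:
        ...


class AscendingSort(SortStrategy):
    def sort(self, data: List[int]) -> List[int]:
        return sorted(data)


class DescendingSort(SortStrategy):
    def sort(self, data: List[int]) -> List[int]:
        return sorted(data, reverse=True)


class Context:
    """Context: forwards client requests to whichever strategy it was given."""

    def __init__(self, strategy: SortStrategy) -> None:
        self._strategy = strategy

    def set_strategy(self, strategy: SortStrategy) -> None:
        self._strategy = strategy

    def run(self, data: List[int]) -> List[int]:
        # The context passes all required data to the strategy.
        return self._strategy.sort(data)
```

The client picks a ConcreteStrategy once, then talks only to the context; swapping the algorithm does not change client code.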

Interpreter

Part 5

Part 4

3. The interpret operations at each node use the Context to store and access the state of the interpreter.

2. Each NonterminalExpression node defines interpret() in terms of interpret on each subexpression. The interpret operation of each TerminalExpression defines the base case in the recursion.

1. The client builds (or is given) the sentence as an abstract syntax tree of NonterminalExpression and TerminalExpression instances. Then the client initializes the Context and invokes the interpret operation.

Abstract syntax tree as an instance of the above class diagram

The regular expression language

Given a language, define a representation for its grammar along with an interpreter that uses the representation to interpret sentences in the language.
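A minimal sketch of the three collaboration steps above, using a toy addition language instead of regular expressions. The class names `Number`, `Variable`, and `Add` are hypothetical; the Context is assumed to be a plain dictionary of variable values.

```python
from abc import ABC, abstractmethod
from typing import Dict

Context = Dict[str, int]  # assumed here: variable name -> value


class Expression(ABC):
    @abstractmethod
    def interpret(self, context: Context) -> int:
        ...


class Number(Expression):
    """TerminalExpression: base case of the recursion."""

    def __init__(self, value: int) -> None:
        self.value = value

    def interpret(self, context: Context) -> int:
        return self.value


class Variable(Expression):
    """TerminalExpression: looks its value up in the Context."""

    def __init__(self, name: str) -> None:
        self.name = name

    def interpret(self, context: Context) -> int:
        return context[self.name]


class Add(Expression):
    """NonterminalExpression: defined in terms of its subexpressions."""

    def __init__(self, left: Expression, right: Expression) -> None:
        self.left = left
        self.right = right

    def interpret(self, context: Context) -> int:
        return self.left.interpret(context) + self.right.interpret(context)
```

The client builds the tree, for example `Add(Variable("x"), Number(2))`, initializes the context, and calls `interpret` on the root.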

Chain of Responsibility

Avoid coupling the sender of a request to its receiver by giving more than one object a chance to handle the request. Chain the receiving objects and pass the request along the chain until an object handles it.
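A minimal sketch of such a chain, assuming a hypothetical expense-approval scenario in which each handler has an approval limit. The `Approver` class is illustrative only.

```python
from typing import Optional


class Approver:
    """Handler: approves amounts up to its limit, otherwise passes the request on."""

    def __init__(self, name: str, limit: int,
                 successor: Optional["Approver"] = None) -> None:
        self.name = name
        self.limit = limit
        self.successor = successor

    def handle(self, amount: int) -> str:
        if amount <= self.limit:
            return f"{self.name} approved {amount}"
        if self.successor is not None:
            # Not our responsibility: forward along the chain.
            return self.successor.handle(amount)
        return f"nobody could approve {amount}"
```

The sender only knows the head of the chain; which object finally handles the request is decided at run time.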

Structural patterns

Decorator

Decorator forwards requests to its Component. It may optionally perform additional operations before and/or after forwarding the request.

Attach additional responsibilities to an object dynamically. Decorators provide a flexible alternative to subclassing for extending functionality.
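A small sketch of the forwarding behavior, assuming a hypothetical text-rendering component. `Text`, `Bold`, and `Italic` are illustrative names.

```python
class Text:
    """ConcreteComponent: the object being decorated."""

    def __init__(self, content: str) -> None:
        self._content = content

    def render(self) -> str:
        return self._content


class Bold:
    """Decorator: forwards to its component, then adds bold markup."""

    def __init__(self, component) -> None:
        self._component = component

    def render(self) -> str:
        return "<b>" + self._component.render() + "</b>"


class Italic:
    """Decorator: forwards to its component, then adds italic markup."""

    def __init__(self, component) -> None:
        self._component = component

    def render(self) -> str:
        return "<i>" + self._component.render() + "</i>"
```

Decorators share the component's interface, so they can be stacked in any order at run time without defining a subclass per combination.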

Composite

part 1

Clients use the Composite class interface to interact with objects in the composite structure. If the recipient is a Leaf, then the request is handled directly. If the recipient is a Composite, then it usually forwards requests to its child components, possibly performing additional operations before and/or after forwarding.

Compose objects into tree structures to represent part-whole hierarchies. Composite lets clients treat individual objects and compositions of objects uniformly.
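A minimal sketch of the Leaf/Composite behavior, assuming a hypothetical file-system example in which the request is "report your size".

```python
from abc import ABC, abstractmethod
from typing import List


class Node(ABC):
    """Component: the interface shared by leaves and composites."""

    @abstractmethod
    def size(self) -> int:
        ...


class File(Node):
    """Leaf: handles the request directly."""

    def __init__(self, size: int) -> None:
        self._size = size

    def size(self) -> int:
        return self._size


class Directory(Node):
    """Composite: forwards the request to its children and sums the results."""

    def __init__(self) -> None:
        self._children: List[Node] = []

    def add(self, child: Node) -> "Directory":
        self._children.append(child)
        return self  # returned to allow chained construction

    def size(self) -> int:
        return sum(child.size() for child in self._children)
```

The client calls `size()` without checking whether it holds a single file or a whole tree.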

Bridge

Abstraction forwards client requests to its implementor object.

Decouple an abstraction from its implementation, so the two can vary independently.

Adapter

Clients call operations on an Adapter instance. In turn, the adapter calls Adaptee operations that carry out the request.

Convert the interface of a class into another interface clients expect. Adapter lets classes work together that couldn't otherwise because of incompatible interfaces.
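A small object-adapter sketch. The temperature sensor classes are hypothetical and stand in for any adaptee whose interface differs from what the client expects.

```python
class FahrenheitSensor:
    """Adaptee: an existing class with an incompatible interface."""

    def read_fahrenheit(self) -> float:
        return 212.0  # fixed reading, for illustration only


class CelsiusSensorAdapter:
    """Adapter: exposes the read_celsius() interface the client expects."""

    def __init__(self, adaptee: FahrenheitSensor) -> None:
        self._adaptee = adaptee

    def read_celsius(self) -> float:
        # Translate the client's request into a call on the adaptee.
        return (self._adaptee.read_fahrenheit() - 32.0) * 5.0 / 9.0
```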

Creational patterns

Singleton

Clients access a Singleton instance solely through Singleton's Instance() operation.

Ensure a class has only one instance and provide a global point of access to it.

Prototype

Part 3

A client asks a prototype to clone itself.

Specify the kinds of objects to create using a prototypical instance, and create new objects by copying this prototype.

Factory method

Part 2

Part 1

Define an interface for creating an object, but let subclasses decide which class to instantiate. Factory method lets a class defer instantiation to subclasses.
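A minimal sketch of deferring instantiation to subclasses, assuming a hypothetical application/document example. All class names are illustrative.

```python
from abc import ABC, abstractmethod


class Document(ABC):
    @abstractmethod
    def kind(self) -> str:
        ...


class TextDocument(Document):
    def kind(self) -> str:
        return "text"


class SpreadsheetDocument(Document):
    def kind(self) -> str:
        return "spreadsheet"


class Application(ABC):
    """Creator: uses the factory method without knowing the concrete class."""

    @abstractmethod
    def create_document(self) -> Document:
        """The factory method; subclasses decide what to instantiate."""

    def new_document(self) -> str:
        document = self.create_document()
        return f"opened a {document.kind()} document"


class TextApplication(Application):
    def create_document(self) -> Document:
        return TextDocument()


class SpreadsheetApplication(Application):
    def create_document(self) -> Document:
        return SpreadsheetDocument()
```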

Dependency injection

5. The simplest implementation: manually assemble services and clients in the root place.

4. Full-size implementation: the client implements the injection interface, and the injector class takes care of setting up and switching the dependencies.

3. Pass in the dependency via a setter method. Drawback: how to ensure the setter was called correctly?

2. Pass the dependency via the Client's constructor. Drawback: hard to change the Service later.

1. Without the injection, the Client directly depends on the Service.

Structure and collaborations

A class accepts objects it requires from an injector instead of creating the objects directly.
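A minimal sketch of constructor injection with manual assembly in one root place (variants 2 and 5 in the list above). The `Service` protocol, `RealService`, `FakeService`, and `build_client` are hypothetical names.

```python
from typing import Protocol


class Service(Protocol):
    """The abstraction the client depends on."""

    def fetch(self) -> str:
        ...


class RealService:
    def fetch(self) -> str:
        return "real data"


class FakeService:
    def fetch(self) -> str:
        return "fake data"


class Client:
    """The client receives its dependency instead of creating it."""

    def __init__(self, service: Service) -> None:
        self._service = service

    def report(self) -> str:
        return f"client got {self._service.fetch()}"


def build_client() -> Client:
    # Hypothetical composition root: the only place that names a concrete service.
    return Client(RealService())
```

Because the client never names a concrete class, a test can pass `FakeService()` without touching `Client`.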

Builder

Bicycle

Maze

Part3

Part2

Part1

Collaborations

Each converter class is called a builder in the pattern, and the reader is called the director. Applied to this example, the Builder pattern separates the algorithm for interpreting a textual format (that is, the parser for RTF documents) from how a converted format gets created and represented. This lets us reuse the RTFReader's parsing algorithm to create different text representations from RTF documents—just configure the RTFReader with different subclasses of TextConverter.

Separate the construction of a complex object from its representation, allowing the same construction process to create different representations.

Abstract factory

Sample

Python

Use the class itself as factory

C++

Motivation

Intent

Provide an interface for creating families of related or dependent objects without specifying their concrete classes.

A software design pattern is a general, reusable solution to a commonly occurring problem within a given context in software design.

Design pattern is more general than software design pattern

Design pattern is a reusable form of solution to a design problem.

An object contains encapsulated data and procedures grouped together to represent an entity. The 'object interface' defines how the object can be interacted with. An object-oriented program is described by the interaction of these objects.

Object-oriented design is the discipline of defining the objects and their interactions to solve a problem that was identified and documented during object-oriented analysis.

Reliability Engineering

Software reliability

Metric

The number of software faults, expressed as faults per thousand lines of code

Software unreliability is the result of unanticipated results of software operations.

Hardware unreliability is a result of component or material failure that results in the system not performing its intended function. Repairing or replacing the hardware component restores the system to original operating state.

Reliability assessment

FTA: fault tree analysis

Failure probability (at input events)

Unlike conventional logic gate diagrams, the gates in a fault tree output probabilities related to the set operations of Boolean logic. The probability of a gate's output event depends on the input event probabilities.
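A small sketch of how gate output probabilities follow from input probabilities. It assumes the input events are statistically independent, which the notes do not state; the function names are hypothetical.

```python
from math import prod
from typing import Iterable


def and_gate(probabilities: Iterable[float]) -> float:
    """Output event occurs only if ALL inputs occur (assumes independence)."""
    return prod(probabilities)


def or_gate(probabilities: Iterable[float]) -> float:
    """Output event occurs if ANY input occurs (assumes independence)."""
    return 1.0 - prod(1.0 - p for p in probabilities)
```

With input probabilities 0.1 and 0.2, the AND gate gives 0.1 × 0.2 = 0.02 and the OR gate gives 1 − 0.9 × 0.8 = 0.28.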

FMEA

Example FMEA worksheet

The analysis should always be started by listing the functions that the design needs to fulfill.

Terms

Severity (S): The consequence of the failure mode.

V: Catastrophic

IV: Critical

III: Minor, no damage, light injuries.

II: Very minor, no damage, no injuries

I: No relevant effect on reliability or safety

Probability (P): the likelihood of the failure occurring.

E: Frequent

D: Reasonably possible

C: Occasional

B: Remote (relatively few failures)

A: Extremely unlikely

Local effect: The failure effect as it applies to the item under analysis.

Failure effect: the immediate consequence of a failure on operation or, more generally, on the customer/user needs that the function should fulfill but now does not, or not fully.

Failure mode: The specific manner or way by which a failure occurs in terms of failure of the part, component, function, equipment, subsystem, or system under investigation.

Failure: The loss of function under stated conditions

Redundancy design

Active redundancy

General expression

Dual redundant system

Series reliability mode

Distributions

Normal distribution

Exponential distribution

f(t) = λ e^(-λt)

R(t) = 1 - F(t) = 1 - (1 - e^(-λt)) = e^(-λt)
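The two formulas above can be evaluated numerically as in this sketch. The function names are hypothetical; `failure_rate` stands for λ.

```python
import math


def reliability(failure_rate: float, t: float) -> float:
    """R(t) = e^(-λt): probability of surviving beyond time t."""
    return math.exp(-failure_rate * t)


def failure_density(failure_rate: float, t: float) -> float:
    """f(t) = λ e^(-λt): time-to-failure density."""
    return failure_rate * math.exp(-failure_rate * t)
```

For example, with λ = 0.001 failures per hour, R(1000) = e^(-1), so roughly 37% of units are expected to survive 1000 hours.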

Failure rate is the frequency with which an engineered system or component fails, expressed in failures per unit of time.

Under certain engineering assumptions (e.g. besides the above assumptions for a constant failure rate, the assumption that the considered system has no relevant redundancies), the failure rate for a complex system is simply the sum of the individual failure rates of its components, as long as the units are consistent, e.g. failures per million hours. This permits testing of individual components or subsystems, whose failure rates are then added to obtain the total system failure rate

MTTF

An example from "Computer Architecture - A Quantitative Approach"

Definition from "Computer Architecture - A Quantitative Approach"

MTTR

The average time required to repair a failed component or device

Availability: MTBF/(MTBF + MTTR)

MTBF

Calculation

For network components

Calculation for in-series and in-parallel components

Intuitively, both these formulae can be explained from the point of view of failure probabilities. First of all, let's note that the probability of a system failing within a certain timeframe is the inverse of its MTBF. Then, when considering a series of components, failure of any component leads to the failure of the whole system, so (assuming that failure probabilities are small, which is usually the case) the probability of the failure of the whole system within a given interval can be approximated as the sum of the failure probabilities of the components. With parallel components the situation is a bit more complicated: the whole system will fail if and only if, after one of the components fails, the other component fails while the first component is being repaired; this is where the mean down time (MDT) comes into play: the faster the first component is repaired, the smaller the "vulnerability window" for the other component to fail.
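The reasoning above can be turned into a short sketch for two components. The formulas are derived from the small-probability approximation described in the paragraph and are an assumption of this sketch; `series_mtbf` and `parallel_mtbf` are hypothetical names.

```python
def series_mtbf(mtbf1: float, mtbf2: float) -> float:
    """Series: failure rates (1/MTBF) add, because any failure stops the system."""
    return 1.0 / (1.0 / mtbf1 + 1.0 / mtbf2)


def parallel_mtbf(mtbf1: float, mdt1: float, mtbf2: float, mdt2: float) -> float:
    """Parallel: the system fails only if one component fails during the
    other's down time (assumes small failure probabilities).

    System failure rate ~ (1/mtbf1) * (mdt1/mtbf2) + (1/mtbf2) * (mdt2/mtbf1).
    """
    return (mtbf1 * mtbf2) / (mdt1 + mdt2)
```

With two components of MTBF 1000 h and MDT 10 h each, the series arrangement gives about 500 h while the parallel arrangement gives about 50,000 h, which shows how a short repair time shrinks the vulnerability window.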

Any practically-relevant calculation of MTBF or probabilistic failure prediction based on MTBF requires that the system is working within its "useful life period", which is characterized by a relatively constant failure rate (the middle part of the "bathtub curve") when only random failures are occurring.

Assuming a constant failure rate λ results in a failure density function as follows: f(t) = λ e^(-λt), which, in turn, simplifies the above-mentioned calculation of MTBF to the reciprocal of the failure rate of the system:

MTBF = 1/λ

In practice, the mean time between failures (MTBF, 1/λ) is often reported instead of the failure rate. This is valid and useful if the failure rate may be assumed constant, which holds only in the flat region of the bathtub curve, also called the "useful life period".

Bathtub curve: The 'bathtub curve' hazard function (blue, upper solid line) is a combination of a decreasing hazard of early failure (red dotted line) and an increasing hazard of wear-out failure (yellow dotted line), plus some constant hazard of random failure (green, lower solid line)

With reliability function R(t) or density function f(t)

From the definition

Mean time between failures (MTBF) is the predicted elapsed time between inherent failures of a mechanical or electronic system, during normal system operation. MTBF can be calculated as the arithmetic mean (average) time between failures of a system.

The term is used for repairable systems

Failure rate λ

Plot

Example of establishing failure rate

Hazard rate h(t) is failure rate λ(t) when Δt tends to zero

For exponential failure distribution, the hazard rate h(t) equals λ

Proof
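One way to write the argument, using h(t) = f(t)/R(t) together with the exponential f(t) and R(t) given elsewhere in these notes:

```latex
\[
h(t) \;=\; \frac{f(t)}{R(t)}
     \;=\; \frac{\lambda e^{-\lambda t}}{e^{-\lambda t}}
     \;=\; \lambda
\]
```

The hazard rate does not depend on t, which is the "constant failure rate" property of the exponential distribution.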

Failure rate is a conditional probability

Failure rate λ is the frequency with which an engineered system or component fails, expressed in failures per unit of time.

Definition: The total number of failures within an item population, divided by the total time expended by that population, during a particular measurement interval under stated conditions.

Survival function (S(t)) or Reliability Function (R(t))

Failure distribution function f(t)

f(t): time to (first) failure distribution (i.e., the failure density function)

Cumulative distribution function F(t)

It describes the probability of failure (at least) up to and including time t

Rules of Probability

Popular architectures in detail

Serverless

Sam's speech at goto; conference

API Gateway

FaaS

BaaS

Mike Roberts' definition

5. Implicit high availability

4. Performance capabilities defined in terms other than host size/count.

3. Costs based on precise usage.

2. Self auto-scale and provision based on load (demand)

1. No management of server hosts or server processes

Serverless architectures are application designs that incorporate third-party “Backend as a Service” (BaaS) services, and/or that include custom code run in managed, ephemeral containers on a “Functions as a Service” (FaaS) platform. By using these ideas, and related ones like single-page applications, such architectures remove much of the need for a traditional always-on server component. Serverless architectures may benefit from significantly reduced operational cost, complexity, and engineering lead time, at a cost of increased reliance on vendor dependencies and comparatively immature supporting services.

Microservices

Saga

Choreographed sagas

Orchestrated sagas

Camunda

Zeebe

Rollback and compensating transactions
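A minimal, in-process sketch of rollback via compensating transactions, in the style of an orchestrated saga. It assumes each step is a pair of plain callables (action, compensation); `run_saga` is a hypothetical helper, not the API of Camunda or Zeebe.

```python
from typing import Callable, List, Tuple

# Each step pairs a forward action with the compensation that undoes it.
Step = Tuple[Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step]) -> bool:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    compensations: List[Callable[[], None]] = []
    for action, compensate in steps:
        try:
            action()
        except Exception:
            # The failed step did not complete, so only earlier steps are undone.
            for undo in reversed(compensations):
                undo()
            return False
        compensations.append(compensate)
    return True
```

Unlike a database rollback, a compensation is a new forward operation (for example "release stock" after "reserve stock"), so the intermediate states are visible to other services.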

The first option could be to just not split the data apart in the first place.

Avoid using distributed transactions

Service mesh and API gateway

API gateway

Ambassador

The API gateway's main concern is mapping requests from external parties to internal microservices.

Ex. usages

API keys

Rate limiting

Logging

Akin to HTTP proxy

Service mesh

Istio

Linkerd

Aren't service meshes smart pipes?

Common behaviors we put into service meshes are not specific to any one microservice. No business functionality has leaked to the outside. We are configuring generic things like how request time-outs are handled.

To implement shared behaviors among microservices

Service discovery

Dynamic service registries

Kubernetes

Consul

ZooKeeper

DNS

Handling changes between microservices

Coexisting incompatible microservice versions

Communication implementation

Techniques

Message brokers

Choices

Kafka

Topics and queues

Topic: event driven

Queue: request-response

Message brokers are intermediaries, often called middleware, that sit between processes to manage communication between them.

GraphQL

REST

Two styles

Event driven (Atom)

Request-response (main)

Six architectural constraints

Code on demand (optional)

Cacheable

Stateless

Client-server

Hypermedia as the engine of application state (HATEOAS)

Resources and their representations

JSON and XML

Once you have a representation of a resource, a Customer for example, you can make requests to create, change, or update it. The external representation of a resource is completely decoupled from how it is stored internally.

Representational State Transfer: an architectural style where you expose resources (Customer, Order, etc.) that can be accessed via a common set of verbs (GET, POST, PUT).

gRPC

Over HTTP

Communication patterns

Event-driven

Request-response

Asynchronous

Synchronous

Through common data

Asynchronous non-blocking

Synchronous blocking

How to model microservices

Eight principles -- from the conference talk

8. Highly observable

7. Isolate failure

6. Consumer first

5. Deploy independently

4. Decentralize all the things

3. Hide implementation detail

2. Culture of automation

1. Modelled around business domain

Follow DDD

Event storming

Identify aggregates

Identify commands

Identify events

Mapping aggregates and bounded contexts to microservices

Coarser-grained bounded contexts can in turn contain further bounded contexts.

Both can therefore work well as service boundaries.

As you find your feet and decide to break these services into smaller services, remember that aggregates themselves should not be split apart.

When starting out, you want to reduce the number of services you work with, so you should probably target services that encompass entire bounded contexts.

Both can give us units of cohesion with well-defined interfaces to the wider system.

Bounded context

It represents a collection of associated aggregates, with an explicit interface to the wider world.

Aggregate

It's a self-contained state machine that focuses on a single domain concept.

Ubiquitous language

Good boundary

The prior art can still guide us in how to define a good microservice boundary.

Coupling and cohesion are obviously related

A structure is stable if cohesion is strong and coupling is low.

Coupling

When services are loosely coupled, a change to one service should not require a change to another.

Cohesion

So we want to find boundaries within our problem domain that help ensure related behavior is in one place and that communicate with other boundaries as loosely as possible.

The code that changes together, stays together.

Information hiding

By reducing the number of assumptions that one module (or microservice) makes about another, we directly impact the connections between them.

The connections between modules are the assumptions which the modules make about each other.

In essence, microservices are just another form of modular decomposition, albeit one that has network-based interaction between the modules and all the associated challenges that brings.

Key concepts

Flexibility

Size

Don't worry about size, instead, focus on two key things:

2. How do you define microservice boundaries to get the most out of them, without everything becoming a horribly coupled mess?

1. How many microservices can you handle?

"As small an interface as possible" -- Chris Richardson.

"A microservice should be as big as my head" -- James Lewis.

When you get into what makes microservices work as a type of architecture, the concept of size is actually one of the least interesting aspects.

How big should a microservice be?

Alignment of architecture and organization

Conway's Law: Organizations which design systems ... are constrained to produce designs which are copies of communication structures of these organizations.

The microservice case

The three-tier case

Owning their own state

If a microservice wants to access data held by another microservice, it should go and ask that second microservice for the data.

Avoid the use of shared databases.

Modeled around a business domain

Comparing to layered architecture

By this, we can make it easier to roll out new functionality and recombine microservices in different ways to deliver new functionality to our users.

Independent deployability

Hence, we must make sure:

2. we have explicit, well-defined, and stable contracts between our microservices.

1. our microservices are loosely coupled.

We can make a change to a microservice, deploy it, and release that change to our users, without having to deploy any other microservices.

Software Architecture Design

IEEE-1471-2000

Viewpoints

Link bit error rate viewpoint

Viewpoint language

What is the feasibility of building and maintaining consistency with operational information flow?

What is the bit error rate on a communication link?

Physical interconnect viewpoint

Viewpoint languages

Shared link

Point to point link

Physical identifiable node

What is the feasibility of construction, compliance with standards, and evolvability?

What are the physical communications interconnects and their layering among system components?

Behavioral viewpoint

Analytic methods

Partially ordered sets of events

Pi-calculus

Communicating sequential processes

Modeling methods

Operations on those entities

States

Processes

Events

Concerns

What are the behaviors of system components? How do they interact?

How do these actions relate (ordering, synchronization, etc.)?

What are the kinds of actions the system produces and participates in?

What are the dynamic behaviors of and within a system?

Structural viewpoint

Perry and Wolf

Element classes

Connecting elements

Data elements

Processing elements

Architecture = {elements, form, rationale}

Conceptual model of AD

Component

Component-Based Software Engineering (CBSE, or CBD)

Component architecture

Application Server

The combination of application servers and software components is usually called distributed computing.

Middleware

Categories from QCCSTP

Communication based categories

ORB

MOM

Advantages

Transformation

Routing

Asynchronicity

RPC

Layered categories

Integration middleware

IBM WebSphere

EAI

Workflow

General-purpose middleware

IBM MQSeries

BEA WebLogic

IONA Orbix

Low-level middleware

Products

Microsoft CLR

Sun JVM

Technologies

ACE

CLR

JVM

Categories

Machine-time service

Human-time service

Other definitions

Database access services are often characterised as middleware. Some of them are language specific implementations and support heterogeneous features and other related communication features. Examples of database-oriented middleware include ODBC, JDBC and transaction processing monitors.

ObjectWeb

Services that can be regarded as middleware include:

Enterprise service bus

Object request broker (ORB)

Message oriented middleware (MOM)

Data integration

Enterprise application integration (EAI)

The software layer that lies between the operating system and applications on each side of a distributed computing system in a network.

IETF

Middleware includes web servers, application servers, content management systems, and similar tools that support application development and delivery.

In this more specific sense middleware can be described as the dash ("-") in client-server, or the -to- in peer-to-peer.

Those services found above the transport (i.e. over TCP/IP) layer set of services but below the application environment (i.e. below application-level APIs).

Middleware is a type of computer software which provides services to software applications beyond those available from the operating system. It can be described as 'software glue'.

Three characteristics

Independent failure of components

Lack of a global clock

Concurrency of components

Distributed computing is a field that studies distributed systems. A distributed system is a system whose components are located on different network computers, which communicate and coordinate their actions by passing messages to one another from any system. The components interact with one another in order to achieve a common goal.

A computer running several software components is often called an application server.

Component models

A component model is a definition of properties that components must satisfy, methods and mechanisms for the composition of components

CORBA

COM/DCOM

EJB

An individual software component is a software package, a web service, a web resource or a module that encapsulates a set of related functions (or data). Components communicate with each other via interfaces.

Design: Discovering components

Anti-pattern: Entity trap

Workflow approach

Event-storming

Actor/Actions

6. Goto 3

5. Restructure components

4. Analyze architecture characteristics

3. Analyze roles and responsibilities

2. Assign requirements to components

1. Identify initial components

Examples and varieties

Distributed service

Event processor

A layer or subsystem

A wrapper of a collection of code

Two characteristics

Independently upgradable

Independently replaceable

An individual software component is a software package, a web service, a web resource, or a module that encapsulates a set of related functions (or data).

Quality Attributes

Measuring

...

Identifying

Extract architecture characteristics from requirements

Extract architecture characteristics from domain concerns

List

references

Wiki

Cross-cutting

Usability/Achievability

Security

Security is a measure of a system's ability to protect data and information from unauthorized access while still providing access to people and systems that are authorized.

while being attacked

Integrity

Confidentiality

Privacy

Legal

Archivability

Accessibility

Structural

Upgradability

Supportability

Portability

Maintainability

Localization

Leverageability/reuse

Installability

Extensibility

Configurability

Operational

Elasticity

Scalability

Robustness

Reliability/Safety

Recoverability

Continuity

Availability

The degree to which a system is in a specified operational and committable state at the start of a mission, when the mission is called for at an unknown, i.e., a random, time.

MTBF/(MTBF + MTTR)

Availability refers to a property of software, that is there and ready to carry out its task when you need it to be.

Architecture style and patterns

Recognized patterns and styles

Space-based architecture (SBA)

Apache Geode

Block Diagram

Virtualized middleware

Deployment manager

Processing grid

Data grid

Messaging grid

Processing unit

Data replication engine

In-memory data grid cache

Space-based architecture (SBA) is a distributed-computing architecture for achieving linear scalability of stateful, high-performance applications using the tuple space paradigm. It follows many of the principles of representational state transfer (REST), service-oriented architecture (SOA) and event-driven architecture (EDA), as well as elements of grid computing. With a space-based architecture, applications are built out of a set of self-sufficient units, known as processing-units (PU). These units are independent of each other, so that the application can scale by adding more units.

Shared-nothing architecture

Service-oriented (SOA)

Service oriented architecture

Key difference from microservices

Has an ESB

Service structure

Implementation

Contract

Interface

Type of services

Infrastructure service

Application service

Enterprise service

Functional service

Service requester/consumer

Service broker, registry or repository

Service provider

Offspring

SaaS

Mashups

Cloud computing

Came from

Modular programming

Distributed computing

It promotes loose coupling between services and separates functions into distinct units, or services, which developers make accessible over a network in order to combine and reuse them in the production of applications. These services and their corresponding consumers communicate with each other by passing data in a well-defined, shared format, or by coordinating an activity between two or more services.

Rule-based

Representational state transfer (REST)

Web Service

APIs

Media type

Standard HTTP Methods

DELETE: delete the target resource's state

PUT: Create or replace the state of the target resource with the state defined by the representation enclosed in the request

POST: Let the target resource process the representation enclosed in the request

GET: Get the representation of the target resource's state

Base URI

Architectural constraints

Uniform interface

Code on demand

Layered system

Cacheability

Statelessness

Client-server architecture

REST is a software architectural style that was created to guide the design and development of the architecture for the World Wide Web. REST defines a set of constraints for how the architecture of an Internet-scale distributed hypermedia system, such as the Web, should behave. The REST architectural style emphasises the scalability of interactions between components, uniform interfaces, independent deployment of components, and the creation of a layered architecture to facilitate caching components to reduce user-perceived latency, enforce security, and encapsulate legacy systems.

Any web service that obeys the REST constraints is informally described as RESTful. Such a web service must provide its Web resources in a textual representation and allow them to be read and modified with a stateless protocol and a predefined set of operations. This approach allows the greatest interoperability between clients and servers in a long-lived Internet-scale environment which crosses organisational (trust) boundaries.

Reactive architecture

Plug-ins

Media Players

Eclipse IDE

Email clients

Mechanism

The host application provides services which the plug-in can use, including a way for plug-ins to register themselves with the host application and a protocol for the exchange of data with plug-ins. Plug-ins depend on the services provided by the host application and do not usually work by themselves. Conversely, the host application operates independently of the plug-ins, making it possible for end-users to add and update plug-ins dynamically without needing to make changes to the host application.

Programmers typically implement plug-ins as shared libraries, which get dynamically loaded at run time.

A plug-in is a software component that adds a specific feature to an existing computer program. When a program supports plug-ins, it enables customization.
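A minimal in-process sketch of the registration mechanism described in this section. It assumes plug-ins are plain callables that transform text; real hosts typically load shared libraries dynamically, which is not shown. `Host`, `register`, and `process` are hypothetical names.

```python
from typing import Callable, Dict


class Host:
    """Host application: works on its own and lets plug-ins register themselves."""

    def __init__(self) -> None:
        self._plugins: Dict[str, Callable[[str], str]] = {}

    def register(self, name: str, plugin: Callable[[str], str]) -> None:
        # The registration service the host offers to plug-ins.
        self._plugins[name] = plugin

    def process(self, text: str) -> str:
        # The agreed data-exchange protocol: each plug-in maps text to text.
        for plugin in self._plugins.values():
            text = plugin(text)
        return text
```

The host returns its input unchanged when no plug-in is registered, which mirrors the point that the host operates independently of its plug-ins.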

Pipes and filters

In software engineering, a pipeline consists of a chain of processing elements (processes, threads, coroutines, functions, etc.), arranged so that the output of each element is the input of the next; the name is by analogy to a physical pipeline. Usually some amount of buffering is provided between consecutive elements. The information that flows in these pipelines is often a stream of records, bytes, or bits, and the elements of a pipeline may be called filters; this is also called the pipes and filters design pattern. Connecting elements into a pipeline is analogous to function composition.

Narrowly speaking, a pipeline is linear and one-directional, though sometimes the term is applied to more general flows. For example, a primarily one-directional pipeline may have some communication in the other direction, known as a return channel or backchannel, as in the lexer hack, or a pipeline may be fully bi-directional.
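A small sketch of a linear, one-directional pipeline built from Python generators, where each filter consumes the output of the previous one. The filter names are hypothetical.

```python
from typing import Iterable, Iterator


def parse_numbers(lines: Iterable[str]) -> Iterator[int]:
    """Filter 1: turn a stream of text records into integers."""
    for line in lines:
        yield int(line)


def keep_even(numbers: Iterable[int]) -> Iterator[int]:
    """Filter 2: drop odd values."""
    for number in numbers:
        if number % 2 == 0:
            yield number


def square(numbers: Iterable[int]) -> Iterator[int]:
    """Filter 3: square each remaining value."""
    for number in numbers:
        yield number * number


def pipeline(lines: Iterable[str]) -> Iterator[int]:
    # Connecting the filters is plain function composition.
    return square(keep_even(parse_numbers(lines)))
```

Because generators are lazy, each record flows through all filters before the next one is read, so no filter needs the whole stream in memory.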

Peer-to-Peer

Block diagram

eDonkey

Bittorrent

Compare to Client-Server architecture

A peer-to-peer network is designed around the notion of equal peer nodes simultaneously functioning as both "clients" and "servers" to the other nodes on the network. This model of network arrangement differs from the client–server model where communication is usually to and from a central server. A typical example of a file transfer that uses the client–server model is the File Transfer Protocol (FTP) service in which the client and server programs are distinct: the clients initiate the transfer, and the servers satisfy these requests.

Definition

Peer-to-peer (P2P) computing or networking is a distributed application architecture that partitions tasks or workloads between peers. Peers are equally privileged, equipotent participants in the application. They are said to form a peer-to-peer network of nodes.

Peers make a portion of their resources, such as processing power, disk storage or network bandwidth, directly available to other network participants, without the need for central coordination by servers or stable hosts. Peers are both suppliers and consumers of resources, in contrast to the traditional client–server model in which the consumption and supply of resources is divided.
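A minimal in-memory sketch of the idea that every peer is both a supplier and a consumer of resources. The `Peer` class and its methods are hypothetical; there is no real networking here, and neighbor discovery is reduced to an explicit `connect` call.

```python
from typing import Dict, List, Optional


class Peer:
    """A node that both serves chunks to others and fetches chunks from them."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.chunks: Dict[int, bytes] = {}   # resources this peer can supply
        self.neighbors: List["Peer"] = []    # directly connected peers

    def connect(self, other: "Peer") -> None:
        """Link two peers symmetrically; neither side is privileged."""
        self.neighbors.append(other)
        other.neighbors.append(self)

    def serve(self, chunk_id: int) -> Optional[bytes]:
        """Server role: hand out a chunk if this peer holds it."""
        return self.chunks.get(chunk_id)

    def fetch(self, chunk_id: int) -> Optional[bytes]:
        """Client role: ask direct neighbors, then keep a copy to serve later."""
        if chunk_id in self.chunks:
            return self.chunks[chunk_id]
        for peer in self.neighbors:
            data = peer.serve(chunk_id)
            if data is not None:
                self.chunks[chunk_id] = data
                return data
        return None
```

After a successful `fetch`, the requesting peer can itself serve the chunk, which is the property that distinguishes this arrangement from a fixed client and server pair.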

Monolithic application

Word processor

Microservice architecture

Martin Fowler at GOTO 2014

James Lewis, Martin Fowler

Compare to SOA

Grain

SOA and microservice architecture differ in scope: SOA has an enterprise scope, whereas a microservice architecture has an application scope.

Service granularity

If domain-driven design is being employed in modeling the domain for which the system is being built, then a microservice could be as small as an aggregate or as large as a bounded context.

Making services too small is bad practice, because the runtime overhead and the operational complexity can then overwhelm the benefits of the approach.

Services that are dedicated to a single task, such as calling a particular backend system or making a particular type of calculation, are called atomic services. Similarly, services that call such atomic services in order to consolidate an output are called composite services.

There is no consensus on, or fixed limit to, service granularity, as the right answer depends on the business and organizational context.

A key step in defining a microservice architecture is figuring out how big an individual microservice has to be.
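The distinction between atomic and composite services noted above can be illustrated with plain functions standing in for services. This is only a sketch: the service names, the price table and the 10% tax rate are invented for the example, and in a real system each function would be a separately deployed process reached over the network.

```python
# Hypothetical price table, in cents, standing in for a backend system.
PRICES_IN_CENTS = {"book": 1000, "pen": 250}


def price_service(item: str) -> int:
    """Atomic service: a single task, looking up a price in the backend."""
    return PRICES_IN_CENTS[item]


def tax_service(amount_in_cents: int) -> int:
    """Atomic service: a single calculation (an assumed flat 10% tax)."""
    return amount_in_cents * 10 // 100


def quote_service(item: str) -> dict:
    """Composite service: calls atomic services and consolidates the output."""
    price = price_service(item)
    tax = tax_service(price)
    return {"item": item, "price": price, "tax": tax, "total": price + tax}
```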

What it is not

It's not a layer within a monolithic application

What it is

4. Services are small in size, messaging-enabled, bounded by context, autonomously developed, independently deployable, decentralized and built and released with automated processes.

3. Services can be implemented using different programming languages, databases, and hardware and software environments, depending on what fits best.

2. Services are organized around business capabilities

1. Services in a microservice architecture are often processes that communicate over a network to fulfill a goal using technology-agnostic protocols such as HTTP.

Layered (or multilayered) architecture

Three-tier

Data tier

Logical tier

Presentation tier

Persistence layer

Business layer

Service layer (Application layer)

Presentation Layer

Event-driven (or implicit invocation)

Resources

The Many Meanings of Event-Driven Architecture • Martin Fowler • GOTO 2017

The Saga Pattern in Microservices (EDA - part 2)

What is Event Driven Architecture? (EDA - part 1)

Pros and Cons

Cons

Inconsistency

Complexity

Performance

Pros

Reverse dependency

Decouple components

JavaScript

Java Swing

Event processing engine (Event sink)

Event channel

Event generator/emitter

What is event?

Structure

Event body

Event header

In an EDA system, what is produced, published, propagated, detected or consumed is a (typically asynchronous) message called the event notification, and not the event itself.

A significant change in state

Event-driven architecture (EDA) is a software architecture paradigm promoting the production, detection and consumption of, and reaction to, events.
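The pieces listed in this section (event header and body, generator/emitter, channel, and sink) can be sketched as follows. All names here (`EventNotification`, `EventChannel`, the `"order.placed"` type) are hypothetical, and delivery is synchronous and in-process purely to keep the example short; the notes describe notifications as typically asynchronous.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable, DefaultDict, Dict, List


@dataclass(frozen=True)
class EventNotification:
    """The message that travels through the system, not the event itself."""
    header: Dict[str, Any]   # metadata, e.g. the event type
    body: Dict[str, Any]     # what changed


Sink = Callable[[EventNotification], None]


class EventChannel:
    """Carries notifications from emitters to the subscribed sinks."""

    def __init__(self) -> None:
        self._sinks: DefaultDict[str, List[Sink]] = defaultdict(list)

    def subscribe(self, event_type: str, sink: Sink) -> None:
        self._sinks[event_type].append(sink)

    def publish(self, notification: EventNotification) -> None:
        # The emitter does not know which sinks exist (decoupled components).
        for sink in self._sinks[notification.header["type"]]:
            sink(notification)
```

An emitter would call `channel.publish(EventNotification({"type": "order.placed"}, {"order_id": 1}))` after a significant change in state, and any sink subscribed to `"order.placed"` reacts without the emitter depending on it.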

Data-centric

Component-based

Client-Server (2-tier, 3-tier, n-tier, cloud computing)

Compare to Peer-to-Peer architecture

Network printing

World Wide Web

Email

Client–server model is a distributed application structure that partitions tasks or workloads between the providers of a resource or service, called servers, and service requesters, called clients. Often clients and servers communicate over a computer network on separate hardware, but both client and server may reside in the same system. A server host runs one or more server programs, which share their resources with clients. A client usually does not share any of its resources, but it requests content or service from a server. Clients, therefore, initiate communication sessions with servers, which await incoming requests.
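A small sketch of the roles described above, using only the Python standard library: the server awaits incoming requests and shares a service (here, upper-casing a line of text, chosen arbitrarily), while the client initiates the session. The handler and helper names are illustrative, and both sides run on the same machine over the loopback address, which the description above allows.

```python
import socket
import socketserver
import threading


class UpperCaseHandler(socketserver.StreamRequestHandler):
    """Server side: waits for a request line and answers it."""

    def handle(self) -> None:
        line = self.rfile.readline()
        self.wfile.write(line.upper())


def start_server() -> socketserver.TCPServer:
    """Bind to a free loopback port and serve in a background thread."""
    server = socketserver.TCPServer(("127.0.0.1", 0), UpperCaseHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def request(address, message: str) -> str:
    """Client side: initiate the session, send a request, read the reply."""
    with socket.create_connection(address) as conn:
        conn.sendall(message.encode() + b"\n")
        return conn.makefile("rb").readline().decode().strip()
```

Typical use is `server = start_server()`, then `request(server.server_address, "hello")`, followed by `server.shutdown()` and `server.server_close()` when done.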

Blackboard

Blackboard Systems - H. Penny Nii

Koala example

Solution-space

Jigsaw example

Simple model

Implementations

Adobe Acrobat OCR text recognition

Hearsay-II speech recognition system

Components

3. The control shell, which controls the flow of problem-solving activity in the system. Just as the eager human specialists need a moderator to prevent them from trampling each other in a mad dash to grab the chalk, KSs need a mechanism to organize their use in the most effective and coherent fashion. In a blackboard system, this is provided by the control shell.

Various kinds of information are made globally available to the control modules

The control information can be used by control modules to determine the focus of attention

Criteria are provided to determine when to terminate the process.

Behavior sequence

4. Depending on the information contained in the focus of attention, an appropriate control module prepares it for execution:

c. If the focus of attention is a knowledge source and an object, then that knowledge source is ready for execution. The knowledge source is executed together with the context, thus described.

b. If the focus of attention is a blackboard object, then a knowledge source is chosen which will process that object (event-scheduling approach).

a. If the focus of attention is a knowledge source, then a blackboard object is chosen to serve as the context of its invocation (knowledge-scheduling approach).

3. Using information from 1 and 2, a control module selects a focus of attention.

2. Each KS indicates the contribution it can make to the new solution state

1. A KS makes change(s) to blackboard object(s).

A control record is also kept

The focus of attention can be:

3. A combination of both.

2. Blackboard objects (i.e., which solution island to pursue next)

1. knowledge sources

The focus of attention indicates the next thing to do

Can be on the blackboard or kept separately

This is a set of control modules that monitor the changes on the blackboard and decide what actions to take next.

The knowledge sources respond opportunistically to changes on the blackboard.

2. The blackboard, a shared repository of problems, partial solutions, suggestions, and contributed information. The blackboard can be thought of as a dynamic "library" of contributions to the current problem that have been recently "published" by other knowledge sources.

The blackboard can have multiple panels

The relationships between objects are denoted by named links.

The objects and their properties define the vocabulary of the solution space.

The objects are hierarchically organized into levels of analysis

The blackboard consists of objects from the solution space.

The purpose of the blackboard is to hold computational and solution-state data needed by and produced by the knowledge sources.

1. The software specialist modules, which are called knowledge sources (KSs). Like the human experts at a blackboard, each knowledge source provides specific expertise needed by the application.

Each KS is responsible for knowing the conditions under which it can contribute a solution

It holds preconditions that indicate the condition on the blackboard which must exist before the body of the KS is activated.

The knowledge sources modify only the blackboard or control data structures (that also might be on the blackboard), and only the knowledge sources modify the blackboard.

The knowledge sources are represented as:

Logic assertions

Set of rules

Procedures

The objective of each KS is to contribute information that will lead to a solution to the problem

A KS takes the current state on the blackboard and updates it as encoded in its specialized knowledge

The domain knowledge needed to solve the problem is partitioned into knowledge sources

Framework

Metaphor

A group of specialists are seated in a room with a large blackboard. They work as a team to brainstorm a solution to a problem, using the blackboard as the workplace for cooperatively developing the solution.
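The three components described in this section (knowledge sources with preconditions, a shared blackboard, and a control shell with a termination criterion) can be sketched in a few lines. This is a deliberately reduced sketch under stated assumptions: the blackboard is a flat dictionary rather than a hierarchy of levels, the control shell simply picks the first knowledge source whose precondition holds as its focus of attention, and the two toy knowledge sources (`tokenizer`, `counter`) are invented for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Blackboard = Dict[str, Any]


@dataclass
class KnowledgeSource:
    name: str
    precondition: Callable[[Blackboard], bool]  # when this KS can contribute
    action: Callable[[Blackboard], None]        # the body; modifies the blackboard


def control_shell(blackboard: Blackboard,
                  sources: List[KnowledgeSource],
                  is_solved: Callable[[Blackboard], bool],
                  max_cycles: int = 100) -> List[str]:
    """Run knowledge sources until solved or none can contribute; return the trace."""
    trace: List[str] = []
    for _ in range(max_cycles):
        if is_solved(blackboard):               # termination criterion
            break
        ready = [ks for ks in sources if ks.precondition(blackboard)]
        if not ready:                           # no KS can make a contribution
            break
        focus = ready[0]                        # focus of attention: a KS
        focus.action(blackboard)                # the KS changes the blackboard
        trace.append(focus.name)
    return trace


tokenizer = KnowledgeSource(
    "tokenizer",
    lambda bb: "raw" in bb and "tokens" not in bb,
    lambda bb: bb.update(tokens=bb["raw"].split()),
)
counter = KnowledgeSource(
    "counter",
    lambda bb: "tokens" in bb and "count" not in bb,
    lambda bb: bb.update(count=len(bb["tokens"])),
)
```

Listing `counter` before `tokenizer` in `sources` does not change the outcome, because each knowledge source only fires when its precondition is met on the blackboard. This is the opportunistic behavior the notes describe, as opposed to a fixed call order.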