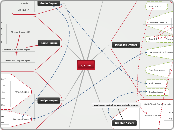

Business Sectors

Technology and Software Development

Manufacturing

Knowledge Management

Supply Chain Management

Human Resources and Talent Management

Research and Development

Healthcare

Education and Training

Regulatory Compliance

Customer Relationship Management (CRM)

Sales and Marketing

Finance and Banking

e-Business

Basic LLM Tasks

Content Generation and Correction

Information Extraction

Text-to-Text Transformation

Semantic Search

Sentiment Analysis

Content Personalization

Ethical and Bias Evaluation

Paraphrasing

Language Translation

Text Summarization

Conversational AI

Question Answering

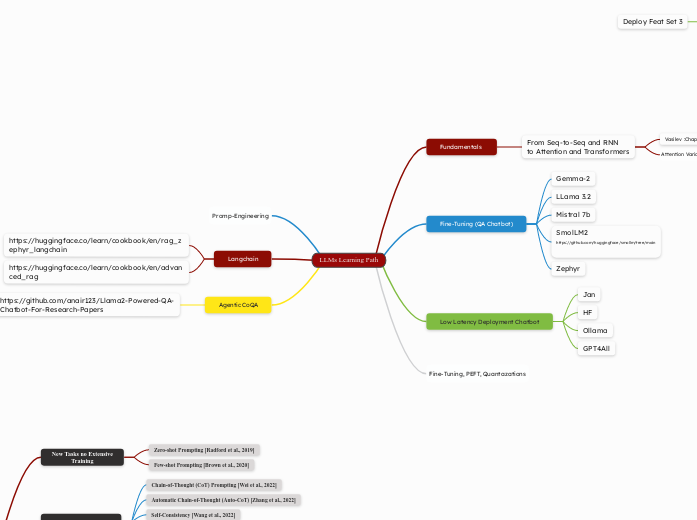

LLM Learning Path

Efficient LLMs

Efficient Inference

System-Level Inference

Efficiency Optimization

vLLM, DeepSpeed-Inference

Algorithm-Level Inference

Efficiency Optimization

Efficient Architecture

Long Context LLMs

MoE

Efficient Attention

Hardware-Assisted Attention

FlashAttention, vAttention

Learnable Pattern Strategies

HyperAttention

Fixed Pattern Strategies

Sparse Transformer, Longformer, Lightning Attention-2

Model Compression

Knowledge Distillation

Low-Rank Approximation

Parameter Pruning

Quantization

Quantization-Aware Training

Post-Training Quantization

Weight-Activation Co-Quantization

RPTQ, QLLM

Weight-Only Quantization

GPTQ, AWQ, SpQR

Efficient Fine-Tuning

Frameworks

Unsloth

MEFT (Q-LoRA, QA-LoRA, ...)

PEFT

Prompt Tuning

Prefix Tuning

Adapter-based Tuning

Low-Rank Adaptation (LoRA, DoRA)

AI Apps

Frameworks

Local

Gradio

Jan

Deployment

Anyscale

Hugging Face Inference Endpoints

Serving

vLLM

BentoML

Integration

LlamaIndex

Scaled Multi-Agents

Local AI Agents

Local Chatbots

LLM-Based Recommendation Chatbot

Medical QA ChatBot

Research Assistant (Talking Papers)

Generative AI Agents

Frameworks and Practices

RASA

LangChain / LangGraph

CrewAI

SmolAgents

Courses

HF Agents Course

Books

https://arxiv.org/pdf/2401.03428

https://www.arxiv.org/pdf/2504.01990

LLM Adaptation

Prompt Engineering

RAG

20 Types of RAG Architectures

LangChain

Indexes

Weaviate

FAISS

Pinecone

Qdrant

Chroma

Prompt

Agent

Chains

Memory

Fine-Tuning (Transfer Learning, Strategies)

- Instruction-tuning

- Alignment-tuning

- Transfer Learning

Fundamentals

HF Transformers, PyTorch

HF Course:

https://huggingface.co/docs/transformers/en/quicktour

Language Modeling and LLMs

https://arxiv.org/pdf/2302.08575

ChatGPT Discussion:

https://chatgpt.com/share/6819003f-e674-8005-af8b-11a7ef37bd70

https://arxiv.org/pdf/2303.18223

https://arxiv.org/pdf/2402.06853

https://arxiv.org/pdf/2303.05759

https://wandb.ai/madhana/Language-Models/reports/Language-Modeling-A-Beginner-s-Guide---VmlldzozMzk3NjI3

- Full Language Modeling

- Prefix Language Modeling

- Masked Language Modeling

- Unified Language Modeling

From Seq-to-Seq and RNN

to Attention and Transformers

Papers

Lewis- Wolf

Vasilev